FSM Gateway

Kubernetes Gateway API implementation provided by FSM

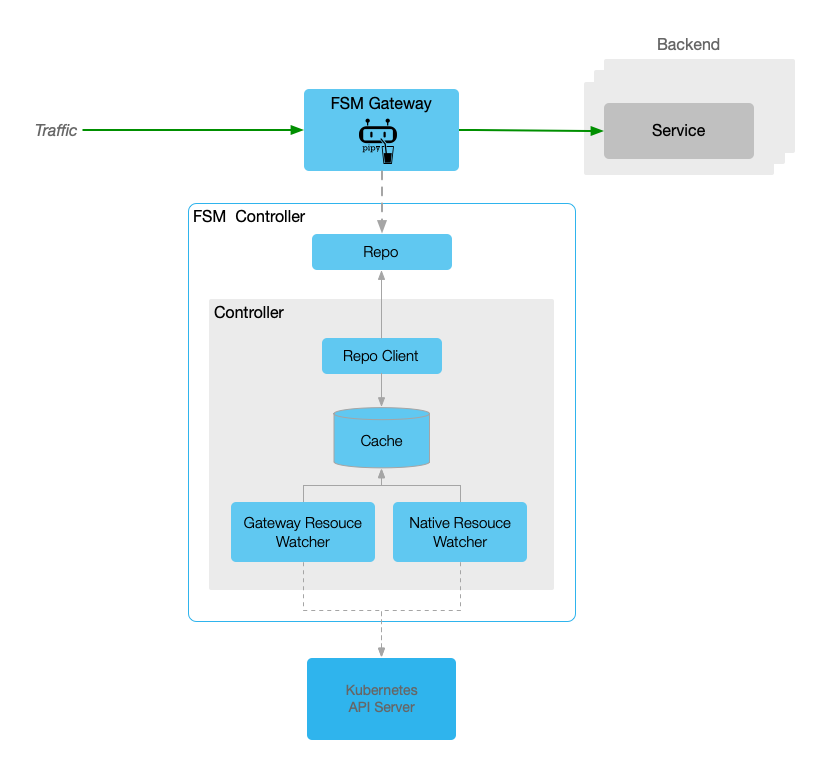

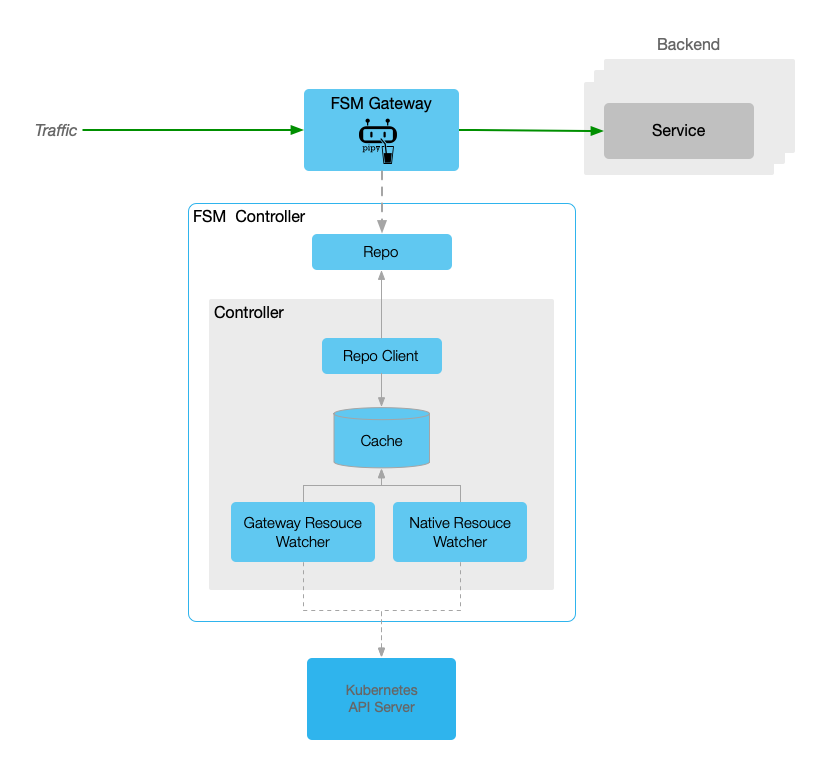

FSM Gateway is an implementation of the Kubernetes Gateway API and one of the components of FSM.

Once FSM Gateway is enabled, the FSM controller acts as the gateway controller: it watches both Kubernetes native resources and Gateway API resources, and dynamically pushes the resulting configuration to Pipy, which runs as the proxy.

If you are interested in FSM Gateway, the following documentation covers installation and the supported features.

1 - Installation

Enable FSM Gateway in cluster.

FSM Gateway must be enabled in FSM before it can be used. As with FSM Ingress, there are two ways to enable it.

Note: FSM Gateway requires Kubernetes v1.21.0 or higher.

Let’s start.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- FSM version >= v1.1.0.

- FSM CLI to install FSM and enable FSM Gateway.
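The version requirements above can be checked from a script before installing. The following is a minimal sketch, not part of FSM: `version_ge` is a hypothetical helper name, it assumes a `sort` that supports version sort (`-V`, as in GNU coreutils), and `v1.27.3` is only a placeholder for whatever version your cluster reports.

```shell
# Succeeds when version $1 is greater than or equal to version $2.
# A leading "v" is stripped, then `sort -V` decides which version is lower.
version_ge() {
  candidate="${1#v}"
  minimum="${2#v}"
  lowest="$(printf '%s\n%s\n' "$candidate" "$minimum" | sort -V | head -n 1)"
  [ "$lowest" = "$minimum" ]
}

# Placeholder version; substitute the server version of your own cluster.
if version_ge "v1.27.3" "v1.21.0"; then
  echo "Kubernetes version is new enough"
else
  echo "Kubernetes version is too old"
fi
```

The same helper can be reused for the FSM requirement, for example `version_ge "$fsm_version" "v1.1.0"`, where `fsm_version` is a variable you fill in yourself.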

Installation

One way to enable FSM Gateway is to enable it during FSM installation. Remember that it is disabled by default.

fsm install \
    --set=fsm.fsmGateway.enabled=true

Another approach is to enable it separately if you already have the FSM mesh installed.

Once done, we can check the GatewayClass resource in the cluster.

kubectl get GatewayClass

NAME CONTROLLER ACCEPTED AGE

fsm-gateway-cls flomesh.io/gateway-controller True 113s

fsm-gateway-cls is the GatewayClass we expect. The output above also shows the controller name.
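When this check runs inside a script, the ACCEPTED column can be read programmatically. The sketch below is an illustration only: `accepted_gatewayclasses` is a hypothetical helper name, and it assumes the default table layout shown above, with ACCEPTED as the third column.

```shell
# Prints the names of GatewayClasses whose ACCEPTED column is "True".
# Reads the default table output of `kubectl get GatewayClass` on stdin
# and skips the header row.
accepted_gatewayclasses() {
  awk 'NR > 1 && $3 == "True" { print $1 }'
}

# Sample input copied from the output above; in a cluster you would pipe
# `kubectl get GatewayClass` into the function instead.
accepted_gatewayclasses <<'TABLE'
NAME              CONTROLLER                      ACCEPTED   AGE
fsm-gateway-cls   flomesh.io/gateway-controller   True       113s
TABLE
```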

Unlike the Ingress controller, there is no Deployment or Pod until you create a Gateway manually.

Let’s create a simple FSM gateway.

Quickstart

To create an FSM gateway, we need to create a Gateway resource. This manifest sets up a gateway that listens on port 8000 and accepts xRoute resources from the same namespace.

xRoute stands for HTTPRoute, TLSRoute, TCPRoute, UDPRoute and GRPCRoute.

kubectl apply -n fsm-system -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: simple-fsm-gateway
spec:
  gatewayClassName: fsm-gateway-cls
  listeners:
  - protocol: HTTP
    port: 8000
    name: http
    allowedRoutes:
      namespaces:
        from: Same
EOF

Then we can check the resources:

kubectl get po,svc -n fsm-system -l app=fsm-gateway

NAME READY STATUS RESTARTS AGE

pod/fsm-gateway-fsm-system-745ddc856b-v64ql 1/1 Running 0 12m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/fsm-gateway-fsm-system LoadBalancer 10.43.20.139 10.0.2.4 8000:32328/TCP 12m

At this point, a request to the gateway port returns the following:

curl -i 10.0.2.4:8000/

HTTP/1.1 404 Not Found

content-length: 13

connection: keep-alive

Not found

That’s because we have not configured any route yet. Let’s create an HTTPRoute for the Service fsm-controller (the FSM controller has a Pipy repo running).

kubectl apply -n fsm-system -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: repo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  rules:
  - backendRefs:
    - name: fsm-controller
      port: 6060
EOF

Send the request again; this time it responds with 200.

curl -i 10.0.2.4:8000/

HTTP/1.1 200 OK

content-type: text/html

content-length: 0

connection: keep-alive

2 - HTTP Routing

This document details configuring HTTP routing in FSM Gateway with the HTTPRoute resource, outlining the setup process, verification steps, and testing with different hostnames.

In FSM Gateway, the HTTPRoute resource is used to configure route rules that match requests to backend services. Currently, a Kubernetes Service is the only accepted backend resource.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

Deploy sample

First, let’s install the example in namespace httpbin with commands below.

kubectl create namespace httpbin

kubectl apply -n httpbin -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/gateway/http-routing.yaml

Verification

Once done, we can check the installed gateway.

kubectl get pod,svc -n httpbin -l app=fsm-gateway

NAME READY STATUS RESTARTS AGE

pod/fsm-gateway-httpbin-867768f76c-69s6x 1/1 Running 0 16m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/fsm-gateway-httpbin LoadBalancer 10.43.41.36 10.0.2.4 8000:31878/TCP 16m

Besides the gateway resources, the sample also creates HTTPRoute resources.

kubectl get httproute -n httpbin

NAME HOSTNAMES AGE

http-route-foo ["foo.example.com"] 18m

http-route-bar ["bar.example.com"] 18m

Testing

To test the rules, we should get the address of gateway first.

export GATEWAY_IP=$(kubectl get svc -n httpbin -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

We can trigger a request to gateway without hostname.

curl -i http://$GATEWAY_IP:8000/headers

HTTP/1.1 404 Not Found

server: pipy-repo

content-length: 0

connection: keep-alive

It responds with 404. Next, we can try with the hostnames configured in HTTPRoute resources.

curl -H 'host:foo.example.com' http://$GATEWAY_IP:8000/headers

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "foo.example.com",

"User-Agent": "curl/7.68.0"

}

}

curl -H 'host:bar.example.com' http://$GATEWAY_IP:8000/headers

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "bar.example.com",

"User-Agent": "curl/7.68.0"

}

}

This time, the server responds successfully. Each response contains the hostname we requested.
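The behaviour seen in these three requests can be summarised as a lookup from the Host header to a route. The following is a simplified model for illustration, not how the gateway is implemented: `route_for_host` is a hypothetical function, the two route names are the ones listed by `kubectl get httproute` above, and ignoring the port and letter case is an assumption of this sketch.

```shell
# Returns the name of the demo HTTPRoute that would accept a Host header.
# The port, if any, is dropped and the comparison is case-insensitive.
route_for_host() {
  host="$(printf '%s' "${1%%:*}" | tr '[:upper:]' '[:lower:]')"
  case "$host" in
    foo.example.com) echo "http-route-foo" ;;
    bar.example.com) echo "http-route-bar" ;;
    *) echo "no matching route" ;;
  esac
}

route_for_host "foo.example.com"        # http-route-foo
route_for_host "BAR.example.com:8000"   # http-route-bar
route_for_host "10.0.2.4:8000"          # no matching route
```

The last call corresponds to the request sent without a hostname, which the gateway answered with 404.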

3 - HTTP URL Rewrite

This document describes FSM Gateway’s URL rewriting feature, allowing modification of request URLs for backend service flexibility and efficient URL normalization.

The URL rewriting feature provides FSM Gateway users with a way to modify the request URL before the traffic enters the target service. This not only provides greater flexibility to adapt to changes in backend services, but also ensures smooth migration of applications and normalization of URLs.

The HTTPRoute resource utilizes HTTPURLRewriteFilter to rewrite the path in request to another one before it gets forwarded to upstream.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

We will follow the sample in HTTP Routing.

The backend server has a path /get, which responds with more information than the path /headers.

curl -H 'host:foo.example.com' http://$GATEWAY_IP:8000/get

{

"args": {},

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "foo.example.com",

"User-Agent": "curl/7.68.0"

},

"origin": "10.42.0.87",

"url": "http://foo.example.com/get"

}

Replace URL Full Path

The example below replaces the /get path with the /headers path.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /get
    filters:
    - type: URLRewrite
      urlRewrite:
        path:
          type: ReplaceFullPath
          replaceFullPath: /headers
    backendRefs:
    - name: httpbin
      port: 8080
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: httpbin
      port: 8080
EOF

After updating the HTTP rule, requesting /get returns the same response as /headers.

curl -H 'host:foo.example.com' http://$GATEWAY_IP:8000/get

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "foo.example.com",

"User-Agent": "curl/7.68.0"

}

}

Replace URL Prefix Path

The backend server provides two more paths:

- /status/{statusCode} responds with the specified status code.

- /stream/{n} streams the response of /get n times.

curl -s -w "%{response_code}\n" -H 'host:foo.example.com' http://$GATEWAY_IP:8000/status/204

204

curl -s -H 'host:foo.example.com' http://$GATEWAY_IP:8000/stream/1

{"url": "http://foo.example.com/stream/1", "args": {}, "headers": {"Host": "foo.example.com", "User-Agent": "curl/7.68.0", "Accept": "*/*", "Connection": "keep-alive"}, "origin": "10.42.0.161", "id": 0}

To make /status behave like /stream, the rule needs to be updated again.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /status
    filters:
    - type: URLRewrite
      urlRewrite:
        path:
          type: ReplacePrefixMatch
          replacePrefixMatch: /stream
    backendRefs:
    - name: httpbin
      port: 8080
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: httpbin
      port: 8080
EOF

If we trigger the request to /status/204 path again, we will stream the request data 204 times.

curl -s -H 'host:foo.example.com' http://$GATEWAY_IP:8000/status/204

{"url": "http://foo.example.com/stream/204", "args": {}, "headers": {"Host": "foo.example.com", "User-Agent": "curl/7.68.0", "Accept": "*/*", "Connection": "keep-alive"}, "origin": "10.42.0.161", "id": 99}

...
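The difference between the two path rewrite types used so far can be sketched as a small string transformation. This is a simplified model rather than the gateway's implementation: `rewrite_path` is a hypothetical function, and it does not cover edge cases such as trailing slashes in the matched prefix.

```shell
# rewrite_path TYPE MATCHED_PREFIX REPLACEMENT REQUEST_PATH
# Prints the path that would be forwarded upstream under this model.
rewrite_path() {
  rewrite_type="$1"
  matched_prefix="$2"
  replacement="$3"
  request_path="$4"
  case "$rewrite_type" in
    ReplaceFullPath)
      # The whole path is replaced, whatever followed the matched prefix.
      printf '%s\n' "$replacement"
      ;;
    ReplacePrefixMatch)
      # Only the matched prefix is swapped; the rest of the path is kept.
      remainder=${request_path#"$matched_prefix"}
      printf '%s%s\n' "$replacement" "$remainder"
      ;;
    *)
      # Unknown type: leave the path untouched.
      printf '%s\n' "$request_path"
      ;;
  esac
}

rewrite_path ReplaceFullPath /get /headers /get               # /headers
rewrite_path ReplacePrefixMatch /status /stream /status/204   # /stream/204
```

The second call mirrors the request above: /status/204 reaches the backend as /stream/204, which is why the response reports that URL.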

Replace Host Name

Let’s follow the example rule below. It will replace host name from foo.example.com to baz.example.com for all traffic requesting /get.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /get
    filters:
    - type: URLRewrite
      urlRewrite:
        hostname: baz.example.com
    backendRefs:
    - name: httpbin
      port: 8080
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: httpbin
      port: 8080
EOF

Apply the rule and send a request. The client requests the URL http://foo.example.com/get, but the Host is replaced.

curl -H 'host:foo.example.com' http://$GATEWAY_IP:8000/get

{

"args": {},

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "baz.example.com",

"User-Agent": "curl/7.68.0"

},

"origin": "10.42.0.87",

"url": "http://baz.example.com/get"

}

4 - HTTP Redirect

This document discusses FSM Gateway’s request redirection, covering host name, prefix path, and full path redirects, with examples of each method.

Request redirection is a common network application function that allows the server to tell the client: “The resource you requested has been moved to another location, please go to the new location to obtain it.”

The HTTPRoute resource utilizes HTTPRequestRedirectFilter to redirect client to the specified new location.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

We will follow the sample in HTTP Routing.

In our backend server, there are two paths, /headers and /get. The former responds with all request headers as the body, and the latter responds with more information about the client than /headers.

To make testing easier, add records to the local hosts file. Writing to /etc/hosts requires root privileges, so the line is appended with sudo.

echo "$GATEWAY_IP foo.example.com bar.example.com" | sudo tee -a /etc/hosts

curl foo.example.com/headers

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "foo.example.com",

"User-Agent": "curl/7.68.0"

}

}

curl bar.example.com/get

{

"args": {},

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "bar.example.com",

"User-Agent": "curl/7.68.0"

},

"origin": "10.42.0.87",

"url": "http://bar.example.com/get"

}

Host Name Redirect

HTTP 3XX status codes are used to redirect the client to another address. We can redirect all requests for foo.example.com to bar.example.com by responding with a 301 status and the new hostname.

apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    filters:
    - type: RequestRedirect
      requestRedirect:
        hostname: bar.example.com
        port: 8000
        statusCode: 301
    backendRefs:
    - name: httpbin
      port: 8080

Now, it will return the 301 code and bar.example.com:8000 when requesting foo.example.com.

curl -i http://foo.example.com:8000/get

HTTP/1.1 301 Moved Permanently

Location: http://bar.example.com:8000/get

content-length: 0

connection: keep-alive
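To assert on the redirect target in a script, the Location header can be pulled out of the response headers. The sketch below is an illustration under stated assumptions: `location_header` is a hypothetical helper, and the sample input is the response shown above rather than live output.

```shell
# Prints the value of the Location header from response headers on stdin.
# The header name is matched case-insensitively and carriage returns,
# which HTTP responses carry at line ends, are removed first.
location_header() {
  tr -d '\r' | awk 'tolower($1) == "location:" { print $2; exit }'
}

# Sample input copied from the response above; against a live gateway you
# could pipe `curl -si http://foo.example.com:8000/get` into the function.
location_header <<'RESPONSE'
HTTP/1.1 301 Moved Permanently
Location: http://bar.example.com:8000/get
content-length: 0
connection: keep-alive
RESPONSE
```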

By default, curl does not follow redirects unless you enable that with the -L option.

curl -L http://foo.example.com:8000/get

{

"args": {},

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "bar.example.com:8000",

"User-Agent": "curl/7.68.0"

},

"origin": "10.42.0.161",

"url": "http://bar.example.com:8000/get"

}

Prefix Path Redirect

With path redirection, we can implement what we did with URL Rewriting: redirect the request to /status/{n} to /stream/{n}.

apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /status
    filters:
    - type: RequestRedirect
      requestRedirect:
        path:
          type: ReplacePrefixMatch
          replacePrefixMatch: /stream
        statusCode: 301
    backendRefs:
    - name: httpbin
      port: 8080
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: httpbin
      port: 8080

After updating the rule, we get:

curl -i http://foo.example.com:8000/status/204

HTTP/1.1 301 Moved Permanently

Location: http://foo.example.com:8000/stream/204

content-length: 0

connection: keep-alive

Full Path Redirect

We can also change the full path when redirecting, for example redirecting all /status/xxx requests to /status/200.

apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /status
    filters:
    - type: RequestRedirect
      requestRedirect:
        path:
          type: ReplaceFullPath
          replaceFullPath: /status/200
        statusCode: 301
    backendRefs:
    - name: httpbin
      port: 8080
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: httpbin
      port: 8080

Now, requests to /status/xxx are redirected to /status/200.

curl -i http://foo.example.com:8000/status/204

HTTP/1.1 301 Moved Permanently

Location: http://foo.example.com:8000/status/200

content-length: 0

connection: keep-alive

5 - HTTP Request Header Manipulate

This document explains FSM Gateway’s feature to modify HTTP request headers with filter, including adding, setting, and removing headers, with examples.

The HTTP header manipulation feature allows you to fine-tune incoming and outgoing request and response headers.

In Gateway API, the HTTPRoute resource utilizes two HTTPHeaderFilter filters, one for request and one for response header manipulation.

Both filters support the add, set and remove operations, which can also be combined.

This document will introduce the HTTP request header manipulation function of FSM Gateway. The introduction of HTTP response header manipulation is located in doc HTTP Response Header Manipulate.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

We will follow the sample in HTTP Routing.

The backend service has a path /headers, which responds with all request headers.

curl -H 'host:foo.example.com' http://$GATEWAY_IP:8000/headers

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "10.42.0.15:80",

"User-Agent": "curl/8.1.2"

}

}

Let’s use the add operation to add a new header to the request with an HTTPHeaderFilter filter.

Modify the HTTPRoute http-route-foo and add a RequestHeaderModifier filter.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: httpbin
      port: 8080
    filters:
    - type: RequestHeaderModifier
      requestHeaderModifier:
        add:
        - name: "header-2-add"
          value: "foo"
EOF

Now request the path /headers again and you will get the new header injected by gateway.

Although HTTP header names are case-insensitive, the name appears in capitalized form in the output.

curl -H 'host:foo.example.com' http://$GATEWAY_IP:8000/headers

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Header-2-Add": "foo",

"Host": "10.42.0.15:80",

"User-Agent": "curl/8.1.2"

}

}

The set operation updates the value of the specified header. If the header does not exist, it behaves like the add operation.

Let’s update the HTTPRoute resource again and set two headers with new value. One does not exist and another does.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: httpbin
      port: 8080
    filters:
    - type: RequestHeaderModifier
      requestHeaderModifier:
        set:
        - name: "header-2-set"
          value: "foo"
        - name: "user-agent"
          value: "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15"
EOF

In the response, we can get the two headers updated.

curl -H 'host:foo.example.com' http://$GATEWAY_IP:8000/headers

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Header-2-Set": "foo",

"Host": "10.42.0.15:80",

"User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15"

}

}

The last operation is remove, which removes a header sent by the client.

Let’s update the HTTPRoute resource to remove user-agent header directly to hide client type from backend service.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: httpbin
      port: 8080
    filters:
    - type: RequestHeaderModifier
      requestHeaderModifier:
        remove:
        - "user-agent"
EOF

With the resource updated, the user agent is no longer visible to the backend service.

curl -H 'host:foo.example.com' http://$GATEWAY_IP:8000/headers

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "10.42.0.15:80"

}

}
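The three operations can be summarised with a small model that treats headers as "Name: value" lines. This is a sketch for illustration only: the function names are hypothetical, the value `demo-agent` is a placeholder, and the order in which a real gateway applies combined operations is not modelled here.

```shell
# Headers are modelled as "Name: value" lines on stdin; names compare
# case-insensitively, as HTTP header names do.

# remove: drop every line whose name matches.
header_remove() {
  awk -v name="$1" -F': ' 'tolower($1) != tolower(name)'
}

# set: replace the header if it is present, otherwise create it.
header_set() {
  header_remove "$1"
  printf '%s: %s\n' "$1" "$2"
}

# add: keep any existing values and append one more.
header_add() {
  cat
  printf '%s: %s\n' "$1" "$2"
}

# Chain the operations on two headers similar to the ones curl sends.
printf 'Accept: */*\nUser-Agent: curl/8.1.2\n' |
  header_add header-2-add foo |
  header_set user-agent demo-agent |
  header_remove accept
```

With this input the pipeline leaves two lines, `header-2-add: foo` and `user-agent: demo-agent`: the added header survives, User-Agent is overwritten rather than duplicated, and Accept is dropped.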

6 - HTTP Response Header Manipulate

This document covers the HTTP response header manipulation in FSM Gateway, explaining the use of filter for adding, setting, and removing headers, with practical examples and Kubernetes prerequisites.

The HTTP header manipulation feature allows you to fine-tune incoming and outgoing request and response headers.

In Gateway API, the HTTPRoute resource utilizes two HTTPHeaderFilter filters, one for request and one for response header manipulation.

Both filters support the add, set and remove operations, which can also be combined.

This document will introduce the HTTP response header manipulation function of FSM Gateway. The introduction of HTTP request header manipulation is located in doc HTTP Request Header Manipulate.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

We will follow the sample in HTTP Routing.

The backend service responds with the generated headers shown below.

curl -I -H 'host:foo.example.com' http://$GATEWAY_IP:8000/headers

HTTP/1.1 200 OK

server: gunicorn/19.9.0

date: Tue, 21 Nov 2023 08:54:43 GMT

content-type: application/json

content-length: 106

access-control-allow-origin: *

access-control-allow-credentials: true

connection: keep-alive

Let’s use the add operation to add a new header to the response with an HTTPHeaderFilter filter.

Modify the HTTPRoute http-route-foo and add a ResponseHeaderModifier filter.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: httpbin
      port: 8080
    filters:
    - type: ResponseHeaderModifier
      responseHeaderModifier:
        add:
        - name: "header-2-add"
          value: "foo"
EOF

Now request the path /headers again and you will get the new header in response injected by gateway.

curl -I -H 'host:foo.example.com' http://$GATEWAY_IP:8000/headers

HTTP/1.1 200 OK

server: gunicorn/19.9.0

date: Tue, 21 Nov 2023 08:56:58 GMT

content-type: application/json

content-length: 139

access-control-allow-origin: *

access-control-allow-credentials: true

header-2-add: foo

connection: keep-alive

The set operation updates the value of the specified header. If the header does not exist, it behaves like the add operation.

Let’s update the HTTPRoute resource again and set two headers with new value. One does not exist and another does.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: httpbin
      port: 8080
    filters:
    - type: ResponseHeaderModifier
      responseHeaderModifier:
        set:
        - name: "header-2-set"
          value: "foo"
        - name: "server"
          value: "fsm/gateway"
EOF

In the response, we can get the two headers updated.

curl -I -H 'host:foo.example.com' http://$GATEWAY_IP:8000/headers

HTTP/1.1 200 OK

server: fsm/gateway

date: Tue, 21 Nov 2023 08:58:56 GMT

content-type: application/json

content-length: 139

access-control-allow-origin: *

access-control-allow-credentials: true

header-2-set: foo

connection: keep-alive

The last operation is remove, which removes a header from the response before it reaches the client.

Let’s update the HTTPRoute resource to remove server header directly to hide backend implementation from client.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-route-foo
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  hostnames:
  - foo.example.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: httpbin
      port: 8080
    filters:
    - type: ResponseHeaderModifier
      responseHeaderModifier:
        remove:
        - "server"
EOF

With the resource updated, the backend server implementation is no longer visible to the client.

curl -I -H 'host:foo.example.com' http://$GATEWAY_IP:8000/headers

HTTP/1.1 200 OK

date: Tue, 21 Nov 2023 09:00:32 GMT

content-type: application/json

content-length: 139

access-control-allow-origin: *

access-control-allow-credentials: true

connection: keep-alive

7 - TCP Routing

This document describes configuring TCP load balancing in FSM Gateway, focusing on traffic distribution based on network information.

This document will describe how to configure FSM Gateway to load balance TCP traffic.

During the L4 load balancing process, FSM Gateway determines which backend server to distribute traffic to based mainly on network layer and transport layer information, such as IP address and port number. This approach allows the FSM Gateway to make decisions quickly and forward traffic to the appropriate server, thereby improving overall network performance.

If you want to load balance HTTP traffic, please refer to the document HTTP Routing.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

Deploy sample

First, let’s install the example in namespace httpbin with commands below.

kubectl create namespace httpbin

kubectl apply -n httpbin -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/gateway/tcp-routing.yaml

Besides the sample app httpbin, the command above creates Gateway and TCPRoute resources.

The Gateway defines two listeners, on ports 8000 and 8001.

listeners:
- protocol: TCP
  port: 8000
  name: foo
  allowedRoutes:
    namespaces:
      from: Same
- protocol: TCP
  port: 8001
  name: bar
  allowedRoutes:
    namespaces:
      from: Same

The TCPRoute mapping to backend service httpbin is bound to the two ports defined above.

parentRefs:
- name: simple-fsm-gateway
  port: 8000
- name: simple-fsm-gateway
  port: 8001
rules:
- backendRefs:
  - name: httpbin
    port: 8080

This means we should reach backend service via either of two ports.

Testing

Let’s record the IP address of Gateway first.

export GATEWAY_IP=$(kubectl get svc -n httpbin -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

Send a request to port 8000 of the gateway, and it forwards the traffic to the backend service.

curl http://$GATEWAY_IP:8000/headers

{

"headers": {

"Accept": "*/*",

"Host": "20.24.88.85:8000",

"User-Agent": "curl/8.1.2"

}

}

Requests to gateway port 8001 work too.

curl http://$GATEWAY_IP:8001/headers

{

"headers": {

"Accept": "*/*",

"Host": "20.24.88.85:8001",

"User-Agent": "curl/8.1.2"

}

}

The path /headers responds with all request headers received. The Host header shows which entry point the request came through.

8 - TLS Termination

This document outlines setting up TLS termination in FSM Gateway.

TLS offloading is the process of terminating TLS connections at a load balancer or gateway, decrypting the traffic and passing it to the backend server, thereby relieving the backend server of the encryption and decryption burden.

This doc will show you how to use TLS termination for a service.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

export GATEWAY_IP=$(kubectl get svc -n httpbin -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

Issue TLS certificate

Configuring TLS requires a certificate, so let’s issue one first.

openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 \
    -keyout example.com.key -out example.com.crt \
    -subj "/CN=example.com"

After running the command above, you will have two files, example.com.crt and example.com.key, which we can use to create a secret.

kubectl create namespace httpbin

kubectl create secret tls simple-gateway-cert --key=example.com.key --cert=example.com.crt -n httpbin

Deploy sample app

kubectl apply -n httpbin -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/gateway/tls-termination.yaml

Test

curl --cacert example.com.crt https://example.com/headers --connect-to example.com:443:$GATEWAY_IP:8000

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "example.com",

"User-Agent": "curl/7.68.0"

}

}

9 - TLS Passthrough

This document provides a guide for setting up TLS Passthrough in FSM Gateway, allowing encrypted traffic to be routed directly to backend servers. It includes prerequisites, steps for creating a Gateway and TCP Route for the feature, and demonstrates testing the setup.

TLS passthrough means that the gateway does not decrypt TLS traffic, but directly transmits the encrypted data to the back-end server, which decrypts and processes it.

This doc explains how to use the TLS Passthrough feature.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

We will utilize https://httpbin.org for TLS passthrough testing, functioning similarly to the sample app deployed in other documentation sections.

Create Gateway

First of all, we need to create a gateway to accept incoming request. Different from TLS Termination, the mode is set to Passthrough for the listener.

Let’s create it in namespace httpbin which accepts route resources in same namespace.

kubectl create ns httpbin

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: simple-fsm-gateway
spec:
  gatewayClassName: fsm-gateway-cls
  listeners:
  - protocol: TLS
    port: 8000
    name: foo
    tls:
      mode: Passthrough
    allowedRoutes:
      namespaces:
        from: Same
EOF

Let’s record the IP address of gateway.

export GATEWAY_IP=$(kubectl get svc -n httpbin -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

Create TLS Route

To route encrypted traffic to a backend service without decryption, the use of TLSRoute is necessary in this context.

In the rules.backendRefs configuration, we specify an external service using its host and port. For example, for https://httpbin.org, these would be set as name: httpbin.org and port: 443.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1alpha2
kind: TLSRoute
metadata:
  name: tcp-route
spec:
  parentRefs:
  - name: simple-fsm-gateway
    port: 8000
  rules:
  - backendRefs:
    - name: httpbin.org
      port: 443
EOF

Test

We issue requests to the URL https://httpbin.org, but in reality, these are routed through the gateway.

curl https://httpbin.org/headers --connect-to httpbin.org:443:$GATEWAY_IP:8000

{

"headers": {

"Accept": "*/*",

"Host": "httpbin.org",

"User-Agent": "curl/8.1.2",

"X-Amzn-Trace-Id": "Root=1-655dd2be-583e963f5022e1004257d331"

}

}

10 - gRPC Routing

This document describes setting up gRPC routing in FSM Gateway with GRPCRoute, focusing on directing traffic based on service and method.

The GRPCRoute is used to route gRPC request to backend service. It can match requests by hostname, gRPC service, gRPC method, or HTTP/2 header.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

Deploy sample

kubectl create namespace grpcbin

kubectl apply -n grpcbin -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/gateway/gprc-routing.yaml

For gRPC, the listener configuration is similar to that of HTTP routing.

gRPC Route

We configure the match rule using service: hello.HelloService and method: SayHello to direct traffic to the target service.

rules:
- matches:
  - method:
      service: hello.HelloService
      method: SayHello
  backendRefs:
  - name: grpcbin
    port: 9000
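A gRPC call travels over HTTP/2 with the fully qualified service name and the method name encoded in the request path, which is what makes matching on service and method possible. The sketch below only illustrates that mapping; `grpc_path` is a hypothetical helper, not part of FSM or grpcurl.

```shell
# Builds the HTTP/2 request path that gRPC uses for a service and method:
# a slash, the fully qualified service name, a slash, the method name.
grpc_path() {
  printf '/%s/%s\n' "$1" "$2"
}

grpc_path hello.HelloService SayHello   # /hello.HelloService/SayHello
```

The same service/method pair, without the leading slash, is the last argument passed to grpcurl when calling the service.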

Let’s test our configuration now.

Test

To test the gRPC service, we will use the tool grpcurl.

Let’s record the IP address of gateway first.

export GATEWAY_IP=$(kubectl get svc -n grpcbin -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

Issue a request using the grpcurl command, specifying the service name and method. Doing so will yield the correct response.

grpcurl -plaintext -d '{"greeting":"Flomesh"}' $GATEWAY_IP:8000 hello.HelloService/SayHello

{

"reply": "hello Flomesh"

}

11 - UDP Routing

This document outlines setting up a UDPRoute in Kubernetes to route UDP traffic through an FSM Gateway, using Fortio server as a sample application.

The UDPRoute provides a method to route UDP traffic. When combined with a gateway listener, it can be used to forward traffic on a port specified by the listener to a set of backends defined in the UDPRoute.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

Environment Setup

Deploying Sample Application

Use fortio server as a sample application, which provides a UDP service listening on port 8078 and echoes back the content sent by the client.

kubectl create namespace server

kubectl apply -n server -f - <<EOF
apiVersion: v1
kind: Service
metadata:
  name: fortio
  labels:
    app: fortio
    service: fortio
spec:
  ports:
  - port: 8078
    name: udp-8078
  selector:
    app: fortio
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fortio
spec:
  replicas: 1
  selector:
    matchLabels:
      app: fortio
  template:
    metadata:
      labels:
        app: fortio
    spec:
      containers:
      - name: fortio
        image: fortio/fortio:latest_release
        imagePullPolicy: Always
        ports:
        - containerPort: 8078
          name: http
EOF

Creating UDP Gateway

Next, create a Gateway for the UDP service, setting the protocol of the listening port to UDP.

kubectl apply -n server -f - <<EOF

apiVersion: gateway.networking.k8s.io/v1

kind: Gateway

metadata:

namespace: server

name: simple-fsm-gateway

spec:

gatewayClassName: fsm-gateway-cls

listeners:

- protocol: UDP

port: 8000

name: udp

EOF

Creating UDP Route

Similar to the HTTP protocol, to access backend services through the gateway, a UDPRoute needs to be created.

kubectl -n server apply -f - <<EOF

apiVersion: gateway.networking.k8s.io/v1alpha2

kind: UDPRoute

metadata:

name: udp-route-sample

spec:

parentRefs:

- name: simple-fsm-gateway

namespace: server

port: 8000

rules:

- backendRefs:

- name: fortio

port: 8078

EOF

Test accessing the UDP service. After sending the word ‘fsm’, the same word will be received back.

export GATEWAY_IP=$(kubectl get svc -n server -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

echo 'fsm' | nc -4u -w1 $GATEWAY_IP 8000

fsm
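The same check can be scripted instead of using nc. The sketch below sends one datagram and waits for the echoed reply; the function name udp_roundtrip is hypothetical, and the commented usage assumes the gateway address recorded in GATEWAY_IP above is reachable from where the script runs.

```python
import socket


def udp_roundtrip(host: str, port: int, payload: bytes, timeout: float = 1.0) -> bytes:
    """Send one datagram and return the first datagram received in reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)  # similar to nc -w1: give up after the timeout
        sock.sendto(payload, (host, port))
        data, _ = sock.recvfrom(4096)
        return data


# Example usage against the gateway (replace the address with your GATEWAY_IP):
# print(udp_roundtrip("203.0.113.10", 8000, b"fsm\n"))
```

If no reply arrives within the timeout, the call raises a timeout error (socket.timeout, an alias of TimeoutError since Python 3.10), which is a convenient signal that the route is not working.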

12 - Fault Injection

This document introduces how to inject specific faults at the gateway level to test the behavior and stability of the system.

The fault injection feature is a powerful testing mechanism used to enhance the robustness and reliability of microservice architectures. This capability tests a system’s fault tolerance and recovery mechanisms by simulating network-level failures such as delays and error responses. Fault injection mainly includes two types: delay injection and error injection.

Delay injection simulates network delays or slow service processing by artificially introducing delays during the gateway’s processing of requests. This helps test whether the timeout handling and retry strategies of downstream services are effective, ensuring that the entire system can maintain stable operation when actual delays occur.

Error injection simulates a backend service failure by having the gateway return an error response (such as an HTTP 5xx error). This method verifies how the service consumer handles failures, for example whether its error handling logic and fault tolerance mechanisms, such as the circuit breaker pattern, are correctly executed.

FSM Gateway supports both types of fault injection at two levels of granularity: domain and route. Next, we demonstrate the fault injection of FSM Gateway.
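To make the two fault types concrete, here is a minimal sketch of how a percentage-based abort or delay decision could be taken per request. It is an illustration under stated assumptions, not FSM Gateway’s implementation: the function name decide_fault is hypothetical, and the dictionary keys simply mirror the abort, delay, percent, statusCode and range fields used in the policies later in this section.

```python
import random


def decide_fault(config: dict, rng: random.Random) -> tuple:
    """Return ("abort", status), ("delay", milliseconds) or ("pass", None)."""
    abort = config.get("abort")
    # percent is the share of requests (0-100) that receive the fault.
    if abort and rng.random() < abort["percent"] / 100:
        return ("abort", abort["statusCode"])
    delay = config.get("delay")
    if delay and rng.random() < delay["percent"] / 100:
        low, high = delay["range"]["min"], delay["range"]["max"]
        return ("delay", rng.randint(low, high))  # random delay within the range
    return ("pass", None)


rng = random.Random()
print(decide_fault({"abort": {"percent": 100, "statusCode": 404}}, rng))
```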

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

Deploy Sample Application

Next, deploy the sample application using the commonly used httpbin service, and create a Gateway and HTTPRoute (https://gateway-api.sigs.k8s.io/api-types/httproute/).

kubectl create namespace httpbin

kubectl apply -n httpbin -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/gateway/http-routing.yaml

Confirm that the Gateway and HTTPRoute resources were created. You will see two HTTP routes with different domains.

kubectl get gateway,httproute -n httpbin

NAME CLASS ADDRESS PROGRAMMED AGE

gateway.gateway.networking.k8s.io/simple-fsm-gateway fsm-gateway-cls Unknown 3s

NAME HOSTNAMES AGE

httproute.gateway.networking.k8s.io/http-route-foo ["foo.example.com"] 2s

httproute.gateway.networking.k8s.io/http-route-bar ["bar.example.com"] 2s

Check that you can reach the service through the gateway.

export GATEWAY_IP=$(kubectl get svc -n httpbin -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

curl http://$GATEWAY_IP:8000/headers -H 'host:foo.example.com'

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "10.42.0.15:80",

"User-Agent": "curl/7.81.0"

}

}

Fault Injection Testing

Route-Level Fault Injection

We add a route under the HTTP route foo.example.com with a path prefix /headers to facilitate setting fault injection.

kubectl apply -n httpbin -f - <<EOF

apiVersion: gateway.networking.k8s.io/v1

kind: HTTPRoute

metadata:

name: http-route-foo

spec:

parentRefs:

- name: simple-fsm-gateway

port: 8000

hostnames:

- foo.example.com

rules:

- matches:

- path:

type: PathPrefix

value: /headers

backendRefs:

- name: httpbin

port: 8080

- matches:

- path:

type: PathPrefix

value: /

backendRefs:

- name: httpbin

port: 8080

EOF

When we request the /headers and /get paths, we can get the correct response.

Next, we inject a 404 fault with a 100% probability on the /headers route. For detailed configuration, please refer to FaultInjectionPolicy API Reference.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.flomesh.io/v1alpha1
kind: FaultInjectionPolicy
metadata:
  name: fault-injection
spec:
  targetRef:
    group: gateway.networking.k8s.io
    kind: HTTPRoute
    name: http-route-foo
    namespace: httpbin
  http:
    - match:
        path:
          type: PathPrefix
          value: /headers
      config:
        abort:
          percent: 100
          statusCode: 404
EOF

Now, requesting /headers results in a 404 response.

curl -I http://$GATEWAY_IP:8000/headers -H 'host:foo.example.com'

HTTP/1.1 404 Not Found

content-length: 0

connection: keep-alive

Requesting /get will not be affected.

curl -I http://$GATEWAY_IP:8000/get -H 'host:foo.example.com'

HTTP/1.1 200 OK

server: gunicorn/19.9.0

date: Thu, 14 Dec 2023 14:11:36 GMT

content-type: application/json

content-length: 220

access-control-allow-origin: *

access-control-allow-credentials: true

connection: keep-alive

Domain-Level Fault Injection

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.flomesh.io/v1alpha1
kind: FaultInjectionPolicy
metadata:
  name: fault-injection
spec:
  targetRef:
    group: gateway.networking.k8s.io
    kind: HTTPRoute
    name: http-route-foo
    namespace: httpbin
  hostnames:
    - hostname: foo.example.com
      config:
        abort:
          percent: 100
          statusCode: 404
EOF

Requesting foo.example.com returns a 404 response.

curl -I http://$GATEWAY_IP:8000/headers -H 'host:foo.example.com'

HTTP/1.1 404 Not Found

content-length: 0

connection: keep-alive

However, requesting bar.example.com, which is not listed in the fault injection, responds normally.

curl -I http://$GATEWAY_IP:8000/headers -H 'host:bar.example.com'

HTTP/1.1 200 OK

server: gunicorn/19.9.0

date: Thu, 14 Dec 2023 13:55:07 GMT

content-type: application/json

content-length: 140

access-control-allow-origin: *

access-control-allow-credentials: true

connection: keep-alive

Modify the fault injection policy to change the error fault to a delay fault: introducing a random delay of 500 to 1000 ms.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.flomesh.io/v1alpha1
kind: FaultInjectionPolicy
metadata:
  name: fault-injection
spec:
  targetRef:
    group: gateway.networking.k8s.io
    kind: HTTPRoute
    name: http-route-foo
    namespace: httpbin
  hostnames:
    - hostname: foo.example.com
      config:
        delay:
          percent: 100
          range:
            min: 500
            max: 1000
          unit: ms
EOF

Check the response time of the requests to see the introduced random delay.

time curl -s http://$GATEWAY_IP:8000/headers -H 'host:foo.example.com' > /dev/null

real 0m0.904s

user 0m0.000s

sys 0m0.010s

time curl -s http://$GATEWAY_IP:8000/headers -H 'host:foo.example.com' > /dev/null

real 0m0.572s

user 0m0.005s

sys 0m0.005s

13 - Access Control

This doc will demonstrate how to control access to backend services with a blacklist and a whitelist.

Blacklist and whitelist functionality is an effective network security mechanism used to control and manage network traffic. This feature relies on a predefined list of rules to determine which entities (IP addresses or IP ranges) are allowed or denied passage through the gateway. The gateway uses blacklists and whitelists to filter incoming network traffic. This method provides simple and direct access control, easy to manage, and effectively prevents known security threats.

As the entry point for cluster traffic, the FSM Gateway manages all traffic entering the cluster. By setting blacklist and whitelist access control policies, it can filter traffic entering the cluster.

FSM Gateway provides two granularities of access control, both targeting L7 HTTP protocol:

- Domain-level access control: A network traffic management strategy based on domain names. It involves implementing access rules for traffic that meets specific domain name conditions, such as allowing or blocking communication with certain domain names.

- Route-level access control: A management strategy based on routes (request headers, methods, paths, parameters), where access control policies are applied to specific routes to manage traffic accessing those routes.

Next, we will demonstrate the use of blacklist and whitelist access control.

Prerequisites

- Kubernetes cluster version v1.21.0 or higher.

- kubectl CLI

- FSM Gateway installed via guide doc.

Demonstration

Deploying a Sample Application

Next, deploy a sample application using the commonly used httpbin service, and create a Gateway and HTTPRoute.

kubectl create namespace httpbin

kubectl apply -n httpbin -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/gateway/http-routing.yaml

Check the gateway and HTTP routes; you should see two routes with different domain names created.

kubectl get gateway,httproute -n httpbin

NAME CLASS ADDRESS PROGRAMMED AGE

gateway.gateway.networking.k8s.io/simple-fsm-gateway fsm-gateway-cls Unknown 3s

NAME HOSTNAMES AGE

httproute.gateway.networking.k8s.io/http-route-foo ["foo.example.com"] 2s

httproute.gateway.networking.k8s.io/http-route-bar ["bar.example.com"] 2s

Verify if the HTTP routing is effective by accessing the application.

export GATEWAY_IP=$(kubectl get svc -n httpbin -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

curl http://$GATEWAY_IP:8000/headers -H 'host:foo.example.com'

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "10.42.0.15:80",

"User-Agent": "curl/7.81.0"

}

}

Domain-Based Access Control

With domain-based access control, we can set one or more domain names in the policy and add a blacklist or whitelist for these domains.

For example, in the policy below:

- targetRef is a reference to the target resource for which the policy is applied, which is the HTTPRoute resource for HTTP requests.

- Through the hostname field, we add a blacklist or whitelist policy for foo.example.com among the two domains.

- With the prevalence of cloud services and distributed network architectures, the direct connection to the gateway is no longer the client but an intermediate proxy. In such cases, we usually use the HTTP header X-Forwarded-For to identify the client’s IP address. In FSM Gateway’s policy, the enableXFF field controls whether to obtain the client’s IP address from the X-Forwarded-For header.

- For denied communications, customize the response with statusCode and message.

For detailed configuration, please refer to AccessControlPolicy API Reference.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.flomesh.io/v1alpha1
kind: AccessControlPolicy
metadata:
  name: access-control-sample
spec:
  targetRef:
    group: gateway.networking.k8s.io
    kind: HTTPRoute
    name: http-route-foo
    namespace: httpbin
  hostnames:
    - hostname: foo.example.com
      config:
        blacklist:
          - 192.168.0.0/24
        whitelist:
          - 112.94.5.242
        enableXFF: true
        statusCode: 403
        message: "Forbidden"
EOF

After the policy takes effect, we send requests for testing, remembering to add X-Forwarded-For to specify the client IP.

curl -I http://$GATEWAY_IP:8000/headers -H 'host:foo.example.com' -H 'x-forwarded-for:112.94.5.242'

HTTP/1.1 200 OK

server: gunicorn/19.9.0

date: Fri, 29 Dec 2023 02:29:08 GMT

content-type: application/json

content-length: 139

access-control-allow-origin: *

access-control-allow-credentials: true

connection: keep-alive

curl -I http://$GATEWAY_IP:8000/headers -H 'host:foo.example.com' -H 'x-forwarded-for: 10.42.0.1'

HTTP/1.1 403 Forbidden

content-length: 9

connection: keep-alive

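From the results, when both a whitelist and a blacklist are present, the blacklist configuration is ignored: 10.42.0.1 is not in the blacklisted range 192.168.0.0/24, yet it is rejected because it is not on the whitelist.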
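The precedence observed above can be sketched in a few lines. This is a minimal illustration of the behavior seen in the test, not FSM Gateway’s code: the function names are hypothetical, header names are assumed to be lower-cased, and taking the first X-Forwarded-For entry as the client address is an assumption.

```python
import ipaddress


def client_ip(peer_ip: str, headers: dict, enable_xff: bool) -> str:
    """Pick the client address; with enable_xff, use the first X-Forwarded-For entry."""
    xff = headers.get("x-forwarded-for", "")
    if enable_xff and xff.strip():
        return xff.split(",")[0].strip()
    return peer_ip


def is_allowed(ip: str, whitelist: list, blacklist: list) -> bool:
    """Whitelist takes precedence: if one is configured, the blacklist is ignored."""
    addr = ipaddress.ip_address(ip)

    def listed(entries: list) -> bool:
        # Each entry may be a single address or a CIDR range.
        return any(addr in ipaddress.ip_network(e, strict=False) for e in entries)

    if whitelist:
        return listed(whitelist)
    return not listed(blacklist)


ip = client_ip("10.0.0.9", {"x-forwarded-for": "10.42.0.1"}, enable_xff=True)
print(is_allowed(ip, ["112.94.5.242"], ["192.168.0.0/24"]))
```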

Route-Based Access Control

Route-based access control allows us to set access control policies for specific routes (path, request headers, method, parameters) to restrict access to these particular routes.

Before setting up the access control policy, we add a route with the path prefix /headers under the HTTP route foo.example.com to facilitate the configuration of access control for it.

kubectl apply -n httpbin -f - <<EOF

apiVersion: gateway.networking.k8s.io/v1

kind: HTTPRoute

metadata:

name: http-route-foo

spec:

parentRefs:

- name: simple-fsm-gateway

port: 8000

hostnames:

- foo.example.com

rules:

- matches:

- path:

type: PathPrefix

value: /headers

backendRefs:

- name: httpbin

port: 8080

- matches:

- path:

type: PathPrefix

value: /

backendRefs:

- name: httpbin

port: 8080

EOF

In the following policy:

- The match is used to configure the routes to be matched, here we use path matching.

- Other configurations continue to use the settings from above.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.flomesh.io/v1alpha1
kind: AccessControlPolicy
metadata:
  name: access-control-sample
spec:
  targetRef:
    group: gateway.networking.k8s.io
    kind: HTTPRoute
    name: http-route-foo
    namespace: httpbin
  http:
    - match:
        path:
          type: PathPrefix
          value: /headers
      config:
        blacklist:
          - 192.168.0.0/24
        whitelist:
          - 112.94.5.242
        enableXFF: true
        statusCode: 403
        message: "Forbidden"
EOF

After updating the policy, we send requests to test. For the path /headers, the results are as before.

curl -I http://$GATEWAY_IP:8000/headers -H 'host:foo.example.com' -H 'x-forwarded-for:112.94.5.242'

HTTP/1.1 200 OK

server: gunicorn/19.9.0

date: Fri, 29 Dec 2023 02:39:02 GMT

content-type: application/json

content-length: 139

access-control-allow-origin: *

access-control-allow-credentials: true

connection: keep-alive

curl -I http://$GATEWAY_IP:8000/headers -H 'host:foo.example.com' -H 'x-forwarded-for: 10.42.0.1'

HTTP/1.1 403 Forbidden

content-length: 9

connection: keep-alive

However, if the path /get is accessed, there are no restrictions.

curl -I http://$GATEWAY_IP:8000/get -H 'host:foo.example.com' -H 'x-forwarded-for: 10.42.0.1'

HTTP/1.1 200 OK

server: gunicorn/19.9.0

date: Fri, 29 Dec 2023 02:40:18 GMT

content-type: application/json

content-length: 230

access-control-allow-origin: *

access-control-allow-credentials: true

connection: keep-alive

This demonstrates the effectiveness and specificity of route-based access control in managing access to different routes within a network infrastructure.

14 - Rate Limit

This document introduces the rate limiting feature, including rate limiting based on ports, domain names, and routes.

Rate limiting in gateways is a crucial network traffic management strategy for controlling the data transfer rate through the gateway, essential for ensuring network stability and efficiency.

FSM Gateway’s rate limiting can be implemented based on various criteria, including port, domain, and route.

- Port-based Rate Limiting: Controls the data transfer rate at the port, ensuring traffic does not exceed a set threshold. This is often used to prevent network congestion and server overload.

- Domain-based Rate Limiting: Sets request rate limits for specific domains. This strategy is typically used to control access frequency to certain services or applications to prevent overload and ensure service quality.

- Route-based Rate Limiting: Sets request rate limits for specific routes or URL paths. This approach allows for more granular traffic control within different parts of a single application.

Configuration

For detailed configuration, please refer to RateLimitPolicy API Reference.

- targetRef refers to the target resource for applying the policy, set here for port granularity, hence referencing the Gateway resource simple-fsm-gateway.

- bps: The default rate limit for the port, measured in bytes per second.

- config: L7 rate limiting configuration.

- ports

  - port specifies the port.

  - bps sets the bytes per second.

- hostnames

  - hostname: Domain name.

  - config: L7 rate limiting configuration.

- http

  - match:

    - headers: HTTP request matching.

    - method: HTTP method matching.

  - config: L7 rate limiting configuration.

L7 Rate Limiting Configuration:

- backlog: The backlog value refers to the number of requests the system allows to queue when the rate limit threshold is reached. This is an important field, especially when the system suddenly faces a large number of requests that may exceed the set rate limit threshold. The backlog value provides a buffer to handle requests exceeding the rate limit threshold but within the backlog limit. Once the backlog limit is reached, any new requests will be immediately rejected without waiting. This field is optional, defaulting to 10.

- requests: The request value specifies the number of allowed visits within the rate limit time window. This is the core parameter of the rate limiting strategy, determining how many requests can be accepted within a specific time window. The purpose of setting this value is to ensure that the backend system does not receive more requests than it can handle within the given time window. This field is mandatory, with a minimum value of 1.

- statTimeWindow: The rate limit time window (in seconds) defines the period for counting the number of requests. Rate limiting strategies are usually based on sliding or fixed windows. StatTimeWindow defines the size of this window. For example, if statTimeWindow is set to 60 seconds, and requests is 100, it means a maximum of 100 requests every 60 seconds. This field is mandatory.

- burst: The burst value represents the maximum number of requests allowed in a short time. This optional field is mainly used to handle short-term request spikes. The burst value is typically higher than the request value, allowing the number of accepted requests in a short time to exceed the average rate. This field is optional.

- responseStatusCode: The HTTP status code returned to the client when rate limiting occurs. This status code informs the client that the request was rejected due to reaching the rate limit threshold. Common status codes include 429 (Too Many Requests), but can be customized as needed. This field is mandatory.

- responseHeadersToAdd: HTTP headers to be added to the response when rate limiting occurs. This can be used to inform the client about more information regarding the rate limiting policy. For example, a RateLimit-Limit header can be added to inform the client of the rate limiting configuration. Additional useful information about the current rate limiting policy or how to contact the system administrator can also be provided. This field is optional.
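To illustrate how requests and statTimeWindow interact, here is a minimal fixed-window counter. It is a sketch under stated assumptions rather than FSM Gateway’s algorithm: the class name FixedWindowLimiter is hypothetical, a fixed (not sliding) window is assumed, and backlog and burst are deliberately left out to keep the example short.

```python
class FixedWindowLimiter:
    """Allow at most `requests` calls in each window of `stat_time_window` seconds."""

    def __init__(self, requests: int, stat_time_window: float):
        self.requests = requests
        self.window = stat_time_window
        self.window_start = None  # start time of the current window
        self.count = 0            # requests accepted in the current window

    def allow(self, now: float) -> bool:
        # Open a new window on first use or once the current one has elapsed.
        if self.window_start is None or now - self.window_start >= self.window:
            self.window_start = now
            self.count = 0
        if self.count < self.requests:
            self.count += 1
            return True
        return False  # the caller would answer with responseStatusCode, e.g. 429


limiter = FixedWindowLimiter(requests=100, stat_time_window=60)
# 1000 requests at 200 per second all fall into the first 60-second window.
accepted = sum(limiter.allow(i / 200) for i in range(1000))
print(accepted)
```

With a single counter like this one, only the first 100 requests in the window are accepted; a real gateway may keep separate counters per worker thread, so observed totals can differ.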

Prerequisites

- Kubernetes Cluster

- kubectl Tool

- FSM Gateway installed via guide doc.

Demonstration

Deploying a Sample Application

Next, deploy a sample application using the popular httpbin service, and create a Gateway and HTTP Route (HttpRoute).

kubectl create namespace httpbin

kubectl apply -n httpbin -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/gateway/http-routing.yaml

Check the gateway and HTTP route, noting the creation of routes for two different domains.

kubectl get gateway,httproute -n httpbin

NAME CLASS ADDRESS PROGRAMMED AGE

gateway.gateway.networking.k8s.io/simple-fsm-gateway fsm-gateway-cls Unknown 3s

NAME HOSTNAMES AGE

httproute.gateway.networking.k8s.io/http-route-foo ["foo.example.com"] 2s

httproute.gateway.networking.k8s.io/http-route-bar ["bar.example.com"] 2s

Access the application to verify the HTTP route is effective.

export GATEWAY_IP=$(kubectl get svc -n httpbin -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

curl http://$GATEWAY_IP:8000/headers -H 'host:foo.example.com'

{

"headers": {

"Accept": "*/*",

"Connection": "keep-alive",

"Host": "10.42.0.15:80",

"User-Agent": "curl/7.81.0"

}

}

Rate Limiting Test

Port-Based Rate Limiting

Create an 8k file.

dd if=/dev/zero of=payload bs=1K count=8

Test sending the file to the service, which only takes 1s.

time curl -s -X POST -T payload http://$GATEWAY_IP:8000/status/200 -H 'host:foo.example.com'

real 0m1.018s

user 0m0.001s

sys 0m0.014s

Then set the rate limiting policy:

- targetRef is the reference to the target resource of the policy, set here for port granularity, hence referencing the Gateway resource simple-fsm-gateway.

- ports

  - port specifies port 8000

  - bps sets the bytes per second to 2k

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.flomesh.io/v1alpha1
kind: RateLimitPolicy
metadata:
  name: ratelimit-sample
spec:
  targetRef:
    group: gateway.networking.k8s.io
    kind: Gateway
    name: simple-fsm-gateway
    namespace: httpbin
  ports:
    - port: 8000
      bps: 2048
EOF

After the policy takes effect, send the 8k file again. Now the rate limiting policy is in effect, and it takes 4 seconds.

time curl -s -X POST -T payload http://$GATEWAY_IP:8000/status/200 -H 'host:foo.example.com'

real 0m4.016s

user 0m0.007s

sys 0m0.005s
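The 4-second result follows directly from the numbers used in this test: an 8 KiB payload sent through a port limited to 2048 bytes per second. The short calculation below spells this out; the function name min_transfer_seconds is hypothetical.

```python
def min_transfer_seconds(size_bytes: int, bps: int) -> float:
    """Lower bound on transfer time when throughput is capped at bps bytes per second."""
    return size_bytes / bps


payload_size = 8 * 1024  # dd bs=1K count=8 produces 8192 bytes
print(min_transfer_seconds(payload_size, 2048))  # 4.0
```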

Domain-Based Rate Limiting

Before testing domain-based rate limiting, delete the policy created above.

kubectl delete ratelimitpolicies -n httpbin ratelimit-sample

Then use fortio to generate load: 1 concurrent connection sending 1000 requests at 200 qps.

fortio load -quiet -c 1 -n 1000 -qps 200 -H 'host:foo.example.com' http://$GATEWAY_IP:8000/status/200

Code 200 : 1000 (100.0 %)

Next, set the rate limiting policy:

- Limiting domain foo.example.com

- Backlog of pending requests set to 1

- Max requests in a 60s window set to 200

- Return 429 for rate-limited requests with response header RateLimit-Limit: 200

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.flomesh.io/v1alpha1
kind: RateLimitPolicy
metadata:
  name: ratelimit-sample
spec:
  targetRef:
    group: gateway.networking.k8s.io
    kind: HTTPRoute
    name: http-route-foo
    namespace: httpbin
  hostnames:
    - hostname: foo.example.com
      config:
        backlog: 1
        requests: 100
        statTimeWindow: 60
        responseStatusCode: 429
        responseHeadersToAdd:
          - name: RateLimit-Limit
            value: "100"
EOF

After the policy is effective, generate the same load for testing. You can see that 200 responses are successful, and 798 are rate-limited.

-1 is the error code set by fortio during read timeout. This is because fortio’s default timeout is 3s, and the rate limiting policy sets the backlog to 1. FSM Gateway defaults to 2 threads, so there are 2 timed-out requests.

fortio load -quiet -c 1 -n 1000 -qps 200 -H 'host:foo.example.com' http://$GATEWAY_IP:8000/status/200

Code -1 : 2 (0.2 %)

Code 200 : 200 (19.9 %)

Code 429 : 798 (79.9 %)

However, accessing bar.example.com will not be rate-limited.

fortio load -quiet -c 1 -n 1000 -qps 200 -H 'host:bar.example.com' http://$GATEWAY_IP:8000/status/200

Code 200 : 1000 (100.0 %)

Route-Based Rate Limiting

Similarly, delete the previously created policy before starting the next test.

kubectl delete ratelimitpolicies -n httpbin ratelimit-sample

Before configuring the rate limiting policy, we add a route with the path prefix /status/200 under the HTTP route foo.example.com, so that the policy can target it.

kubectl apply -n httpbin -f - <<EOF

apiVersion: gateway.networking.k8s.io/v1

kind: HTTPRoute

metadata:

name: http-route-foo

spec:

parentRefs:

- name: simple-fsm-gateway

port: 8000

hostnames:

- foo.example.com

rules:

- matches:

- path:

type: PathPrefix

value: /status/200

backendRefs:

- name: httpbin

port: 8080

- matches:

- path:

type: PathPrefix

value: /

backendRefs:

- name: httpbin

port: 8080

EOF

Update the rate limiting policy by adding a route matching rule for the prefix /status/200; the other settings remain unchanged.

kubectl apply -n httpbin -f - <<EOF
apiVersion: gateway.flomesh.io/v1alpha1
kind: RateLimitPolicy
metadata:
  name: ratelimit-sample
spec:
  targetRef:
    group: gateway.networking.k8s.io
    kind: HTTPRoute
    name: http-route-foo
    namespace: httpbin
  http:
    - match:
        path:
          type: PathPrefix
          value: /status/200
      config:
        backlog: 1
        requests: 100
        statTimeWindow: 60
        responseStatusCode: 429
        responseHeadersToAdd:
          - name: RateLimit-Limit
            value: "100"
EOF

After applying the policy, send the same load. From the results, only 200 requests are successful.

fortio load -quiet -c 1 -n 1000 -qps 200 -H 'host:foo.example.com' http://$GATEWAY_IP:8000/status/200

Code -1 : 2 (0.2 %)

Code 200 : 200 (20.0 %)

Code 429 : 798 (79.8 %)

When the path /status/204 is used, it will not be subject to rate limiting.

fortio load -quiet -c 1 -n 1000 -qps 200 -H 'host:foo.example.com' http://$GATEWAY_IP:8000/status/204

Code 204 : 1000 (100.0 %)

15 - Retry

Gateway retry feature enhances service reliability by resending failed requests, mitigating temporary issues, and improving user experience with strategic policies.

The retry functionality of a gateway is a crucial network communication mechanism designed to enhance the reliability and fault tolerance of system service calls. This feature allows the gateway to automatically resend a request if the initial request fails, thereby reducing the impact of temporary issues (such as network fluctuations, momentary service overloads, etc.) on the end-user experience.

It works as follows: when the gateway sends a request to a backend service and encounters specific types of failures (such as connection errors, timeouts, or 5xx errors), it attempts to resend the request based on preset policies instead of immediately returning the error to the client.

Prerequisites

- Kubernetes cluster

- kubectl tool

- FSM Gateway installed via guide doc.

Demonstration

Deploying Example Application

We use the fortio server as the example application, which allows defining response status codes and their occurrence probabilities through the status request parameter.

kubectl create namespace server

kubectl apply -n server -f - <<EOF

apiVersion: v1

kind: Service

metadata:

name: fortio

labels:

app: fortio

service: fortio

spec:

ports:

- port: 8080

name: http-8080

selector:

app: fortio

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: fortio

spec:

replicas: 1

selector:

matchLabels:

app: fortio

template:

metadata:

labels:

app: fortio

spec:

containers:

- name: fortio

image: fortio/fortio:latest_release

imagePullPolicy: Always

ports:

- containerPort: 8080

name: http

EOF

Creating Gateway and Route

kubectl apply -n server -f - <<EOF

apiVersion: gateway.networking.k8s.io/v1

kind: Gateway

metadata:

name: simple-fsm-gateway

spec:

gatewayClassName: fsm-gateway-cls

listeners:

- protocol: HTTP

port: 8000

name: http

allowedRoutes:

namespaces:

from: Same

---

apiVersion: gateway.networking.k8s.io/v1

kind: HTTPRoute

metadata:

name: fortio-route

spec:

parentRefs:

- name: simple-fsm-gateway

port: 8000

rules:

- matches:

- path:

type: PathPrefix

value: /

backendRefs:

- name: fortio

port: 8080

EOF

Check if the application is accessible.

export GATEWAY_IP=$(kubectl get svc -n server -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

curl -i http://$GATEWAY_IP:8000/echo

HTTP/1.1 200 OK

date: Fri, 05 Jan 2024 07:02:17 GMT

content-length: 0

connection: keep-alive

Testing Retry Strategy

Before setting the retry strategy, add the parameter status=503:10 so that the fortio server has a 10% chance of returning 503. When fortio load sends 100 requests, roughly 10% of the responses are 503.

fortio load -quiet -c 1 -n 100 http://$GATEWAY_IP:8000/echo\?status\=503:10

Code 200 : 89 (89.0 %)

Code 503 : 11 (11.0 %)

All done 100 calls (plus 0 warmup) 1.054 ms avg, 8.0 qps

Then set the retry strategy.

- targetRef specifies the target resource for the policy, which in the retry policy can only be a Service in K8s core or ServiceImport in flomesh.io (the latter for multi-cluster). Here we specify the fortio in namespace server.

- ports is the list of service ports, as the service may expose multiple ports, different ports can have different retry strategies.

  - port is the service port, set to 8080 for the fortio service in this example.

  - config is the core configuration of the retry policy.

    - retryOn is the list of response codes that are retryable, e.g., 5xx matches 500-599, or 500 matches only 500.

    - numRetries is the number of retries.

    - backoffBaseInterval is the base interval for calculating backoff (in seconds), i.e., the waiting time between consecutive retry requests. It’s mainly to avoid additional pressure on services that are experiencing problems.

For detailed retry policy configuration, refer to the official documentation RetryPolicy.
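The retry fields can be illustrated with a small sketch. It is not FSM Gateway’s implementation: the function names are hypothetical, send stands for any callable that performs the request and returns a status code, and doubling the wait on each attempt is an assumption about how the base interval is used.

```python
import time


def is_retryable(status: int, retry_on: list) -> bool:
    """A rule such as "5xx" matches 500-599; a plain code such as "500" matches only itself."""
    for rule in retry_on:
        rule = str(rule).lower()
        if len(rule) == 3 and rule.endswith("xx") and rule[0].isdigit():
            if status // 100 == int(rule[0]):
                return True
        elif rule.isdigit() and status == int(rule):
            return True
    return False


def call_with_retry(send, retry_on, num_retries, backoff_base_interval, sleep=time.sleep):
    """Call send() and retry up to num_retries times while the status is retryable."""
    status = send()
    for attempt in range(num_retries):
        if not is_retryable(status, retry_on):
            break
        # Assumed exponential backoff: base, 2*base, 4*base, ...
        sleep(backoff_base_interval * (2 ** attempt))
        status = send()
    return status


responses = iter([503, 503, 200])
waits = []
print(call_with_retry(lambda: next(responses), ["5xx"], 5, 2, sleep=waits.append), waits)
```

Passing sleep=waits.append records the waits instead of actually sleeping, which keeps the example fast to run.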

kubectl apply -n server -f - <<EOF
apiVersion: gateway.flomesh.io/v1alpha1
kind: RetryPolicy
metadata:
  name: retry-policy-sample
spec:
  targetRef:
    kind: Service
    name: fortio
    namespace: server
  ports:
    - port: 8080
      config:
        retryOn:
          - 5xx
        numRetries: 5
        backoffBaseInterval: 2
EOF

After the policy takes effect, send the same 100 requests, and you can see all are 200 responses. Note that the average response time has increased due to the added time for retries.

fortio load -quiet -c 1 -n 100 http://$GATEWAY_IP:8000/echo\?status\=503:10

Code 200 : 100 (100.0 %)

All done 100 calls (plus 0 warmup) 160.820 ms avg, 5.8 qps

16 - Session Sticky

Session sticky in gateways ensures user requests are directed to the same server, enhancing user experience and transaction integrity, typically implemented using cookies.

Session sticky in a gateway is a network technology designed to ensure that a user’s consecutive requests are directed to the same backend server over a period of time. This functionality is particularly crucial in scenarios requiring user state maintenance or continuous interaction, such as maintaining online shopping carts, keeping users logged in, or handling multi-step transactions.

Session sticky plays a key role in enhancing website performance and user satisfaction by providing a consistent user experience and maintaining transaction integrity. It is typically implemented using client identification information like Cookies or server-side IP binding techniques, thereby ensuring request continuity and effective server load balancing.

Prerequisites

- Kubernetes cluster

- kubectl tool

- FSM Gateway installed via guide doc.

Demonstration

Deploying a Sample Application

To verify the session sticky feature, create the Service pipy, and set up two endpoints with different responses. These endpoints are simulated using the programmable proxy Pipy.

kubectl create namespace server

kubectl apply -n server -f - <<EOF

apiVersion: v1

kind: Service

metadata:

name: pipy

spec:

selector:

app: pipy

ports:

- protocol: TCP

port: 8080

targetPort: 8080

---

apiVersion: v1

kind: Pod

metadata:

name: pipy-1

labels:

app: pipy

spec:

containers:

- name: pipy

image: flomesh/pipy:0.99.0-2

command: ["pipy", "-e", "pipy().listen(8080).serveHTTP(new Message({status: 200},'Hello, world'))"]

---

apiVersion: v1

kind: Pod

metadata:

name: pipy-2

labels:

app: pipy

spec:

containers:

- name: pipy

image: flomesh/pipy:0.99.0-2

command: ["pipy", "-e", "pipy().listen(8080).serveHTTP(new Message({status: 503},'Service unavailable'))"]

EOF

Creating Gateway and Routes

Next, create a gateway and set up routes for the Service pipy.

kubectl apply -n server -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: simple-fsm-gateway
spec:
  gatewayClassName: fsm-gateway-cls
  listeners:
    - protocol: HTTP
      port: 8000
      name: http
      allowedRoutes:
        namespaces:
          from: Same
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: fortio-route
spec:
  parentRefs:
    - name: simple-fsm-gateway
      port: 8000
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: pipy
          port: 8080
EOF

Check that the application is accessible. The two requests below return different responses, which shows that the gateway is balancing load across the two endpoints.

export GATEWAY_IP=$(kubectl get svc -n server -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

curl http://$GATEWAY_IP:8000/

Service unavailable

curl http://$GATEWAY_IP:8000/

Hello, world
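Two requests are a small sample. To see how the gateway spreads a longer run of requests, the response bodies can be tallied. The sketch below is an illustration rather than part of FSM: tally_responses is a hypothetical helper name, and the request count of 10 in the usage comment is an arbitrary choice.

```shell
# tally_responses: hypothetical helper, not part of FSM or kubectl.
# Reads one response body per line on stdin and prints "<count> <body>"
# for each distinct body, sorted for stable output.
tally_responses() {
  awk 'NF { count[$0]++ } END { for (body in count) print count[body], body }' | sort
}

# Usage (assumes GATEWAY_IP is exported as above; echo adds the newline
# that the sample response bodies do not end with):
#   for i in $(seq 10); do curl -s "http://$GATEWAY_IP:8000/"; echo; done | tally_responses
```

With no session sticky policy in place, both Hello, world and Service unavailable are expected to show up in the tally.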

Testing Session Sticky Strategy

Next, configure the session sticky strategy.

The fields of the policy are as follows:

- targetRef specifies the target resource for the policy. In this policy, the target resource can only be a K8s core Service. Here, the pipy Service in the server namespace is specified.

- ports is a list of service ports. Because a Service may expose multiple ports, each port can be given its own session sticky strategy.

- port is the service port, set to 8080 for the pipy Service in this example.

- config is the core configuration of the strategy.

- cookieName is the name of the cookie used for cookie-based session sticky. This field is optional, but when cookie-based session sticky is enabled, it defines the name of the cookie that stores backend server information, such as _srv_id. When a user first visits the application, a cookie named _srv_id is set, typically corresponding to a backend server. When the user returns, this cookie ensures that their requests are routed to the same server as before.

- expires is the lifespan of the cookie, that is, how long the user's consecutive requests will be directed to the same backend server. The value 600 used below appears as max-age=600 in the response, which corresponds to 600 seconds.

For detailed configuration, refer to the official SessionStickyPolicy documentation.

kubectl apply -n server -f - <<EOF
apiVersion: gateway.flomesh.io/v1alpha1
kind: SessionStickyPolicy
metadata:
  name: session-sticky-policy-sample
spec:
  targetRef:
    group: ""
    kind: Service
    name: pipy
    namespace: server
  ports:
    - port: 8080
      config:
        cookieName: _srv_id
        expires: 600
EOF

After creating the policy, send a request again. With the -i option, curl prints the response headers, which now include a set-cookie header.

curl -i http://$GATEWAY_IP:8000/

HTTP/1.1 200 OK

set-cookie: _srv_id=7252425551334343; path=/; expires=Fri, 5 Jan 2024 19:15:23 GMT; max-age=600

content-length: 12

connection: keep-alive

Hello, world

Next, send three requests that carry the cookie from the response above, using the -b option. All three requests receive the same response, which indicates that session sticky is in effect.

curl -b _srv_id=7252425551334343 http://$GATEWAY_IP:8000/

Hello, world

curl -b _srv_id=7252425551334343 http://$GATEWAY_IP:8000/

Hello, world

curl -b _srv_id=7252425551334343 http://$GATEWAY_IP:8000/

Hello, world
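Copying the cookie value by hand works for a demonstration, but the step can also be scripted. The sketch below pulls the name=value pair out of the headers printed by curl -i. extract_cookie is a hypothetical helper name, not something FSM or curl provides, and it assumes the cookie carries the name configured in cookieName.

```shell
# extract_cookie NAME: hypothetical helper, not part of FSM or curl.
# Reads an HTTP response (as printed by `curl -i`) on stdin and prints the
# first "NAME=value" pair found in a set-cookie header.
extract_cookie() {
  tr -d '\r' | awk -v name="$1" '
    !found && (tolower($0) ~ /^set-cookie:/) {
      sub(/^[^:]*:[ \t]*/, "")        # drop the header name
      split($0, attrs, ";")           # attrs[1] is the name=value pair
      if (index(attrs[1], name "=") == 1) { print attrs[1]; found = 1 }
    }'
}

# Usage (assumes GATEWAY_IP is exported as above):
#   cookie=$(curl -s -i "http://$GATEWAY_IP:8000/" | extract_cookie _srv_id)
#   curl -b "$cookie" "http://$GATEWAY_IP:8000/"
```

curl can also handle this on its own: the -c option saves received cookies to a file, and passing that file to -b sends them back on later requests.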

17 - Health Check

Gateway health checks in Kubernetes ensure traffic is directed only to healthy services, enhancing system availability and resilience by isolating unhealthy endpoints in microservices.

Gateway health check is an automated monitoring mechanism that regularly checks and verifies the health of backend services, ensuring traffic is only forwarded to those services that are healthy and can handle requests properly. This feature is crucial in microservices or distributed systems, as it maintains high availability and resilience by promptly identifying and isolating faulty or underperforming services.

Health checks enable gateways to ensure that request loads are effectively distributed to well-functioning services, thereby improving the overall system stability and response speed.

Prerequisites

- Kubernetes cluster

- kubectl tool

- FSM Gateway installed, following the installation guide.

Demonstration

Deploying a Sample Application

To test the health check functionality, create two endpoints with different health statuses. This is achieved by creating the Service pipy, with two endpoints simulated using the programmable proxy Pipy.

kubectl create namespace server

kubectl apply -n server -f - <<EOF
apiVersion: v1
kind: Service
metadata:
  name: pipy
spec:
  selector:
    app: pipy
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080
---
apiVersion: v1
kind: Pod
metadata:
  name: pipy-1
  labels:
    app: pipy
spec:
  containers:
    - name: pipy
      image: flomesh/pipy:0.99.0-2
      command: ["pipy", "-e", "pipy().listen(8080).serveHTTP(new Message({status: 200},'Hello, world'))"]
---
apiVersion: v1
kind: Pod
metadata:
  name: pipy-2
  labels:
    app: pipy
spec:
  containers:
    - name: pipy
      image: flomesh/pipy:0.99.0-2
      command: ["pipy", "-e", "pipy().listen(8080).serveHTTP(new Message({status: 503},'Service unavailable'))"]
EOF

Creating Gateway and Routes

Next, create a gateway and set up routes for the Service pipy.

kubectl apply -n server -f - <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: simple-fsm-gateway
spec:
  gatewayClassName: fsm-gateway-cls
  listeners:
    - protocol: HTTP
      port: 8000
      name: http
      allowedRoutes:
        namespaces:
          from: Same
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: fortio-route
spec:
  parentRefs:
    - name: simple-fsm-gateway
      port: 8000
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: pipy
          port: 8080
EOF

Check if the application is accessible. The results show that the gateway has balanced the load between a healthy endpoint and an unhealthy one.

export GATEWAY_IP=$(kubectl get svc -n server -l app=fsm-gateway -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

curl -o /dev/null -s -w '%{http_code}' http://$GATEWAY_IP:8000/

200

curl -o /dev/null -s -w '%{http_code}' http://$GATEWAY_IP:8000/

503
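The same check can be repeated over more requests to get a baseline before any health check policy is applied. The sketch below counts how many status codes fall in the 2xx range. count_ok is a hypothetical helper name, not part of FSM or curl, and the request count in the usage comment is arbitrary.

```shell
# count_ok: hypothetical helper, not part of FSM or curl.
# Reads one HTTP status code per line on stdin and prints "<2xx count>/<total>".
count_ok() {
  awk 'NF { total++; if ($1 ~ /^2[0-9][0-9]$/) ok++ }
       END { printf "%d/%d\n", ok, total }'
}

# Usage (assumes GATEWAY_IP is exported as above):
#   for i in $(seq 10); do
#     curl -o /dev/null -s -w '%{http_code}\n' "http://$GATEWAY_IP:8000/"
#   done | count_ok
```

At this point in the walkthrough a mix of 200 and 503 responses is expected, since both endpoints still receive traffic.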

Testing Health Check Functionality

Next, configure the health check policy.