How-to Guides

- 1: Operating guides

- 1.1: Install the FSM CLI

- 1.2: Install the FSM Control Plane

- 1.3: Upgrade the FSM Control Plane

- 1.4: Uninstall the FSM Control Plane and Components

- 1.5: Mesh configuration

- 1.6: Reconciler Guide

- 1.7: Extending FSM

- 2: Application onboarding

- 2.1: Prerequisites

- 2.2: Namespace addition

- 2.3: Sidecar Injection

- 2.4: Application Protocol Selection

- 3: Traffic Management

- 3.1: Permissive Mode

- 3.2: Traffic Redirection

- 3.2.1: Iptables Redirection

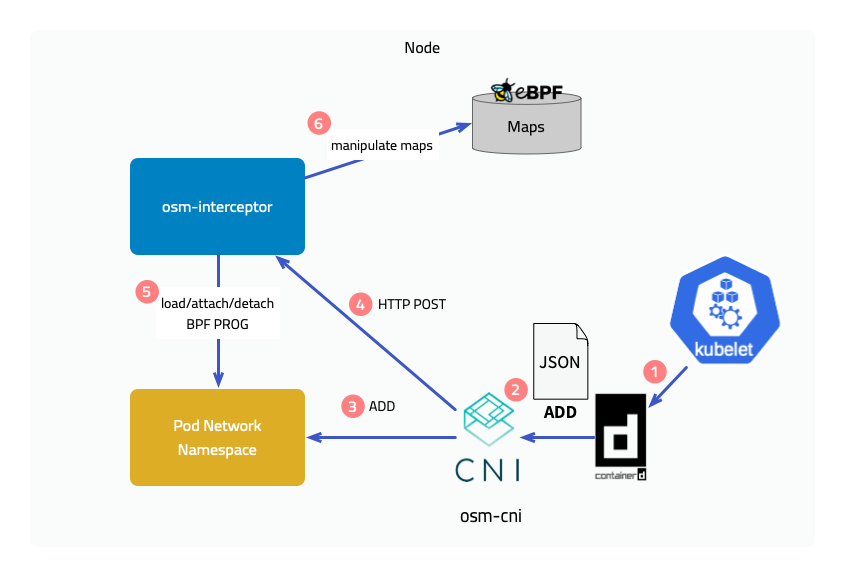

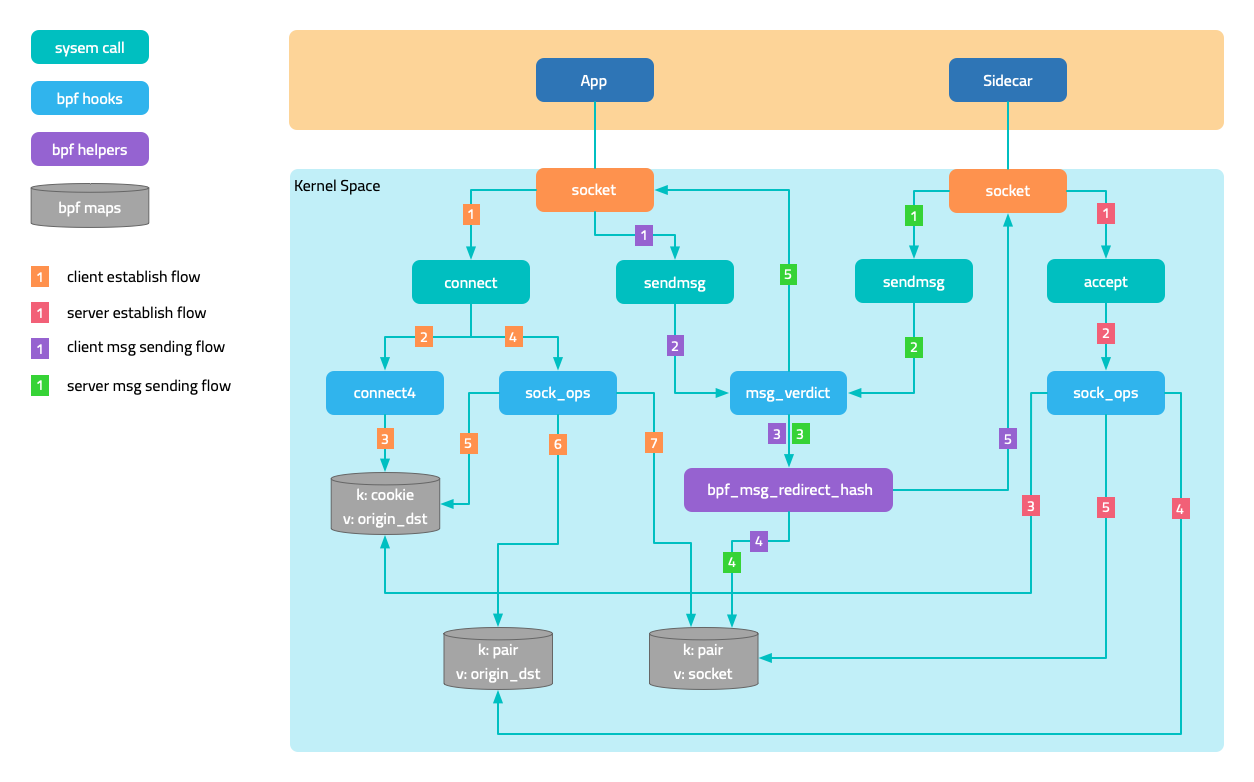

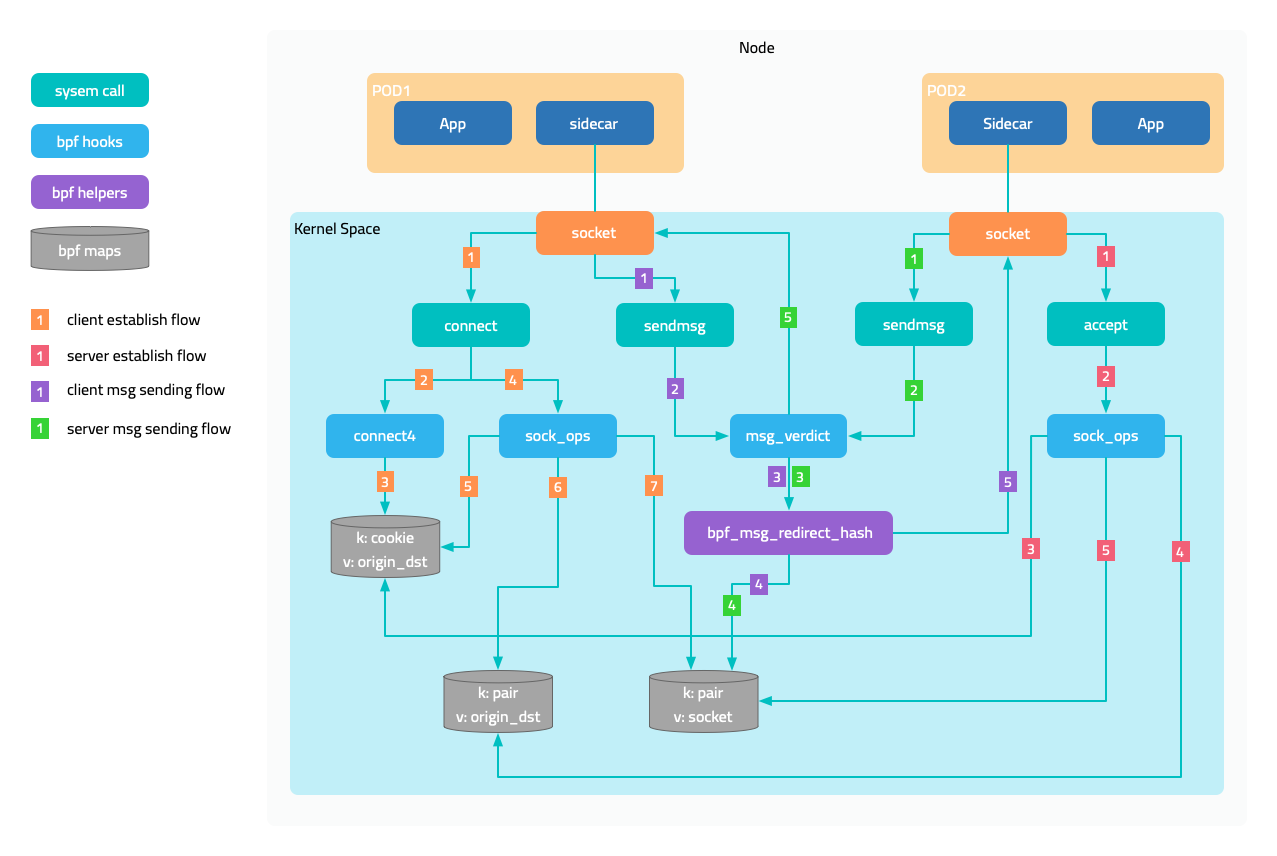

- 3.2.2: eBPF Redirection

- 3.3: Traffic Splitting

- 3.4: Circuit Breaking

- 3.5: Retry

- 3.6: Rate Limiting

- 3.7: Ingress

- 3.7.1: Ingress to Mesh

- 3.7.2: Service Loadbalancer

- 3.7.3: FSM Ingress Controller

- 3.7.3.1: Installation

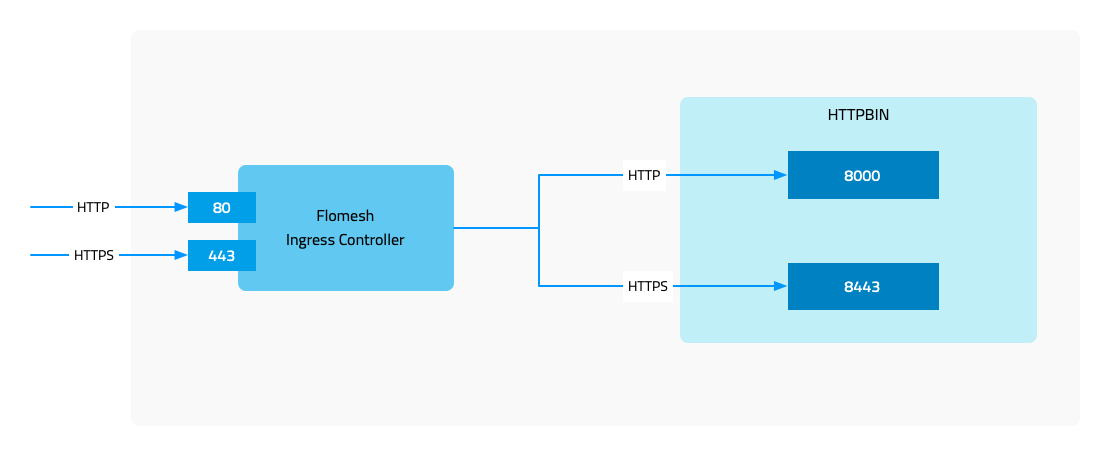

- 3.7.3.2: Basics

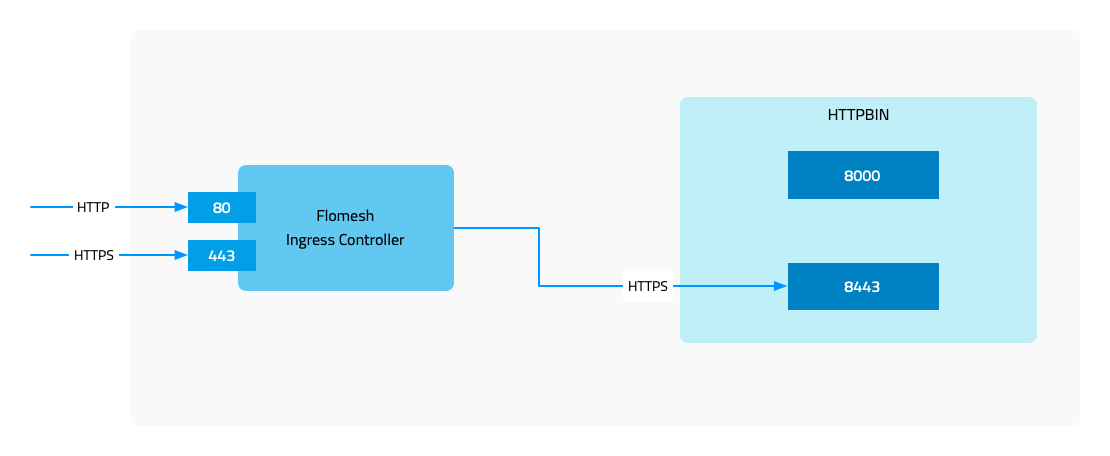

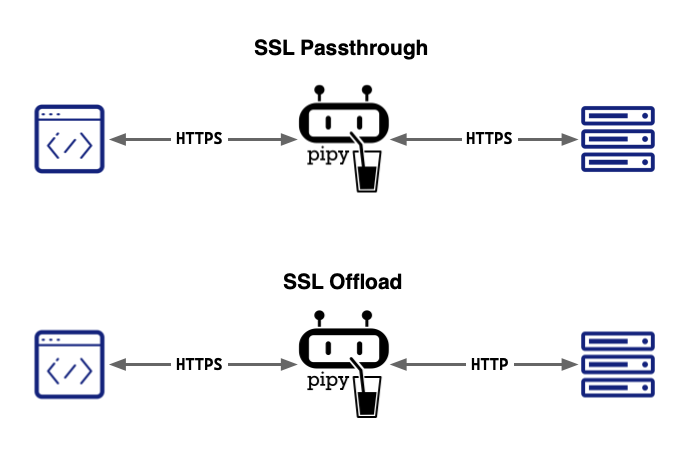

- 3.7.3.3: Advanced TLS

- 3.7.3.4: TLS Passthrough

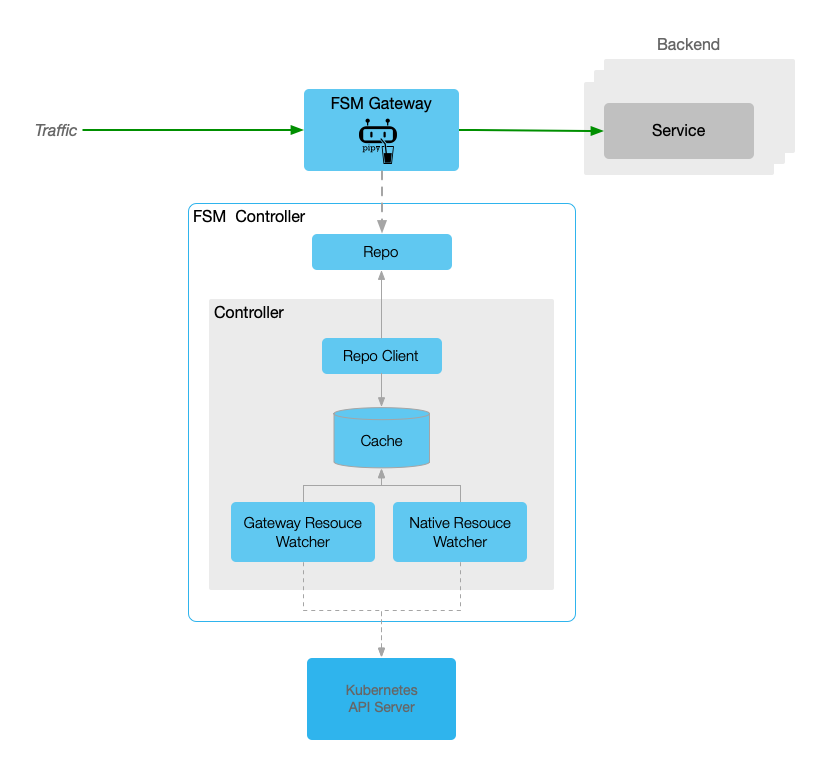

- 3.7.4: FSM Gateway

- 3.7.4.1: Installation

- 3.7.4.2: HTTP Routing

- 3.7.4.3: HTTP URL Rewrite

- 3.7.4.4: HTTP Redirect

- 3.7.4.5: HTTP Request Header Manipulate

- 3.7.4.6: HTTP Response Header Manipulate

- 3.7.4.7: TCP Routing

- 3.7.4.8: TLS Termination

- 3.7.4.9: TLS Passthrough

- 3.7.4.10: gRPC Routing

- 3.7.4.11: UDP Routing

- 3.7.4.12: Fault Injection

- 3.7.4.13: Access Control

- 3.7.4.14: Rate Limit

- 3.7.4.15: Retry

- 3.7.4.16: Session Sticky

- 3.7.4.17: Health Check

- 3.7.4.18: Loadbalancing Algorithm

- 3.7.4.19: Upstream TLS

- 3.7.4.20: Gateway mTLS

- 3.7.4.21: Traffic Mirroring

- 3.8: Egress

- 3.8.1: Egress

- 3.8.2: Egress Gateway

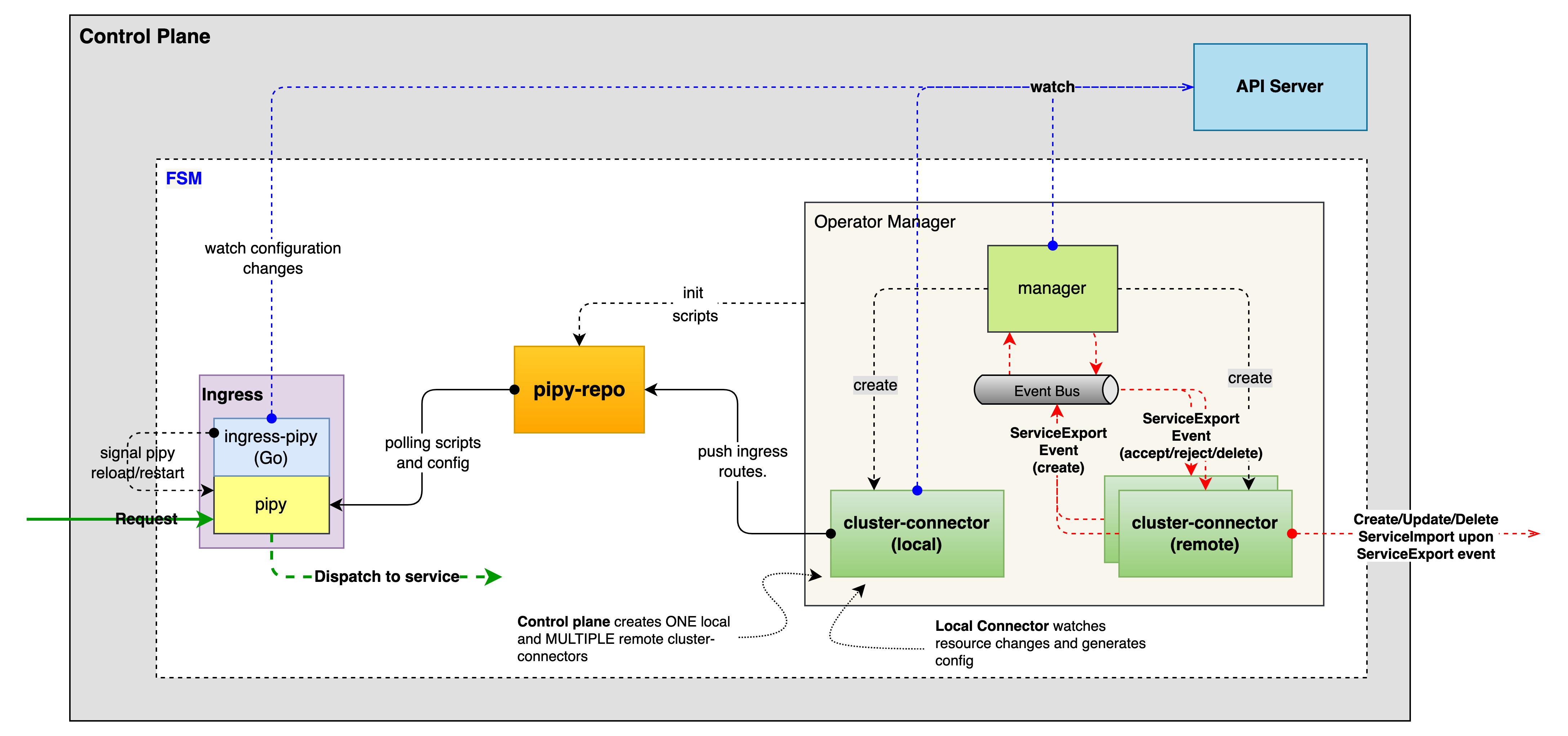

- 3.9: Multi-cluster services

- 4: Observability

- 5: Health Checks

- 6: Integrations

- 6.1: Integrate Dapr with FSM

- 6.2: Integrate Prometheus with FSM

- 6.3: Microservice Discovery Integration

- 7: Security

- 7.1: Bi-directional mTLS

- 7.2: Access Control Management

- 7.3: Certificate Management

- 7.4: Traffic Access Control

- 8: Troubleshooting

- 8.1: Application Container Lifecycle

- 8.2: Error Codes

- 8.3: Prometheus

- 8.4: Grafana

- 8.5: Uninstall

- 8.6: Traffic Troubleshooting

- 8.6.1: Iptables Redirection

- 8.6.2: Permissive Traffic Policy Mode

- 8.6.3: Ingress

- 8.6.4: Egress Troubleshooting

- 9: Data plane benchmark

1 - Operating guides

1.1 - Install the FSM CLI

This section describes how to install and use the fsm CLI.

Prerequisites

- Kubernetes cluster running Kubernetes v1.19.0 or greater

Set up the FSM CLI

From the Binary Releases

Download the platform-specific compressed package from the Releases page.

Unpack the fsm binary and add it to $PATH to get started.

Linux and macOS

In a bash-based shell on Linux/macOS or Windows Subsystem for Linux, use curl to download the FSM release and then extract with tar as follows:

# Specify the FSM version that will be leveraged throughout these instructions

FSM_VERSION=v1.3.3

# Linux curl command only

curl -sL "https://github.com/flomesh-io/fsm/releases/download/$FSM_VERSION/fsm-$FSM_VERSION-linux-amd64.tar.gz" | tar -vxzf -

# macOS curl command only

curl -sL "https://github.com/flomesh-io/fsm/releases/download/$FSM_VERSION/fsm-$FSM_VERSION-darwin-amd64.tar.gz" | tar -vxzf -

The fsm client binary runs on your client machine and allows you to manage FSM in your Kubernetes cluster. Use the following command to install the fsm client binary in a bash-based shell on Linux or Windows Subsystem for Linux. It copies the fsm client binary to a standard user program location in your PATH.

sudo mv ./linux-amd64/fsm /usr/local/bin/fsm

For macOS, use the following command:

sudo mv ./darwin-amd64/fsm /usr/local/bin/fsm
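The download and install steps above can be wrapped in a small script. The sketch below is an illustration, not an official installer. The helper names (fsm_release_url, detect_os, detect_arch, install_fsm) are hypothetical. It assumes the asset naming fsm-<version>-<os>-amd64.tar.gz shown above, so it only maps x86_64 machines.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: build the release URL for a platform and install
# the fsm binary. Assumes the asset naming used in the curl commands above.
fsm_release_url() {
  local version="$1" os="$2" arch="$3"
  echo "https://github.com/flomesh-io/fsm/releases/download/${version}/fsm-${version}-${os}-${arch}.tar.gz"
}

# Map `uname -s` to the OS names used in the asset file names.
detect_os() {
  case "$(uname -s)" in
    Linux) echo linux ;;
    Darwin) echo darwin ;;
    *) echo "unsupported OS: $(uname -s)" >&2; return 1 ;;
  esac
}

# Only amd64 assets are shown in this guide, so only x86_64 is mapped.
detect_arch() {
  case "$(uname -m)" in
    x86_64|amd64) echo amd64 ;;
    *) echo "unsupported architecture: $(uname -m)" >&2; return 1 ;;
  esac
}

# Download, unpack, and move the binary into /usr/local/bin.
install_fsm() {
  local version="$1" os arch
  os="$(detect_os)" || return 1
  arch="$(detect_arch)" || return 1
  curl -sL "$(fsm_release_url "$version" "$os" "$arch")" | tar -xzf - || return 1
  sudo mv "./${os}-${arch}/fsm" /usr/local/bin/fsm
}
```

For example, install_fsm v1.3.3 downloads and installs the binary, after which fsm version can be used to check it.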

You can verify that the fsm client binary has been correctly added to your path, and check its version number, with the following command:

fsm version

From Source (Linux, macOS)

Building FSM from source requires more steps, but it is the best way to test the latest changes and is useful in a development environment.

You must have a working Go environment and Helm 3 installed.

git clone https://github.com/flomesh-io/fsm.git

cd fsm

make build-fsm

make build-fsm will fetch any required dependencies, compile fsm, and place the binary at bin/fsm. Add the bin directory to $PATH so you can easily use fsm.

Install FSM

FSM Configuration

By default, the control plane components are installed into a Kubernetes Namespace called fsm-system and the control plane is given a unique identifier attribute mesh-name defaulted to fsm.

During installation, the Namespace and mesh-name can be configured through flags when using the fsm CLI or by editing the values file when using the helm CLI.

The mesh-name is a unique identifier assigned to an fsm-controller instance during install to identify and manage a mesh instance.

The mesh-name should follow RFC 1123 DNS Label constraints. The mesh-name must:

- contain at most 63 characters

- contain only lowercase alphanumeric characters or ‘-’

- start with an alphanumeric character

- end with an alphanumeric character
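A candidate mesh-name can be checked before installation with a regular expression that encodes the four rules above. This is a hypothetical client-side helper (is_valid_mesh_name), not the validation FSM itself performs. The allowed characters are spelled out explicitly so the check does not depend on locale-specific character ranges.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 is a valid RFC 1123 DNS label.
is_valid_mesh_name() {
  local name="$1"
  local chars='abcdefghijklmnopqrstuvwxyz0123456789'
  # Start and end with a lowercase alphanumeric; '-' allowed in between.
  local re="^[${chars}]([-${chars}]*[${chars}])?\$"
  # Non-empty and at most 63 characters.
  if [ -z "$name" ] || [ "${#name}" -gt 63 ]; then
    return 1
  fi
  [[ $name =~ $re ]]
}
```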

Using the FSM CLI

Use the fsm CLI to install the FSM control plane onto a Kubernetes cluster.

Run fsm install.

# Install fsm control plane components

fsm install

fsm-preinstall[fsm-preinstall-4vb8n] Done

fsm-bootstrap[fsm-bootstrap-cdbccf694-nwm74] Done

fsm-injector[fsm-injector-7c9f5f9cdf-tw99v] Done

fsm-controller[fsm-controller-6d5984fb9f-2nj7s] Done

FSM installed successfully in namespace [fsm-system] with mesh name [fsm]

Run fsm install --help for more options.

1.2 - Install the FSM Control Plane

Prerequisites

- Kubernetes cluster running Kubernetes v1.19.0 or greater

- The FSM CLI, the helm 3 CLI, or the OpenShift oc CLI

Kubernetes support

FSM can be run on Kubernetes versions that are supported at the time of the FSM release. The current support matrix is:

| FSM | Kubernetes |
|---|---|
| 1.1 | 1.19 - 1.24 |

Using the FSM CLI

Use the fsm CLI to install the FSM control plane onto a Kubernetes cluster.

FSM CLI and Chart Compatibility

Each version of the FSM CLI is designed to work only with the matching version of the FSM Helm chart. Many operations may still work when some version skew exists, but those scenarios are not tested and issues that arise when using different CLI and chart versions may not get fixed even if reported.

Running the CLI

Run fsm install to install the FSM control plane.

fsm install

fsm-preinstall[fsm-preinstall-xsmz4] Done

fsm-bootstrap[fsm-bootstrap-7f59b7bf7-rs55z] Done

fsm-injector[fsm-injector-787bc867db-54gl6] Done

fsm-controller[fsm-controller-58d758b7fb-2zrr8] Done

FSM installed successfully in namespace [fsm-system] with mesh name [fsm]

Run fsm install --help for more options.

Note: Installing FSM via the CLI enforces deploying only one mesh in the cluster. FSM installs and manages the CRDs by adding a conversion webhook field to all the CRDs to support multiple API versions, which ties the CRDs to a specific instance of FSM. Hence, for FSM’s correct operation it is strongly recommended to have only one FSM mesh per cluster.

Using the Helm CLI

The FSM chart can be installed directly via the Helm CLI.

Editing the Values File

You can configure the FSM installation by overriding the values file.

Create a copy of the values file (make sure to use the version for the chart you wish to install).

Change any values you wish to customize. You can omit all other values.

To see which values correspond to the MeshConfig settings, see the FSM MeshConfig documentation.

For example, to set the logLevel field in the MeshConfig to info, save the following as override.yaml:

fsm:
  sidecarLogLevel: info

Helm install

Then run the following helm install command. The chart version can be found in the Helm chart you wish to install here.

helm install <mesh name> fsm --repo https://flomesh-io.github.io/fsm --version <chart version> --namespace <fsm namespace> --create-namespace --values override.yaml

Omit the --values flag if you prefer to use the default settings.

Run helm install --help for more options.

OpenShift

To install FSM on OpenShift:

Enable privileged init containers so that they can properly program iptables. The NET_ADMIN capability is not sufficient on OpenShift.

fsm install --set="fsm.enablePrivilegedInitContainer=true"

- If you have already installed FSM without enabling privileged init containers, set spec.sidecar.enablePrivilegedInitContainer to true in the FSM MeshConfig and restart any pods in the mesh.

Add the privileged security context constraint to each service account in the mesh.

- Install the oc CLI.

- Add the security context constraint to the service account:

oc adm policy add-scc-to-user privileged -z <service account name> -n <service account namespace>
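When a meshed namespace has several service accounts, the oc adm policy command above can be repeated for each of them. The following is a minimal sketch, not an official FSM tool. It assumes kubectl and oc are logged in to the OpenShift cluster with permission to modify security context constraints, and the function name add_privileged_scc_to_namespace is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: grant the privileged SCC to every service account
# in one namespace. Review the service account list before using this in
# production; it grants privileged access to all of them.
add_privileged_scc_to_namespace() {
  local ns="$1" sa
  # `-o name` prints entries such as "serviceaccount/default".
  for sa in $(kubectl get serviceaccounts -n "$ns" -o name); do
    # Strip the "serviceaccount/" prefix to get the bare account name for -z.
    oc adm policy add-scc-to-user privileged -z "${sa#serviceaccount/}" -n "$ns"
  done
}
```

For example, add_privileged_scc_to_namespace bookstore would run the command once per service account in the bookstore namespace.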

Pod Security Policy

Deprecated: PSP support has been deprecated in FSM since v0.10.0

PSP support will be removed in FSM 1.0.0

If you are running FSM in a cluster with PSPs enabled, pass in --set fsm.pspEnabled=true to your fsm install or helm install CLI command.

Enable Reconciler in FSM

If you wish to enable a reconciler in FSM, pass in --set fsm.enableReconciler=true to your fsm install or helm install CLI command. More information on the reconciler can be found in the Reconciler Guide.

Inspect FSM Components

A few components will be installed by default. Inspect them by using the following kubectl command:

# Replace fsm-system with the namespace where FSM is installed

kubectl get pods,svc,secrets,meshconfigs,serviceaccount --namespace fsm-system

A few cluster-wide (non-namespaced) components will also be installed. Inspect them using the following kubectl command:

kubectl get clusterrolebinding,clusterrole,mutatingwebhookconfiguration,validatingwebhookconfigurations -l app.kubernetes.io/name=flomesh.io

Under the hood, fsm uses Helm libraries to create a Helm release object in the control plane Namespace. The Helm release name is the mesh-name. The helm CLI can also be used to inspect the installed Kubernetes manifests in more detail. Go to https://helm.sh for instructions on installing Helm.

# Replace fsm-system with the namespace where FSM is installed

helm get manifest fsm --namespace fsm-system

Next Steps

Now that the FSM control plane is up and running, add services to the mesh.

1.3 - Upgrade the FSM Control Plane

This guide describes how to upgrade the FSM control plane.

How upgrades work

FSM’s control plane lifecycle is managed by Helm and can be upgraded with Helm’s upgrade functionality, which will patch or replace control plane components as needed based on changed values and resource templates.

Resource availability during upgrade

Since upgrades may include redeploying the fsm-controller with the new version, there may be some downtime of the controller. While the fsm-controller is unavailable, there will be a delay in processing new SMI resources, creating new pods to be injected with a proxy sidecar container will fail, and mTLS certificates will not be rotated.

Existing SMI resources will be unaffected. This means that the data plane (which includes the Pipy sidecar configs) will also be unaffected by the upgrade.

Data plane interruptions are expected if the upgrade includes CRD changes. Streamlining data plane upgrades is being tracked in issue #512.

Policy

Only certain upgrade paths are tested and supported.

Note: These plans are tentative and subject to change.

Breaking changes in this section refer to incompatible changes to the following user-facing components:

- fsm CLI commands, flags, and behavior

- SMI CRDs and controllers

This implies the following are NOT user-facing and incompatible changes are NOT considered “breaking” as long as the incompatibility is handled by user-facing components:

- Chart values.yaml

- fsm-mesh-config MeshConfig

- Internally-used labels and annotations (monitored-by, injection, metrics, etc.)

Upgrades are only supported between versions that do not include breaking changes, as described below.

For FSM versions 0.y.z:

- Breaking changes will not be introduced between 0.y.z and 0.y.z+1

- Breaking changes may be introduced between 0.y.z and 0.y+1.0

For FSM versions x.y.z where x >= 1:

- Breaking changes will not be introduced between x.y.z and x.y+1.0, or between x.y.z and x.y.z+1

- Breaking changes may be introduced between x.y.z and x+1.0.0
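The policy above can be expressed as a small check when planning an upgrade. The hypothetical upgrade_policy helper below prints compatible when the rules say no breaking changes are introduced, and may-break otherwise. Upgrade paths the policy does not mention (for example, skipping a minor version) are treated conservatively as may-break. Pre-release suffixes are ignored, and downgrades are out of scope.

```shell
#!/usr/bin/env bash
# Hypothetical helper: classify an upgrade from FROM to TO (e.g. v1.2.3).
upgrade_policy() {
  local from="${1#v}" to="${2#v}" fx fy tx ty
  # Drop any pre-release suffix ("1.3.0-beta.1" -> "1.3.0"), then split.
  IFS=. read -r fx fy _ <<< "${from%%-*}"
  IFS=. read -r tx ty _ <<< "${to%%-*}"
  if [ "$fx" -ne "$tx" ]; then
    # Major version change, including 0.y.z -> 1.0.0.
    echo may-break
  elif [ "$fx" -eq 0 ]; then
    # 0.y.z: only patch upgrades within the same minor are non-breaking.
    if [ "$fy" -eq "$ty" ]; then echo compatible; else echo may-break; fi
  else
    # x.y.z with x >= 1: a patch upgrade or the next minor is non-breaking.
    if [ "$ty" -eq "$fy" ] || [ "$ty" -eq $((fy + 1)) ]; then
      echo compatible
    else
      echo may-break
    fi
  fi
}
```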

How to upgrade FSM

The recommended way to upgrade a mesh is with the fsm CLI. For advanced use cases, helm may be used.

CRD Upgrades

Because Helm does not manage CRDs beyond the initial installation, FSM uses an init container on the fsm-bootstrap pod to update existing CRDs and add new ones during an upgrade. If the new release contains updates to existing CRDs or adds new CRDs, the init-fsm-bootstrap container on the fsm-bootstrap pod will update the CRDs. The associated Custom Resources will remain as is, requiring no additional action before or immediately after the upgrade.

Please check the CRD Updates section of the release notes to see if any updates have been made to the CRDs used by FSM. If the versions of the Custom Resources are within the versions the updated CRD supports, no immediate action is required. FSM implements a conversion webhook for all of its CRDs, ensuring support for older versions and providing the flexibility to update Custom Resources at a later point in time.

Upgrading with the FSM CLI

Pre-requisites

- Kubernetes cluster with the FSM control plane installed

- Ensure that the Kubernetes cluster has the minimum Kubernetes version required by the new FSM chart. This can be found in the Installation Pre-requisites.

- fsm CLI installed. By default, the fsm CLI will upgrade to the same chart version that it installs, e.g. v0.9.2 of the fsm CLI will upgrade to v0.9.2 of the FSM Helm chart. Upgrading to any other version of the Helm chart than the version matching the CLI may work, but those scenarios are not tested and issues that arise may not get fixed even if reported.

The fsm mesh upgrade command performs a helm upgrade of the existing Helm release for a mesh.

Basic usage requires no additional arguments or flags:

fsm mesh upgrade

FSM successfully upgraded mesh fsm

This command will upgrade the mesh with the default mesh name in the default FSM namespace. Values from the previous release will NOT carry over to the new release by default, but may be passed individually with the --set flag on fsm mesh upgrade.

See fsm mesh upgrade --help for more details

Upgrading with Helm

Pre-requisites

- Kubernetes cluster with the FSM control plane installed

- The helm 3 CLI

FSM Configuration

When upgrading, any custom settings used to install or run FSM may be reverted to their defaults (this applies only to metrics deployments). Please follow this guide carefully to prevent these values from being overwritten.

To preserve any changes you’ve made to the FSM configuration, use the helm --values flag. Create a copy of the values file (make sure to use the version for the upgraded chart) and change any values you wish to customize. You can omit all other values.

Note: Any configuration changes that go into the MeshConfig will not be applied during the upgrade; those values will remain as they were before the upgrade. If you wish to update any value in the MeshConfig, you can do so by patching the resource after the upgrade.

For example, if the logLevel field in the MeshConfig was set to info before the upgrade, updating it in override.yaml during an upgrade will not cause any change.

Warning: Do NOT change fsm.meshName or fsm.fsmNamespace

Helm Upgrade

Then run the following helm upgrade command.

helm upgrade <mesh name> fsm --repo https://flomesh-io.github.io/fsm --version <chart version> --namespace <fsm namespace> --values override.yaml

Omit the --values flag if you prefer to use the default settings.

Run helm upgrade --help for more options.
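If the existing release was installed with custom values, one way to avoid retyping them is to export the user-supplied values with helm get values and pass the resulting file to helm upgrade. This is a sketch under assumptions. The function name upgrade_fsm_keep_values is hypothetical. You should review the saved values against the new chart's values file before upgrading, and they must not change fsm.meshName or fsm.fsmNamespace.

```shell
#!/usr/bin/env bash
# Hypothetical helper: upgrade an FSM Helm release while keeping the
# values that were explicitly supplied for the current release.
# Arguments: <mesh name> <fsm namespace> <chart version> [values file]
upgrade_fsm_keep_values() {
  local mesh="$1" ns="$2" version="$3" values_file="${4:-override.yaml}"
  # Without --all, helm get values returns only user-supplied values.
  helm get values "$mesh" --namespace "$ns" -o yaml > "$values_file" || return 1
  helm upgrade "$mesh" fsm --repo https://flomesh-io.github.io/fsm \
    --version "$version" --namespace "$ns" --values "$values_file"
}
```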

Upgrading Third Party Dependencies

Pipy

Pipy versions can be updated by modifying the value of the sidecarImage field in fsm-mesh-config. For example, to update the Pipy image to latest (for illustration only; the latest image is not recommended), run the following command:

export fsm_namespace=fsm-system # Replace fsm-system with the namespace where FSM is installed

kubectl patch meshconfig fsm-mesh-config -n $fsm_namespace -p '{"spec":{"sidecar":{"sidecarImage": "flomesh/pipy:latest"}}}' --type=merge

After the MeshConfig resource has been updated, all Pods and deployments that are part of the mesh must be restarted so that the updated version of the Pipy sidecar can be injected into the Pod as part of the automated sidecar injection performed by FSM. This can be done with the kubectl rollout restart deploy command.
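Restarting every deployment by hand is tedious when many namespaces are in the mesh. The sketch below assumes the namespaces were added to the mesh with the fsm CLI, which labels them flomesh.io/monitored-by=<mesh name> (as described in the uninstall section). It only covers Deployments. The helper name restart_meshed_deployments is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: restart all Deployments in namespaces monitored by
# the given mesh (default "fsm") so the new sidecar image is injected.
restart_meshed_deployments() {
  local mesh="${1:-fsm}" ns
  # `-o name` prints entries such as "namespace/bookstore".
  for ns in $(kubectl get namespaces -l "flomesh.io/monitored-by=${mesh}" -o name); do
    # With no deployment name, rollout restart targets every Deployment
    # in the namespace.
    kubectl rollout restart deployment -n "${ns#namespace/}"
  done
}
```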

Prometheus, Grafana, and Jaeger

If enabled, FSM’s Prometheus, Grafana, and Jaeger services are deployed alongside other FSM control plane components. Although these third-party dependencies cannot be updated through the MeshConfig like Pipy, their versions can still be updated directly in the deployment. For instance, to update Prometheus to v2.19.1, run:

export fsm_namespace=fsm-system # Replace fsm-system with the namespace where FSM is installed

kubectl set image deployment/fsm-prometheus -n $fsm_namespace prometheus="prom/prometheus:v2.19.1"

To update to Grafana 8.1.0, the command would look like:

kubectl set image deployment/fsm-grafana -n $fsm_namespace grafana="grafana/grafana:8.1.0"

And for Jaeger, the user would run the following to update to 1.26.0:

kubectl set image deployment/jaeger -n $fsm_namespace jaeger="jaegertracing/all-in-one:1.26.0"

FSM Upgrade Troubleshooting Guide

FSM Mesh Upgrade Timing Out

Insufficient CPU

If the fsm mesh upgrade command is timing out, it could be due to insufficient CPU.

- Check the pods to see if any of them aren’t fully up and running

# Replace fsm-system with fsm-controller's namespace if using a non-default namespace

kubectl get pods -n fsm-system

- If there are any pods in the Pending state, use kubectl describe to check the Events section

# Replace fsm-system with fsm-controller's namespace if using a non-default namespace

kubectl describe pod <pod-name> -n fsm-system

If you see the following error, then please increase the number of CPUs Docker can use.

`Warning FailedScheduling 4s (x15 over 19m) default-scheduler 0/1 nodes are available: 1 Insufficient cpu.`
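The two checks above can be combined into one helper that lists Pending pods in the control plane namespace and prints only the Events section of each. This is a minimal sketch. The function name show_pending_pod_events is hypothetical, and fsm-system is assumed as the default namespace.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Events section of every Pending pod.
show_pending_pod_events() {
  local ns="${1:-fsm-system}" pod
  for pod in $(kubectl get pods -n "$ns" --field-selector=status.phase=Pending -o name); do
    echo "== ${pod}"
    # Print from the "Events:" line to the end of the describe output.
    kubectl describe -n "$ns" "$pod" | sed -n '/^Events:/,$p'
  done
}
```

Look for "Insufficient cpu" in the output.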

Error Validating CLI Parameters

If the fsm mesh upgrade command is still timing out, it could be due to a CLI/Image Version mismatch.

- Check the pods to see if any of them aren’t fully up and running

# Replace fsm-system with fsm-controller's namespace if using a non-default namespace

kubectl get pods -n fsm-system

- If there are any pods in the Pending state, use kubectl describe to check the Events section for Error Validating CLI parameters

# Replace fsm-system with fsm-controller's namespace if using a non-default namespace

kubectl describe pod <pod-name> -n fsm-system

- If you find the error, please check the pod’s logs for any errors

kubectl logs -n fsm-system <pod-name> | grep -i error

If you see the following error, then it’s due to a CLI/Image Version mismatch.

`"error":"Please specify the init container image using --init-container-image","reason":"FatalInvalidCLIParameters"`

The workaround is to set the --container-registry and --fsm-image-tag flags when running fsm mesh upgrade.

fsm mesh upgrade --container-registry $CTR_REGISTRY --fsm-image-tag $CTR_TAG --enable-egress=true

Other Issues

If you’re running into issues that are not resolved with the steps above, please open a GitHub issue.

1.4 - Uninstall the FSM Control Plane and Components

This guide describes how to uninstall FSM from a Kubernetes cluster. It assumes there is a single FSM control plane (mesh) running. If there are multiple meshes in a cluster, repeat the process described for each control plane in the cluster before uninstalling any cluster-wide resources at the end of the guide. Taking into consideration both the control plane and the data plane, this guide aims to walk through uninstalling all remnants of FSM with minimal downtime.

Prerequisites

- Kubernetes cluster with FSM installed

- The kubectl CLI

- The FSM CLI or the Helm 3 CLI

Remove Pipy Sidecars from Application Pods and Pipy Secrets

The first step to uninstalling FSM is to remove the Pipy sidecar containers from application pods. The sidecar containers enforce traffic policies. Without them, traffic will flow to and from Pods in accordance with default Kubernetes networking, unless there are Kubernetes Network Policies applied.

FSM Pipy sidecars and related secrets will be removed in the following steps:

Disable Automatic Sidecar Injection

FSM Automatic Sidecar Injection is most commonly enabled by adding namespaces to the mesh via the fsm CLI. Use the fsm CLI to see which namespaces have sidecar injection enabled. If there are multiple control planes installed, be sure to specify the --mesh-name flag.

View namespaces in a mesh:

fsm namespace list --mesh-name=<mesh-name>

NAMESPACE MESH SIDECAR-INJECTION

<namespace1> <mesh-name> enabled

<namespace2> <mesh-name> enabled

Remove each namespace from the mesh:

fsm namespace remove <namespace> --mesh-name=<mesh-name>

Namespace [<namespace>] successfully removed from mesh [<mesh-name>]

This will remove the flomesh.io/sidecar-injection: enabled annotation and flomesh.io/monitored-by: <mesh name> label from the namespace.

Alternatively, if sidecar injection is enabled via annotations on pods instead of per namespace, please modify the pod or deployment spec to remove the sidecar injection annotation.
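With many namespaces, the list and remove commands above can be chained. This sketch assumes the fsm namespace list output has the shape shown above: a header row, then one namespace per row with its name in the first column. remove_all_mesh_namespaces is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove every namespace listed for a mesh.
remove_all_mesh_namespaces() {
  local mesh="$1" ns
  # Skip the header row and keep only the NAMESPACE column.
  for ns in $(fsm namespace list --mesh-name="$mesh" | awk 'NR > 1 { print $1 }'); do
    fsm namespace remove "$ns" --mesh-name="$mesh"
  done
}
```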

Restart Pods

Restart all pods running with a sidecar:

# If pods are running as part of a Kubernetes deployment

# Can use this strategy for daemonset as well

kubectl rollout restart deployment <deployment-name> -n <namespace>

# If pod is running standalone (not part of a deployment or replica set)

kubectl delete pod <pod-name> -n <namespace>

kubectl apply -f <pod-spec> # if pod is not restarted as part of replicaset

Now, there should be no FSM Pipy sidecar containers running as part of the applications that were once part of the mesh. Traffic is no longer managed by the FSM control plane with the mesh-name used above. During this process, your applications may experience some downtime as all the Pods are restarting.
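To restart every controller-managed workload in the namespaces that were removed from the mesh, kubectl rollout restart can be run once per workload kind. This sketch assumes the workloads are Deployments, DaemonSets, or StatefulSets. Standalone pods still need the delete and apply steps above. A kind with no objects in a namespace may simply report an error. The helper name restart_workloads is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: restart Deployments, DaemonSets and StatefulSets in
# each namespace passed as an argument, so pods come back without sidecars.
restart_workloads() {
  local ns kind
  for ns in "$@"; do
    for kind in deployment daemonset statefulset; do
      kubectl rollout restart "$kind" -n "$ns"
    done
  done
}
```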

Uninstall FSM Control Plane and Remove User Provided Resources

The FSM control plane and related components will be uninstalled in the following steps:

- Uninstall the FSM control plane

- Remove User Provided Resources

- Delete FSM Namespace

Uninstall the FSM control plane

Use the fsm CLI to uninstall the FSM control plane from a Kubernetes cluster. The following step will remove:

- FSM controller resources (deployment, service, mesh config, and RBAC)

- Prometheus, Grafana, Jaeger, and Fluent Bit resources installed by FSM

- Mutating webhook and validating webhook

- The conversion webhook fields that FSM patched into the CRDs it installs or requires (the CRDs are unpatched, not deleted). To delete cluster-wide resources, refer to Removal of FSM Cluster Wide Resources for more details.

Run fsm uninstall mesh:

# Uninstall fsm control plane components

fsm uninstall mesh --mesh-name=<mesh-name>

Uninstall FSM [mesh name: <mesh-name>] ? [y/n]: y

FSM [mesh name: <mesh-name>] uninstalled

Run fsm uninstall mesh --help for more options.

Alternatively, if you used Helm to install the control plane, run the following helm uninstall command:

helm uninstall <mesh name> --namespace <fsm namespace>

Run helm uninstall --help for more options.

Remove User Provided Resources

If any resources were provided or created for FSM at install time, they can be deleted at this point.

For example, if HashiCorp Vault was deployed for the sole purpose of managing certificates for FSM, all related resources can be deleted.

Delete FSM Namespace

When installing a mesh, the fsm CLI creates the namespace the control plane is installed into if it does not already exist. However, when uninstalling the same mesh, the namespace it lives in does not automatically get deleted by the fsm CLI. This behavior occurs because there may be resources a user created in the namespace that they may not want automatically deleted.

If the namespace was only used for FSM and there is nothing that needs to be kept around, the namespace can be deleted at the time of uninstall or later using the following command.

fsm uninstall mesh --delete-namespace

Warning: Only delete the namespace if resources in the namespace are no longer needed. For example, if fsm was installed in kube-system, deleting the namespace may delete important cluster resources and may have unintended consequences.

Removal of FSM Cluster Wide Resources

On installation FSM ensures that all the CRDs mentioned here exist in the cluster at install time. During installation, if they are not already installed, the fsm-bootstrap pod will install them before the rest of the control plane components are running. This is the same behavior when using the Helm charts to install FSM as well.

Uninstalling the mesh in both unmanaged and managed environments:

- removes FSM control plane components, including control plane pods

- removes/un-patches the conversion webhook fields from all the CRDs (which FSM adds to support multiple CR versions)

It leaves behind certain FSM resources to prevent unintended consequences for the cluster after uninstalling FSM. The resources that are left behind will depend on whether FSM was uninstalled from a managed or unmanaged cluster environment.

When uninstalling FSM, both the fsm uninstall mesh command and Helm uninstallation will not delete any FSM or SMI CRD in any cluster environment (managed and unmanaged) for primarily two reasons:

- CRDs are cluster-wide resources and may be used by other service meshes or resources running in the same cluster

- deletion of a CRD will cause all custom resources corresponding to that CRD to also be deleted

To remove cluster-wide resources that FSM installs (i.e. the meshconfig, secrets, FSM CRDs, SMI CRDs, and webhook configurations), the following command can be run during or after FSM’s uninstallation.

fsm uninstall mesh --delete-cluster-wide-resources

Warning: Deletion of a CRD will cause all custom resources corresponding to that CRD to also be deleted.

To troubleshoot FSM uninstallation, refer to the uninstall troubleshooting section

1.5 - Mesh configuration

FSM deploys a MeshConfig resource fsm-mesh-config as a part of its control plane (in the same namespace as that of the fsm-controller pod) which can be updated by the mesh owner/operator at any time. The purpose of this MeshConfig is to provide the mesh owner/operator the ability to update some of the mesh configurations based on their needs.

At the time of install, the FSM MeshConfig is deployed from a preset MeshConfig (preset-mesh-config) which can be found under charts/fsm/templates.

First, set an environment variable to refer to the namespace where fsm was installed.

export FSM_NAMESPACE=fsm-system # Replace fsm-system with the namespace where FSM is installed

To view your fsm-mesh-config from the CLI, use the kubectl get command.

kubectl get meshconfig fsm-mesh-config -n "$FSM_NAMESPACE" -o yaml

Note: Values in the MeshConfig fsm-mesh-config are persisted across upgrades.

Configure FSM MeshConfig

Kubectl Patch Command

Changes to fsm-mesh-config can be made using the kubectl patch command.

kubectl patch meshconfig fsm-mesh-config -n "$FSM_NAMESPACE" -p '{"spec":{"traffic":{"enableEgress":true}}}' --type=merge

Refer to the Config API reference for more information.

If an incorrect value is used, validations on the MeshConfig CRD will prevent the change with an error message explaining why the value is invalid.

For example, the below command shows what happens if we patch enableEgress to a non-boolean value.

kubectl patch meshconfig fsm-mesh-config -n "$FSM_NAMESPACE" -p '{"spec":{"traffic":{"enableEgress":"no"}}}' --type=merge

# Validations on the CRD will deny this change

The MeshConfig "fsm-mesh-config" is invalid: spec.traffic.enableEgress: Invalid value: "string": spec.traffic.enableEgress in body must be of type boolean: "string"
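Hand-writing the nested JSON for each patch is error-prone. The hypothetical helpers below build the merge patch from a dotted key such as spec.traffic.enableEgress and apply it to fsm-mesh-config. The value is inserted verbatim, so strings must carry their own JSON quotes. The CRD validation shown above still applies to whatever value is passed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn "a.b.c" and VALUE into {"a":{"b":{"c":VALUE}}}.
# Pass booleans, numbers and arrays as-is (true, 9411, '[6379,8080]') and
# quote strings yourself ('"30s"').
meshconfig_patch() {
  local path="$1" value="$2" json i
  local -a keys
  IFS=. read -r -a keys <<< "$path"
  json="$value"
  # Wrap the value from the innermost key outwards.
  for (( i=${#keys[@]}-1; i>=0; i-- )); do
    json="{\"${keys[i]}\":${json}}"
  done
  echo "$json"
}

# Apply the generated patch to fsm-mesh-config in $FSM_NAMESPACE.
patch_meshconfig() {
  kubectl patch meshconfig fsm-mesh-config -n "${FSM_NAMESPACE:-fsm-system}" \
    -p "$(meshconfig_patch "$1" "$2")" --type=merge
}
```

For example, patch_meshconfig spec.sidecar.configResyncInterval '"30s"' sends {"spec":{"sidecar":{"configResyncInterval":"30s"}}}.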

Kubectl Patch Command for Each Key Type

Note: <fsm-namespace> refers to the namespace where the fsm control plane is installed. By default, the fsm namespace is fsm-system.

| Key | Type | Default Value | Kubectl Patch Command Examples |
|---|---|---|---|
| spec.traffic.enableEgress | bool | false | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"traffic":{"enableEgress":true}}}' --type=merge |
| spec.traffic.enablePermissiveTrafficPolicyMode | bool | false | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"traffic":{"enablePermissiveTrafficPolicyMode":true}}}' --type=merge |
| spec.traffic.useHTTPSIngress | bool | false | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"traffic":{"useHTTPSIngress":true}}}' --type=merge |
| spec.traffic.outboundPortExclusionList | array | [] | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"traffic":{"outboundPortExclusionList":[6379,8080]}}}' --type=merge |
| spec.traffic.outboundIPRangeExclusionList | array | [] | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"traffic":{"outboundIPRangeExclusionList":["10.0.0.0/32","1.1.1.1/24"]}}}' --type=merge |
| spec.certificate.serviceCertValidityDuration | string | "24h" | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"certificate":{"serviceCertValidityDuration":"24h"}}}' --type=merge |
| spec.observability.enableDebugServer | bool | false | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"observability":{"enableDebugServer":true}}}' --type=merge |
| spec.observability.tracing.enable | bool | false | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"observability":{"tracing":{"enable":true}}}}' --type=merge |
| spec.observability.tracing.address | string | "jaeger.<fsm-namespace>.svc.cluster.local" | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"observability":{"tracing":{"address":"jaeger.<fsm-namespace>.svc.cluster.local"}}}}' --type=merge |
| spec.observability.tracing.endpoint | string | "/api/v2/spans" | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"observability":{"tracing":{"endpoint":"/api/v2/spans"}}}}' --type=merge |
| spec.observability.tracing.port | int | 9411 | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"observability":{"tracing":{"port":9411}}}}' --type=merge |
| spec.sidecar.enablePrivilegedInitContainer | bool | false | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"sidecar":{"enablePrivilegedInitContainer":true}}}' --type=merge |
| spec.sidecar.logLevel | string | "error" | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"sidecar":{"logLevel":"error"}}}' --type=merge |
| spec.sidecar.maxDataPlaneConnections | int | 0 | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"sidecar":{"maxDataPlaneConnections":0}}}' --type=merge |
| spec.sidecar.configResyncInterval | string | "0s" | kubectl patch meshconfig fsm-mesh-config -n $FSM_NAMESPACE -p '{"spec":{"sidecar":{"configResyncInterval":"30s"}}}' --type=merge |
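After a patch, the effective value of a single key can be read back with kubectl's JSONPath output, using the same dotted form as the Key column. This is a minimal sketch. get_meshconfig_value is a hypothetical name, and FSM_NAMESPACE falls back to fsm-system when it is unset.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the current value of one MeshConfig key,
# e.g. get_meshconfig_value spec.traffic.enableEgress
get_meshconfig_value() {
  kubectl get meshconfig fsm-mesh-config -n "${FSM_NAMESPACE:-fsm-system}" \
    -o "jsonpath={.$1}"
}
```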

1.6 - Reconciler Guide

This guide describes how to enable the reconciler in FSM.

How the reconciler works

The goal of building a reconciler in FSM is to ensure that resources required for the correct operation of FSM's control plane are in their desired state at all times. Resources that are installed as part of the FSM installation and have the labels flomesh.io/reconcile: true and app.kubernetes.io/name: flomesh.io will be reconciled by the reconciler.

Note: The reconciler will not operate as desired if the labels flomesh.io/reconcile: true and app.kubernetes.io/name: flomesh.io are modified or deleted on the reconcilable resources.

An update or delete event on the reconcilable resources will trigger the reconciler and it will reconcile the resource back to its desired state. Only metadata changes (excluding a name change) will be permitted on the reconcilable resources.
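The rules above can be modeled in a few lines. This is a simplified, hypothetical model of the described behavior, not FSM's reconciler code (which runs as a Kubernetes controller reacting to watch events): a resource qualifies only if both labels are present, a deleted resource is recreated, and an updated resource keeps its edited metadata while its name and every non-metadata field are restored.

```python
from copy import deepcopy
from typing import Optional

# Labels that mark a resource as reconcilable, as described above.
RECONCILE_LABELS = {
    "flomesh.io/reconcile": "true",
    "app.kubernetes.io/name": "flomesh.io",
}


def is_reconcilable(resource: dict) -> bool:
    """True only if every reconcile label is present with the expected value."""
    labels = resource.get("metadata", {}).get("labels", {})
    return all(labels.get(key) == value for key, value in RECONCILE_LABELS.items())


def reconcile(desired: dict, observed: Optional[dict]) -> dict:
    """Return the object to write back after an update or delete event.

    Deleted (observed is None): recreate the desired object.
    Updated: keep observed metadata (metadata edits are permitted) but restore
    the desired name and every field outside metadata.
    """
    if observed is None:
        return deepcopy(desired)
    result = deepcopy(desired)
    metadata = deepcopy(observed.get("metadata", {}))
    metadata["name"] = desired["metadata"]["name"]  # name changes are reverted
    result["metadata"] = metadata
    return result
```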

Resources reconciled

The resources that FSM reconciles are:

- CRDs: The CRDs installed or required by FSM will be reconciled. Since FSM manages the installation and upgrade of the CRDs it needs, FSM also reconciles them to ensure that their spec, stored versions, and served versions are always in the state FSM requires.

- MutatingWebhookConfiguration: A MutatingWebhookConfiguration is deployed as part of FSM's control plane to enable automatic sidecar injection. Because this is a critical component for pods joining the mesh, FSM reconciles this resource.

- ValidatingWebhookConfiguration: A ValidatingWebhookConfiguration is deployed as part of FSM's control plane to validate various mesh configurations. Because this resource validates configurations being applied to the mesh, FSM reconciles it.

How to install FSM with the reconciler

To install FSM with the reconciler, use the below command:

fsm install --set fsm.enableReconciler=true

fsm-preinstall[fsm-preinstall-zqmxm] Done

fsm-bootstrap[fsm-bootstrap-7f59b7bf7-vf96p] Done

fsm-injector[fsm-injector-787bc867db-m5wxk] Done

fsm-controller[fsm-controller-58d758b7fb-46v4k] Done

FSM installed successfully in namespace [fsm-system] with mesh name [fsm]

1.7 - Extending FSM

Extending FSM with Plugin Interface

In version 1.3.0 of Flomesh Service Mesh (FSM), we introduced a significant feature: Plugin. It gives developers a way to extend the functionality of the service mesh without changing FSM itself.

Today, service meshes tend to develop in one of two directions. One, exemplified by Istio, provides many ready-to-use functions and is very feature-rich. The other, exemplified by Linkerd, Flomesh FSM, and others, upholds the principle of simplicity and provides a minimal feature set that meets users' needs. Neither approach is inherently superior: the former is rich in features but inevitably adds proxy overhead, not only in resource consumption but also in learning and maintenance costs; the latter is easy to learn and use and consumes fewer resources, but its built-in functions might not cover every feature a user needs right away.

Ideally, then, a mesh would combine the low cost of a minimal feature set with the flexibility to extend it. The core of a service mesh is its data plane, so this kind of extensibility places high demands on the sidecar proxy. This is why Flomesh Service Mesh chose the programmable proxy Pipy as its sidecar proxy.

Pipy is a programmable network proxy for cloud, edge, and IoT. It is flexible, fast, small, programmable, and open source. Its modular design provides a large number of reusable filters that can be assembled into pipelines to process network data. Pipy offers a set of APIs and small, reusable filters for achieving business objectives while hiding the underlying details. In addition, Pipy scripts (program code that implements functional logic) can be delivered dynamically to Pipy instances over the network, so the proxy can be extended with new features without recompilation or restart.

Flomesh FSM extension solution

FSM provides three new CRDs for extensibility:

- Plugin: A plugin contains the code logic for the new functionality. The default functions provided by FSM are also available as plugins, but not in the form of a Plugin resource. These built-in plugins can be adjusted through the Helm values file when installing FSM. For more information, refer to the built-in plugin list in the Helm values.yaml file.

- PluginChain: A plugin chain executes plugins in sequence. The system provides four plugin chains: inbound-tcp, inbound-http, outbound-tcp, and outbound-http. They correspond to the OSI layer-4 and layer-7 processing stages of inbound and outbound traffic, respectively.

- PluginConfig: A plugin configuration provides the configuration the plugin logic needs at run time; it is sent to the FSM sidecar proxy in JSON format.

For detailed information on plugin CRDs, refer to the Plugin API document.
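To make the relationship between the three CRDs concrete, here is a conceptual Python model: a chain is picked by direction and layer, its plugins run strictly in order, and each plugin receives its configuration decoded from JSON. Real plugins are PipyJS scripts executed by the Pipy sidecar; the function names and the message shape here are hypothetical and do not reflect the Plugin API.

```python
import json
from typing import Callable, List, Tuple

# The four built-in plugin chains listed above.
CHAINS = ("inbound-tcp", "inbound-http", "outbound-tcp", "outbound-http")

# Hypothetical model: a plugin takes a message plus its decoded PluginConfig
# and returns the (possibly modified) message.
Plugin = Callable[[dict, dict], dict]


def chain_for(direction: str, is_http: bool) -> str:
    """Select the chain for a traffic direction and layer (L4 tcp / L7 http)."""
    name = f"{direction}-{'http' if is_http else 'tcp'}"
    if name not in CHAINS:
        raise ValueError(f"unknown chain: {name}")
    return name


def run_chain(plugins: List[Tuple[Plugin, str]], message: dict) -> dict:
    """Run plugins strictly in order; each receives its JSON config decoded."""
    for plugin, config_json in plugins:
        message = plugin(message, json.loads(config_json))
    return message


def add_header(message: dict, config: dict) -> dict:
    """Example plugin: add one header whose name and value come from config."""
    headers = dict(message.get("headers", {}))
    headers[config["name"]] = config["value"]
    return {**message, "headers": headers}


chain = chain_for("outbound", is_http=True)
result = run_chain(
    [(add_header, '{"name": "x-a", "value": "1"}'),
     (add_header, '{"name": "x-b", "value": "2"}')],
    {"headers": {}},
)
print(chain, result)  # outbound-http {'headers': {'x-a': '1', 'x-b': '2'}}
```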

Built-in variables

Below is a list of built-in PipyJS variables that can be imported into your custom plugins using the PipyJS import keyword.

| Variable | Type | Namespace | Applicable chains | Description |
|---|---|---|---|---|
| __protocol | string | inbound | inbound-http / inbound-tcp | connection protocol indicator |
| __port | json | inbound | inbound-http / inbound-tcp | port of inbound endpoint |
| __isHTTP2 | boolean | inbound | inbound-http | whether protocol is HTTP/2 |
| __isIngress | boolean | inbound | inbound-http | Ingress mode enabled |
| __target | string | inbound/connect-tcp | inbound-http / inbound-tcp | destination upstream |
| __plugins | json | inbound | inbound-http / inbound-tcp | JSON object of inbound plugins |
| __service | json | inbound-http-routing | inbound-http | HTTP service JSON object |
| __route | json | inbound-http-routing | inbound-http | HTTP route JSON object |
| __cluster | json | inbound-http-routing / inbound-tcp-routing | inbound-http / inbound-tcp | target cluster JSON object |
| __protocol | string | outbound | outbound-http / outbound-tcp | outbound connection protocol |
| __port | json | outbound | outbound-http / outbound-tcp | outbound port JSON object |
| __isHTTP2 | boolean | outbound | outbound-http | whether protocol is HTTP/2 |
| __isEgress | boolean | outbound | outbound-tcp | Egress mode |
| __target | string | outbound/ | outbound-http / outbound-tcp | upstream target |
| __plugins | json | outbound | outbound-http / outbound-tcp | outbound plugin JSON object |
| __service | json | outbound-http-routing | outbound-http | HTTP service JSON object |
| __route | json | outbound-http-routing | outbound-http | HTTP route JSON object |
| __cluster | json | outbound-http-routing / outbound-tcp-routing | outbound-http / outbound-tcp | target cluster JSON object |

Demo

For a simple demonstration of how to extend FSM via plugins, refer to the following demo:

2 - Application onboarding

The following guide describes how to onboard a Kubernetes microservice to an FSM instance.

Refer to the application requirements guide before onboarding applications.

Configure and Install Service Mesh Interface (SMI) policies

FSM conforms to the SMI specification. By default, FSM denies all traffic between Kubernetes services unless it is explicitly allowed by SMI policies. This behavior can be overridden with the --set=fsm.enablePermissiveTrafficPolicy=true flag on the fsm install command, which disables SMI policy enforcement while still letting traffic and services take advantage of features such as mTLS-encrypted traffic, metrics, and tracing.

For sample SMI policies, please see the following examples:

If an application in the mesh needs to communicate with the Kubernetes API server, the user needs to explicitly allow this either by using IP range exclusion or by creating an egress policy as outlined below.

First, get the Kubernetes API server cluster IP:

$ kubectl get svc -n default
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   1d

Option 1: add the Kubernetes API server's address to the list of global outbound IP ranges for exclusion. The IP address could be a cluster IP address or a public IP address and should be appropriately excluded for connectivity to the Kubernetes API server.

Add this IP to the MeshConfig so that outbound traffic to it is excluded from interception by FSM’s sidecar:

$ kubectl patch meshconfig fsm-mesh-config -n <fsm-namespace> -p '{"spec":{"traffic":{"outboundIPRangeExclusionList":["10.0.0.1/32"]}}}' --type=merge
meshconfig.config.flomesh.io/fsm-mesh-config patched

Restart the relevant pods in monitored namespaces for this change to take effect.
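Each entry in outboundIPRangeExclusionList is a CIDR range, and a destination is excluded when it falls inside any of them. The Python check below only illustrates those matching semantics with the standard ipaddress module; it is not how FSM enforces the exclusion, and the function name is hypothetical.

```python
import ipaddress
from typing import Iterable


def is_excluded(destination_ip: str, exclusion_list: Iterable[str]) -> bool:
    """True if destination_ip is inside any CIDR range in exclusion_list."""
    ip = ipaddress.ip_address(destination_ip)
    return any(ip in ipaddress.ip_network(cidr, strict=False) for cidr in exclusion_list)


# Value patched into outboundIPRangeExclusionList above.
exclusions = ["10.0.0.1/32"]
print(is_excluded("10.0.0.1", exclusions))  # True: the API server IP itself
print(is_excluded("10.0.0.2", exclusions))  # False: a /32 covers one address
```

A /32 range excludes exactly one address, which is why it is the usual choice for a single API server IP; a wider range such as 10.0.0.0/16 would exclude every address in that block.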

Option 2: apply an Egress policy to allow access to the Kubernetes API server over HTTPS

Note: when using an Egress policy, the Kubernetes API service must not be in a namespace that FSM manages.

- Enable egress policy if not enabled:

kubectl patch meshconfig fsm-mesh-config -n <fsm-namespace> -p '{"spec":{"featureFlags":{"enableEgressPolicy":true}}}' --type=merge

- Apply an Egress policy to allow the application's ServiceAccount to access the Kubernetes API server cluster IP found above. For example:

kubectl apply -f - <<EOF
kind: Egress
apiVersion: policy.flomesh.io/v1alpha1
metadata:
  name: k8s-server-egress
  namespace: test
spec:
  sources:
  - kind: ServiceAccount
    name: <app pod's service account name>
    namespace: <app pod's service account namespace>
  ipAddresses:
  - 10.0.0.1/32
  ports:
  - number: 443
    protocol: https
EOF

Onboard Kubernetes Namespaces to FSM

To onboard a namespace containing applications to be managed by FSM, run the fsm namespace add command:

$ fsm namespace add <namespace> --mesh-name <mesh-name>

By default, the fsm namespace add command enables automatic sidecar injection for pods in the namespace.

To disable automatic sidecar injection as part of enrolling a namespace into the mesh, use fsm namespace add <namespace> --disable-sidecar-injection. Once a namespace has been onboarded, pods can be enrolled in the mesh by configuring automatic sidecar injection. See the Sidecar Injection document for more details.

Deploy new applications or redeploy existing applications

By default, new deployments in onboarded namespaces are enabled for automatic sidecar injection. This means that when a new Pod is created in a managed namespace, FSM will automatically inject the sidecar proxy into the Pod. Existing deployments need to be restarted so that FSM can automatically inject the sidecar proxy upon Pod re-creation. Pods managed by a Deployment can be restarted using the kubectl rollout restart deploy command.

In order to route protocol-specific traffic correctly to service ports, configure the application protocol to use. Refer to the application protocol selection guide to learn more.

Note: Removing Namespaces

Namespaces can be removed from the FSM mesh with the fsm namespace remove command:

fsm namespace remove <namespace>

Please note: the fsm namespace remove command only tells FSM to stop applying updates to the sidecar proxy configurations in the namespace. It does not remove the proxy sidecars. This means the existing proxy configuration will continue to be used, but it will not be updated by the FSM control plane. If you wish to remove the proxies from all pods, remove the pods' namespaces from the FSM mesh with the CLI and reinstall all the pod workloads.

2.1 - Prerequisites

Security Contexts

- Do not run applications with the user ID (UID) value of 1500. This is reserved for the Pipy proxy sidecar container injected into pods by FSM’s sidecar injector.

- If security context runAsNonRoot is set to true at the pod level, a runAsUser value must be provided either for the pod or for each container. For example:

  securityContext:
    runAsNonRoot: true
    runAsUser: 1200

  If the UID is omitted, application containers may attempt to run as the root user by default, causing a conflict with the pod's security context.

- Additional capabilities are not required.

Note: the FSM init container is programmed to run as root and add the NET_ADMIN capability, as it requires these security contexts to finish scheduling. These values are not changed by application security contexts.

Ports

Do not use the following ports as they are used by the Pipy sidecar.

| Port | Description |
|---|---|
| 15000 | Pipy Admin Port |
| 15001 | Pipy Outbound Listener Port |
| 15003 | Pipy Inbound Listener Port |
| 15010 | Pipy Prometheus Inbound Listener Port |
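The security-context and port rules in this section can be checked before deploying. The sketch below is a hypothetical pre-deployment check, not an FSM tool: it takes a simplified pod spec as a Python dict and reports a reserved UID (1500), a reserved Pipy port, or runAsNonRoot: true without a runAsUser at the pod level or on every container.

```python
from typing import List

RESERVED_UID = 1500                             # used by the Pipy sidecar
RESERVED_PORTS = {15000, 15001, 15003, 15010}   # Pipy admin/listener ports


def check_pod(pod_spec: dict) -> List[str]:
    """Return the problems found in a simplified Kubernetes pod spec."""
    problems = []
    pod_ctx = pod_spec.get("securityContext", {})
    containers = pod_spec.get("containers", [])

    for c in containers:
        ctx = c.get("securityContext", {})
        # A container-level runAsUser overrides the pod-level one.
        uid = ctx.get("runAsUser", pod_ctx.get("runAsUser"))
        if uid == RESERVED_UID:
            problems.append(f"{c['name']}: UID {RESERVED_UID} is reserved for the sidecar")
        for port in c.get("ports", []):
            if port.get("containerPort") in RESERVED_PORTS:
                problems.append(f"{c['name']}: port {port['containerPort']} is reserved")

    # runAsNonRoot at pod level needs runAsUser on the pod or on every container.
    if pod_ctx.get("runAsNonRoot") is True and "runAsUser" not in pod_ctx:
        missing = [c["name"] for c in containers
                   if "runAsUser" not in c.get("securityContext", {})]
        if missing:
            problems.append("runAsNonRoot is true but runAsUser is missing for: "
                            + ", ".join(missing))
    return problems


good = {"securityContext": {"runAsNonRoot": True, "runAsUser": 1200},
        "containers": [{"name": "app", "ports": [{"containerPort": 8080}]}]}
print(check_pod(good))  # []
```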

2.2 - Namespace addition

Overview

When setting up an FSM control plane (also referred to as a “mesh”), one can also enroll a set of Kubernetes namespaces to the mesh. Enrolling a namespace to FSM allows FSM to monitor the resources within that Namespace whether they be applications deployed in Pods, Services, or even traffic policies represented as SMI resources.

Only one mesh can monitor a namespace, so this is something to watch out for when there are multiple instances of FSM within the same Kubernetes cluster. When applying policies to applications, FSM only assesses resources in monitored namespaces, so it is important to enroll the namespaces where your applications are deployed with the correct instance of FSM, using the correct mesh name. Enrolling a namespace also optionally allows metrics to be collected for resources in the given namespace and Pods in the namespace to be automatically injected with sidecar proxy containers. These are all features that help FSM provide functionality for traffic management and observability. Scoping this functionality at the namespace level allows teams to organize which segments of their cluster should be part of which mesh.

Namespace monitoring, automatic sidecar injection, and metrics collection are controlled by adding certain labels and annotations to a Kubernetes namespace. This can be done manually or with the fsm CLI, although the fsm CLI is the recommended approach. The presence of the label flomesh.io/monitored-by=<mesh-name> allows an FSM control plane with the given mesh-name to monitor all resources within that namespace. The annotation flomesh.io/sidecar-injection=enabled enables FSM to automatically inject sidecar proxy containers in all Pods created within that namespace. The metrics annotation flomesh.io/metrics=enabled allows FSM to collect metrics on resources within a Namespace.
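The three settings can be read straight from a namespace's labels and annotations. The hypothetical helper below takes a Namespace object as a Python dict (for example, parsed from kubectl get namespace <namespace> -o json) and reports each setting; it inspects the same keys the troubleshooting steps later in this guide check with kubectl.

```python
MONITORED_BY = "flomesh.io/monitored-by"        # label
SIDECAR_INJECTION = "flomesh.io/sidecar-injection"  # annotation
METRICS = "flomesh.io/metrics"                  # annotation


def namespace_status(namespace: dict, mesh_name: str) -> dict:
    """Summarize mesh membership, injection, and metrics for one namespace."""
    meta = namespace.get("metadata", {})
    labels = meta.get("labels", {})
    annotations = meta.get("annotations", {})
    return {
        # Monitored only by the mesh whose name matches the label value.
        "monitored": labels.get(MONITORED_BY) == mesh_name,
        "sidecar_injection": annotations.get(SIDECAR_INJECTION) == "enabled",
        "metrics": annotations.get(METRICS) == "enabled",
    }


ns = {"metadata": {"name": "bookstore",
                   "labels": {MONITORED_BY: "fsm"},
                   "annotations": {SIDECAR_INJECTION: "enabled"}}}
print(namespace_status(ns, "fsm"))
```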

See how to use the FSM CLI to manage namespace monitoring below.

Adding a Namespace to the FSM Control Plane

Add a namespace for monitoring and sidecar injection to the mesh with the following command:

fsm namespace add <namespace>

To explicitly disable sidecar injection while adding the namespace, use the --disable-sidecar-injection flag:

fsm namespace add <namespace> --disable-sidecar-injection

Remove a Namespace from the FSM control plane

Remove a namespace from being monitored by the mesh and disable sidecar injection with the following command:

fsm namespace remove <namespace>

This command will remove the FSM specific labels and annotations on the namespace thus removing it from the mesh.

Enable Metrics for a Namespace

fsm metrics enable --namespace <namespace>

Ignore a Namespace

There may be namespaces in a cluster that should never be part of a mesh. To explicitly exclude a namespace from FSM:

fsm namespace ignore <namespace>

List Namespaces Part of a Mesh

To list namespaces within a specific mesh:

fsm namespace list --mesh-name=<mesh-name>

Troubleshooting Guide

Policy Issues

If you’re not seeing changes in SMI policies being applied to resources in a namespace, ensure the namespace is enrolled in the correct mesh:

fsm namespace list --mesh-name=<mesh-name>

NAMESPACE MESH SIDECAR-INJECTION

<namespace> fsm enabled

If the namespace does not show up, check the labels on the namespace using kubectl:

kubectl get namespace <namespace> --show-labels

NAME STATUS AGE LABELS

<namespace> Active 36s flomesh.io/monitored-by=<mesh-name>

If the label value is not the expected mesh-name, remove the namespace from the mesh and add it back using the correct mesh-name.

fsm namespace remove <namespace> --mesh-name=<current-mesh-name>

fsm namespace add <namespace> --mesh-name=<expected-mesh-name>

If the monitored-by label is not present, it was either not added to the mesh or there was an error when adding it to the mesh.

Add the namespace to the mesh either with the fsm CLI or using kubectl:

fsm namespace add <namespace> --mesh-name=<mesh-name>

kubectl label namespace <namespace> flomesh.io/monitored-by=<mesh-name>

Issues with Automatic Sidecar Injection

If you’re not seeing your Pods being automatically injected with sidecar containers, ensure that sidecar injection is enabled:

fsm namespace list --mesh-name=<mesh-name>

NAMESPACE MESH SIDECAR-INJECTION

<namespace> fsm enabled

If the namespace does not show up, check the annotations on the namespace using kubectl:

kubectl get namespace <namespace> -o=jsonpath='{.metadata.annotations.flomesh\.io\/sidecar-injection}'

If the output is anything other than enabled, either add namespace using the fsm CLI or add the annotation with kubectl:

fsm namespace add <namespace> --mesh-name=<mesh-name> --disable-sidecar-injection=false

kubectl annotate namespace <namespace> flomesh.io/sidecar-injection=enabled --overwrite

Issues with Metrics Collection

If you’re not seeing metrics for resources in a particular namespace, ensure metrics are enabled:

kubectl get namespace <namespace> -o=jsonpath='{.metadata.annotations.flomesh\.io\/metrics}'

If the output is anything other than enabled, enable metrics for the namespace using the fsm CLI or add the annotation with kubectl:

fsm metrics enable --namespace <namespace>

kubectl annotate namespace <namespace> flomesh.io/metrics=enabled --overwrite

Other Issues

If you’re running into issues that have not been resolved with the debugging techniques above, please open a GitHub issue on the repository.

2.3 - Sidecar Injection

Services participating in the service mesh communicate via sidecar proxies installed on pods backing the services. The following sections describe the sidecar injection workflow in FSM.

Automatic Sidecar Injection

Automatic sidecar injection is currently the only way to inject sidecars into the service mesh. Sidecars can be automatically injected into applicable Kubernetes pods using a mutating webhook admission controller provided by FSM.

Automatic sidecar injection can be configured per namespace as a part of enrolling a namespace into the mesh, or later using the Kubernetes API. Automatic sidecar injection can be enabled either on a per namespace or per pod basis by annotating the namespace or pod resource with the sidecar injection annotation. Individual pods and namespaces can be explicitly configured to either enable or disable automatic sidecar injection, giving users the flexibility to control sidecar injection on pods and namespaces.

Enabling Automatic Sidecar Injection

Prerequisites:

- The namespace to which the pods belong must be a monitored namespace that is added to the mesh using the fsm namespace add command.

- The namespace to which the pods belong must not be set to be ignored using the fsm namespace ignore command.

- The namespace to which the pods belong must not have a label with key name and value corresponding to the FSM control plane namespace. For example, a namespace with a label name: fsm-system, where fsm-system is the control plane namespace, cannot have sidecar injection enabled for pods in this namespace.

- The pod must not have hostNetwork: true in the pod spec. Pods with hostNetwork: true are not injected with a sidecar since doing so can result in routing failures in the host network.

Automatic sidecar injection can be enabled in the following ways:

- While enrolling a namespace into the mesh using the fsm CLI: fsm namespace add <namespace>. Automatic sidecar injection is enabled by default with this command.

- Using kubectl to annotate individual namespaces and pods to enable sidecar injection:

  # Enable sidecar injection on a namespace
  $ kubectl annotate namespace <namespace> flomesh.io/sidecar-injection=enabled

  # Enable sidecar injection on a pod
  $ kubectl annotate pod <pod> flomesh.io/sidecar-injection=enabled

- Setting the sidecar injection annotation to enabled in the Kubernetes resource spec for a namespace or pod:

  metadata:
    name: test
    annotations:
      'flomesh.io/sidecar-injection': 'enabled'

Pods will be injected with a sidecar ONLY if the following conditions are met:

- The namespace to which the pod belongs is a monitored namespace.

- The pod is explicitly enabled for the sidecar injection, OR the namespace to which the pod belongs is enabled for the sidecar injection and the pod is not explicitly disabled for sidecar injection.
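The prerequisites and conditions above combine into a single decision, sketched below as a Python function. It encodes only the rules stated in this section and is not the code of FSM's mutating webhook. Because this guide does not describe how fsm namespace ignore marks a namespace, the sketch takes that fact as a hypothetical ignored parameter instead of guessing a label.

```python
from typing import Optional

ANNOTATION = "flomesh.io/sidecar-injection"
MONITORED_BY = "flomesh.io/monitored-by"


def injection_setting(obj: dict) -> Optional[str]:
    """Return "enabled", "disabled", or None if the annotation is absent."""
    return obj.get("metadata", {}).get("annotations", {}).get(ANNOTATION)


def should_inject(pod: dict, namespace: dict, mesh_name: str,
                  control_plane_ns: str, ignored: bool = False) -> bool:
    labels = namespace.get("metadata", {}).get("labels", {})
    # Prerequisites listed earlier in this section.
    if labels.get(MONITORED_BY) != mesh_name:   # not a monitored namespace
        return False
    if ignored:                                 # fsm namespace ignore was used
        return False
    if labels.get("name") == control_plane_ns:  # control plane namespace label
        return False
    if pod.get("spec", {}).get("hostNetwork") is True:
        return False
    # An explicit pod setting wins; otherwise inherit the namespace setting.
    pod_setting = injection_setting(pod)
    if pod_setting == "enabled":
        return True
    return injection_setting(namespace) == "enabled" and pod_setting != "disabled"
```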

Explicitly Disabling Automatic Sidecar Injection on Namespaces

Namespaces can be disabled for automatic sidecar injection in the following ways:

- While enrolling a namespace into the mesh using the fsm CLI: fsm namespace add <namespace> --disable-sidecar-injection. If the namespace was previously enabled for sidecar injection, it will be disabled after running this command.

- Using kubectl to annotate individual namespaces to disable sidecar injection:

  # Disable sidecar injection on a namespace
  $ kubectl annotate namespace <namespace> flomesh.io/sidecar-injection=disabled

Explicitly Disabling Automatic Sidecar Injection on Pods

Individual pods can be explicitly disabled for sidecar injection. This is useful when a namespace is enabled for sidecar injection but specific pods should not be injected with sidecars.

- Using kubectl to annotate individual pods to disable sidecar injection:

  # Disable sidecar injection on a pod
  $ kubectl annotate pod <pod> flomesh.io/sidecar-injection=disabled

- Setting the sidecar injection annotation to disabled in the Kubernetes resource spec for the pod:

  metadata:
    name: test
    annotations:
      'flomesh.io/sidecar-injection': 'disabled'

Automatic sidecar injection is implicitly disabled for a namespace when it is removed from the mesh using the fsm namespace remove command.

2.4 - Application Protocol Selection

FSM is capable of routing different application protocols such as HTTP, TCP, and gRPC differently. The following guide describes how to configure service ports to specify the application protocol to use for traffic filtering and routing.

Configuring the application protocol

Kubernetes services expose one or more ports. A port exposed by an application running the service can serve a specific application protocol such as HTTP, TCP, gRPC etc. Since FSM filters and routes traffic for different application protocols differently, a configuration on the Kubernetes service object is necessary to convey to FSM how traffic directed to a service port must be routed.

In order to determine the application protocol served by a service’s port, FSM expects the appProtocol field on the service’s port to be set.

FSM supports the following application protocols for service ports:

- http: For HTTP-based filtering and routing of traffic

- tcp: For TCP-based filtering and routing of traffic

- tcp-server-first: For TCP-based filtering and routing of traffic where the server initiates communication with a client, such as MySQL, PostgreSQL, and others

- gRPC: For HTTP/2-based filtering and routing of gRPC traffic

The application protocol configuration described is applicable to both SMI and Permissive traffic policy modes.
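Before applying a Service, it can help to confirm that every port declares one of the documented values. The hypothetical helper below reads a Service manifest as a Python dict and reports, per port, the documented behavior or a problem. Values are matched exactly as spelled in the list above (including gRPC); the sketch does not model what FSM does when appProtocol is missing or unrecognized, since this guide does not specify it.

```python
# appProtocol values documented above, mapped to how FSM treats the traffic.
SUPPORTED = {
    "http": "HTTP-based filtering and routing",
    "tcp": "TCP-based filtering and routing",
    "tcp-server-first": "TCP routing where the server speaks first (e.g. MySQL)",
    "gRPC": "HTTP/2-based filtering and routing of gRPC traffic",
}


def port_protocols(service: dict) -> dict:
    """Report, per port, the documented behavior or a problem to fix."""
    report = {}
    for port in service["spec"]["ports"]:
        key = port.get("name", str(port["port"]))
        proto = port.get("appProtocol")
        if proto is None:
            report[key] = "appProtocol not set"
        elif proto in SUPPORTED:
            report[key] = SUPPORTED[proto]
        else:
            report[key] = f"unsupported appProtocol: {proto}"
    return report


svc = {"spec": {"ports": [
    {"port": 8080, "name": "web", "appProtocol": "http"},
    {"port": 3306, "name": "db", "appProtocol": "tcp-server-first"},
    {"port": 9000},
]}}
print(port_protocols(svc))
```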

Examples

Consider the following SMI traffic access and traffic specs policies:

- A TCPRoute resource named tcp-route that specifies the port TCP traffic should be allowed on.

- An HTTPRouteGroup resource named http-route that specifies the HTTP routes for which HTTP traffic should be allowed.

- A TrafficTarget resource named test that allows pods in the service account sa-2 to access pods in the service account sa-1 for the specified TCP and HTTP rules.

kind: TCPRoute
metadata:
  name: tcp-route
spec:
  matches:
    ports:
    - 8080
---
kind: HTTPRouteGroup
metadata:
  name: http-route
spec:
  matches:
  - name: version
    pathRegex: "/version"
    methods:
    - GET
---
kind: TrafficTarget
metadata:
  name: test
  namespace: default
spec:
  destination:
    kind: ServiceAccount
    name: sa-1 # There are 2 services under this service account: service-1 and service-2
    namespace: default
  rules:
  - kind: TCPRoute
    name: tcp-route
  - kind: HTTPRouteGroup
    name: http-route
  sources:
  - kind: ServiceAccount
    name: sa-2
    namespace: default

Kubernetes service resources should explicitly specify the application protocol being served by the service’s ports using the appProtocol field.

A service service-1 backed by a pod in service account sa-1 serving http application traffic should be defined as follows:

kind: Service
metadata:
  name: service-1
  namespace: default
spec:
  ports:
  - port: 8080
    name: some-port
    appProtocol: http

A service service-2 backed by a pod in service account sa-1 serving raw TCP application traffic should be defined as follows:

kind: Service
metadata:
  name: service-2
  namespace: default
spec:
  ports:
  - port: 8080
    name: some-port
    appProtocol: tcp

3 - Traffic Management

3.1 - Permissive Mode

Permissive traffic policy mode in FSM is a mode where SMI traffic access policy enforcement is bypassed. In this mode, FSM automatically discovers services that are a part of the service mesh and programs traffic policy rules on each Pipy proxy sidecar to be able to communicate with these services.

When to use permissive traffic policy mode

Since permissive traffic policy mode bypasses SMI traffic access policy enforcement, it is suitable for use when connectivity between applications within the service mesh should flow as before the applications were enrolled into the mesh. This mode is suitable in environments where explicitly defining traffic access policies for connectivity between applications is not feasible.

A common use case for enabling permissive traffic policy mode is to support gradual onboarding of applications into the mesh without breaking application connectivity. Traffic routing between application services is automatically set up by the FSM controller through service discovery. Wildcard traffic policies are set up on each Pipy proxy sidecar to allow traffic flow to services within the mesh.

The alternative to permissive traffic policy mode is SMI traffic policy mode, where traffic between applications is denied by default and explicit SMI traffic policies are necessary to allow application connectivity. When policy enforcement is necessary, SMI traffic policy mode must be used instead.

Configuring permissive traffic policy mode

Permissive traffic policy mode can be enabled or disabled at the time of FSM install, or after FSM has been installed.

Enabling permissive traffic policy mode

Enabling permissive traffic policy mode implicitly disables SMI traffic policy mode.

During FSM install using the --set flag:

fsm install --set fsm.enablePermissiveTrafficPolicy=true

After FSM has been installed:

# Assumes FSM is installed in the fsm-system namespace

kubectl patch meshconfig fsm-mesh-config -n fsm-system -p '{"spec":{"traffic":{"enablePermissiveTrafficPolicyMode":true}}}' --type=merge

Disabling permissive traffic policy mode

Disabling permissive traffic policy mode implicitly enables SMI traffic policy mode.

During FSM install using the --set flag:

fsm install --set fsm.enablePermissiveTrafficPolicy=false

After FSM has been installed:

# Assumes FSM is installed in the fsm-system namespace

kubectl patch meshconfig fsm-mesh-config -n fsm-system -p '{"spec":{"traffic":{"enablePermissiveTrafficPolicyMode":false}}}' --type=merge

How it works

When permissive traffic policy mode is enabled, the FSM controller discovers all services that are a part of the mesh and programs wildcard traffic routing rules on each Pipy proxy sidecar to reach every other service in the mesh. Additionally, each proxy fronting workloads that are associated with a service is configured to accept all traffic destined to the service. Depending on the application protocol of the service (HTTP, TCP, gRPC, etc.), appropriate traffic routing rules are configured on the Pipy sidecar to allow all traffic of that particular type.

Refer to the Permissive traffic policy mode demo to learn more.

Pipy configurations

In permissive mode, the FSM controller programs wildcard routes for client applications to communicate with services. Following are the Pipy inbound and outbound filter and route configuration snippets from the curl and httpbin sidecar proxies.

Outbound Pipy configuration on the curl client pod:

Outbound HTTP filter chain corresponding to the httpbin service:

{ "Outbound": { "TrafficMatches": { "14001": [ { "DestinationIPRanges": [ "10.43.103.59/32" ], "Port": 14001, "Protocol": "http", "HttpHostPort2Service": { "httpbin": "httpbin.app.svc.cluster.local", "httpbin.app": "httpbin.app.svc.cluster.local", "httpbin.app.svc": "httpbin.app.svc.cluster.local", "httpbin.app.svc.cluster": "httpbin.app.svc.cluster.local", "httpbin.app.svc.cluster.local": "httpbin.app.svc.cluster.local", "httpbin.app.svc.cluster.local:14001": "httpbin.app.svc.cluster.local", "httpbin.app.svc.cluster:14001": "httpbin.app.svc.cluster.local", "httpbin.app.svc:14001": "httpbin.app.svc.cluster.local", "httpbin.app:14001": "httpbin.app.svc.cluster.local", "httpbin:14001": "httpbin.app.svc.cluster.local" }, "HttpServiceRouteRules": { "httpbin.app.svc.cluster.local": { ".*": { "Headers": null, "Methods": null, "TargetClusters": { "app/httpbin|14001": 100 }, "AllowedServices": null } } }, "TargetClusters": null, "AllowedEgressTraffic": false, "ServiceIdentity": "default.app.cluster.local" } ] } } }

Outbound route configuration:

"HttpServiceRouteRules": { "httpbin.app.svc.cluster.local": { ".*": { "Headers": null, "Methods": null, "TargetClusters": { "app/httpbin|14001": 100 }, "AllowedServices": null } } }

Inbound Pipy configuration on the httpbin service pod:

Inbound HTTP filter chain corresponding to the httpbin service:

{ "Inbound": { "TrafficMatches": { "14001": { "SourceIPRanges": null, "Port": 14001, "Protocol": "http", "HttpHostPort2Service": { "httpbin": "httpbin.app.svc.cluster.local", "httpbin.app": "httpbin.app.svc.cluster.local", "httpbin.app.svc": "httpbin.app.svc.cluster.local", "httpbin.app.svc.cluster": "httpbin.app.svc.cluster.local", "httpbin.app.svc.cluster.local": "httpbin.app.svc.cluster.local", "httpbin.app.svc.cluster.local:14001": "httpbin.app.svc.cluster.local", "httpbin.app.svc.cluster:14001": "httpbin.app.svc.cluster.local", "httpbin.app.svc:14001": "httpbin.app.svc.cluster.local", "httpbin.app:14001": "httpbin.app.svc.cluster.local", "httpbin:14001": "httpbin.app.svc.cluster.local" }, "HttpServiceRouteRules": { "httpbin.app.svc.cluster.local": { ".*": { "Headers": null, "Methods": null, "TargetClusters": { "app/httpbin|14001|local": 100 }, "AllowedServices": null } } }, "TargetClusters": null, "AllowedEndpoints": null } } } }

Inbound route configuration:

"HttpServiceRouteRules": { "httpbin.app.svc.cluster.local": { ".*": { "Headers": null, "Methods": null, "TargetClusters": { "app/httpbin|14001|local": 100 }, "AllowedServices": null } } }

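To see how the two maps in the outbound configuration work together, the sketch below resolves a request against a trimmed copy of the curl pod's configuration: HttpHostPort2Service turns the Host header into a service name, and HttpServiceRouteRules maps that service to weighted target clusters. It assumes, for illustration only, that each rule key is a regular expression matched against the full request path; this is not the Pipy script FSM actually runs.

```python
import json
import re

# Trimmed copy of the outbound configuration shown above (curl pod).
OUTBOUND = json.loads("""
{
  "HttpHostPort2Service": {
    "httpbin": "httpbin.app.svc.cluster.local",
    "httpbin:14001": "httpbin.app.svc.cluster.local",
    "httpbin.app.svc.cluster.local": "httpbin.app.svc.cluster.local"
  },
  "HttpServiceRouteRules": {
    "httpbin.app.svc.cluster.local": {
      ".*": {"Headers": null, "Methods": null,
             "TargetClusters": {"app/httpbin|14001": 100},
             "AllowedServices": null}
    }
  }
}
""")


def resolve(host: str, path: str, config: dict = OUTBOUND):
    """Return (service, weighted target clusters), or None if nothing matches."""
    service = config["HttpHostPort2Service"].get(host)
    if service is None:
        return None
    for pattern, rule in config["HttpServiceRouteRules"].get(service, {}).items():
        # The wildcard key ".*" matches every path, which is what makes the
        # permissive-mode route allow all HTTP traffic to the service.
        if re.fullmatch(pattern, path):
            return service, rule["TargetClusters"]
    return None


print(resolve("httpbin:14001", "/get"))
```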
3.2 - Traffic Redirection

iptables is a traffic interception tool based on the Linux kernel. It can control traffic by filtering rules. Its advantages include:

- Universality: The iptables tool has been widely used in Linux operating systems, so most Linux users are familiar with its usage.

- Stability: iptables has long been part of the Linux kernel, so it has a high degree of stability.

- Flexibility: iptables can be flexibly configured according to needs to control network traffic.

However, iptables also has some disadvantages:

- Difficult to debug: Due to the complexity of the iptables tool itself, it is relatively difficult to debug.

- Performance issues: Unpredictable latency and reduced performance as the number of services grows.

- Issues with handling complex traffic: When it comes to handling complex traffic, iptables may not be suitable because its rule processing is not flexible enough.

eBPF (extended Berkeley Packet Filter) is a Linux kernel technology that runs sandboxed custom programs inside the kernel, which makes it possible to intercept and analyze traffic there. The advantages of eBPF include:

- Flexibility: eBPF can use custom programs to intercept and analyze traffic, so it is more flexible.

- Scalability: eBPF programs can be loaded and unloaded dynamically, so it scales more easily.

- Efficiency: eBPF programs process traffic directly in kernel space, which gives them higher performance.

However, eBPF also has some disadvantages:

- Steeper learning curve: eBPF is relatively new compared to iptables, so it takes more effort to learn.

- Complexity: Developing custom eBPF programs may be more complex.

Overall, iptables is more suitable for simple traffic filtering and management, while eBPF is more suitable for complex traffic interception and analysis scenarios that require higher flexibility and performance.

3.2.1 - Iptables Redirection

FSM leverages iptables to intercept and redirect traffic to and from pods participating in the service mesh to the Pipy proxy sidecar container running on each pod. Traffic redirected to the Pipy proxy sidecar is filtered and routed based on service mesh traffic policies.

For a more detailed comparison of iptables and eBPF, refer to Traffic Redirection.

How it works

FSM's sidecar injector service, fsm-injector, injects a Pipy proxy sidecar into every pod created within the service mesh. Along with the Pipy proxy sidecar, fsm-injector also injects an init container, a specialized container that runs before any application containers in a pod. The injected init container bootstraps the application pod with traffic redirection rules so that all outbound TCP traffic from the pod and all inbound TCP traffic to the pod is redirected to the Pipy proxy sidecar running in that pod. The init container sets up this redirection by running a set of iptables commands.

Ports reserved for traffic redirection

FSM reserves a set of port numbers to perform traffic redirection and to provide admin access to the Pipy proxy sidecar. Application containers running in the mesh must not use these port numbers; using any of them will prevent the Pipy proxy sidecar from functioning correctly.

Following are the port numbers that are reserved for use by FSM:

- 15000: used by the Pipy admin interface, exposed over localhost, to return current configuration files.

- 15001: used by the Pipy outbound listener to accept and proxy outbound traffic sent by applications within the pod.

- 15003: used by the Pipy inbound listener to accept and proxy inbound traffic entering the pod destined to applications within the pod.

- 15010: used by the Pipy inbound Prometheus listener to accept and proxy inbound traffic pertaining to scraping Pipy's Prometheus metrics.

- 15901: used by Pipy to serve rewritten HTTP liveness probes.

- 15902: used by Pipy to serve rewritten HTTP readiness probes.

- 15903: used by Pipy to serve rewritten HTTP startup probes.

The following are the port numbers that are reserved for use by FSM and allow traffic to bypass Pipy:

- 15904: used by fsm-healthcheck to serve tcpSocket health probes rewritten to httpGet health probes.
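Since a port collision typically surfaces only as a misbehaving sidecar, it can help to check a workload's declared ports before onboarding it. The following is a minimal Bash sketch that assumes the lists above are the complete set of reserved ports for your FSM version. `is_fsm_reserved_port` and `check_ports` are hypothetical helpers and are not part of the fsm CLI.

```bash
# Sketch: flag ports that collide with the ports FSM reserves (lists above).
# Hypothetical helpers; adjust FSM_RESERVED_PORTS if your FSM version differs.
FSM_RESERVED_PORTS=(15000 15001 15003 15010 15901 15902 15903 15904)

# is_fsm_reserved_port PORT -> exit status 0 if PORT is reserved, 1 otherwise
is_fsm_reserved_port() {
  local port="$1" reserved
  for reserved in "${FSM_RESERVED_PORTS[@]}"; do
    if [[ "$port" == "$reserved" ]]; then
      return 0
    fi
  done
  return 1
}

# check_ports PORT... -> prints each conflicting port, returns 1 if any conflict
check_ports() {
  local port conflict=0
  for port in "$@"; do
    if is_fsm_reserved_port "$port"; then
      echo "conflict: port $port is reserved by FSM"
      conflict=1
    fi
  done
  return "$conflict"
}
```

For example, `check_ports $(kubectl get deployment <name> -o jsonpath='{.spec.template.spec.containers[*].ports[*].containerPort}')` checks the ports declared in a Deployment's pod template. Reading the template instead of a running pod avoids picking up containers added at injection time. Ports that an application opens without declaring them are not caught by this check.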

Application User ID (UID) reserved for traffic redirection

FSM reserves the user ID (UID) value 1500 for the Pipy proxy sidecar container. This user ID is of utmost importance while performing traffic interception and redirection to ensure the redirection does not result in a loop. The user ID value 1500 is used to program redirection rules to ensure redirected traffic from Pipy is not redirected back to itself!

Application containers must not use the reserved user ID value of 1500.
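A similar pre-onboarding check can be applied to the reserved UID. The Bash sketch below assumes that the UIDs to check are taken from `runAsUser` in the pod template's security contexts. `check_uids` is a hypothetical helper. A UID set only through the container image's `USER` instruction is not visible to this check.

```bash
# Sketch: reject UIDs that collide with the UID FSM reserves for the Pipy sidecar.
# check_uids is a hypothetical helper, not part of the fsm CLI.
FSM_PROXY_UID=1500

# check_uids UID... -> prints each conflicting UID, returns 1 if any conflict
check_uids() {
  local uid conflict=0
  for uid in "$@"; do
    if [[ "$uid" == "$FSM_PROXY_UID" ]]; then
      echo "conflict: UID $uid is reserved for the Pipy sidecar"
      conflict=1
    fi
  done
  return "$conflict"
}
```

For example, `check_uids $(kubectl get deployment <name> -o jsonpath='{.spec.template.spec.securityContext.runAsUser} {.spec.template.spec.containers[*].securityContext.runAsUser}')` checks both the pod-level and the container-level settings.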

Types of traffic intercepted

Currently, FSM intercepts only inbound and outbound TCP traffic on each pod and redirects it to the Pipy proxy sidecar. This includes raw TCP traffic and any application traffic that uses TCP as its underlying transport protocol, such as HTTP and gRPC. UDP and ICMP traffic, although iptables is capable of intercepting it, is not intercepted or redirected to the Pipy proxy sidecar.

Iptables chains and rules

FSM’s fsm-injector service programs the init container to set up a set of iptables chains and rules to perform traffic interception and redirection. The following section provides details on the responsibility of these chains and rules.

FSM leverages four chains to perform traffic interception and redirection:

- PROXY_INBOUND: chain to intercept inbound traffic entering the pod

- PROXY_IN_REDIRECT: chain to redirect intercepted inbound traffic to the sidecar proxy's inbound listener

- PROXY_OUTPUT: chain to intercept outbound traffic from applications within the pod

- PROXY_REDIRECT: chain to redirect intercepted outbound traffic to the sidecar proxy's outbound listener

Each of the chains above is programmed with rules to intercept application traffic and redirect it to the Pipy proxy sidecar.
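The following Bash sketch makes the roles of the four chains concrete. It prints, but does not execute, an approximation of the nat-table rules that such an init container could program. It assumes the listener ports and UID documented above. The exact rules FSM's init container generates, and their order, may differ. `print_redirect_rules` is a hypothetical helper.

```bash
# Dry-run sketch: echoes iptables commands instead of running them.
# Assumed values, taken from the reserved ports and UID documented above.
PROXY_UID=1500
OUTBOUND_LISTENER_PORT=15001
INBOUND_LISTENER_PORT=15003
INBOUND_BYPASS_PORTS=(15010 15901 15902 15903 15904)

print_redirect_rules() {
  local chain port

  # Create the four custom chains in the nat table
  for chain in PROXY_INBOUND PROXY_IN_REDIRECT PROXY_OUTPUT PROXY_REDIRECT; do
    echo "iptables -t nat -N $chain"
  done

  # Outbound: redirect intercepted TCP to the Pipy outbound listener
  echo "iptables -t nat -A PROXY_REDIRECT -p tcp -j REDIRECT --to-ports $OUTBOUND_LISTENER_PORT"
  echo "iptables -t nat -A OUTPUT -p tcp -j PROXY_OUTPUT"
  # Traffic sent by the sidecar itself (UID 1500) is not redirected, avoiding a loop
  echo "iptables -t nat -A PROXY_OUTPUT -m owner --uid-owner $PROXY_UID -j RETURN"
  # Loopback traffic is not redirected
  echo "iptables -t nat -A PROXY_OUTPUT -d 127.0.0.1/32 -j RETURN"
  echo "iptables -t nat -A PROXY_OUTPUT -j PROXY_REDIRECT"

  # Inbound: redirect intercepted TCP to the Pipy inbound listener
  echo "iptables -t nat -A PROXY_IN_REDIRECT -p tcp -j REDIRECT --to-ports $INBOUND_LISTENER_PORT"
  echo "iptables -t nat -A PREROUTING -p tcp -j PROXY_INBOUND"
  # Metrics and probe ports are served directly, not via the inbound listener
  for port in "${INBOUND_BYPASS_PORTS[@]}"; do
    echo "iptables -t nat -A PROXY_INBOUND -p tcp --dport $port -j RETURN"
  done
  echo "iptables -t nat -A PROXY_INBOUND -p tcp -j PROXY_IN_REDIRECT"
}
```

Every rule matches `-p tcp`, which is why only TCP traffic is intercepted. The UID rule sits before the final jump in `PROXY_OUTPUT`, so Pipy's own outbound connections are never sent back to port 15001.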

Outbound IP range exclusions

Outbound TCP-based traffic from applications is intercepted by default using the iptables rules programmed by FSM and redirected to the Pipy proxy sidecar. In some cases, it is desirable to exempt certain IP ranges from being redirected to the Pipy proxy sidecar and routed according to service mesh policies. A common reason to exclude IP ranges is to keep traffic that is not part of the application's logic from passing through the Pipy proxy. Examples include traffic destined to the Kubernetes API server or to a cloud provider's instance metadata service. In such scenarios, certain IP ranges must be excluded from service mesh traffic routing policies.

Outbound IP ranges can be excluded at a global mesh scope or per pod scope.

1. Global outbound IP range exclusions

FSM provides the means to specify a global list of IP ranges to exclude from outbound traffic interception applicable to all pods in the mesh, as follows:

- During FSM install, using the --set option:

# To exclude the IP ranges 1.1.1.1/32 and 2.2.2.2/24 from outbound interception
fsm install --set=fsm.outboundIPRangeExclusionList="{1.1.1.1/32,2.2.2.2/24}"

- By setting the outboundIPRangeExclusionList field in the fsm-mesh-config resource:

# Assumes FSM is installed in the fsm-system namespace
kubectl patch meshconfig fsm-mesh-config -n fsm-system -p '{"spec":{"traffic":{"outboundIPRangeExclusionList":["1.1.1.1/32", "2.2.2.2/24"]}}}' --type=merge

When IP ranges are set for exclusion post-install, make sure to restart the pods in monitored namespaces for this change to take effect.

Globally excluded IP ranges are stored in the fsm-mesh-config MeshConfig custom resource and are read at the time of sidecar injection by fsm-injector. These dynamically configurable IP ranges are programmed by the init container along with the static rules used to intercept and redirect traffic via the Pipy proxy sidecar. Excluded IP ranges will not be intercepted for traffic redirection to the Pipy proxy sidecar. Refer to the outbound IP range exclusion demo to learn more.
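The Bash sketch below shows one way a comma-separated exclusion list could become iptables rules. Each CIDR turns into a `RETURN` rule inserted at the top of `PROXY_OUTPUT`, so matching destinations leave the chain before reaching the jump to the redirect chain. This is a dry-run illustration under that assumption, not FSM's actual code. `print_ip_exclusion_rules` is a hypothetical helper.

```bash
# Dry-run sketch: prints, but does not run, exclusion rules for PROXY_OUTPUT.
# print_ip_exclusion_rules is a hypothetical helper, not FSM's implementation.
print_ip_exclusion_rules() {
  local list="$1" cidr
  local -a cidrs
  IFS=',' read -r -a cidrs <<<"$list"
  for cidr in "${cidrs[@]}"; do
    cidr="${cidr// /}"            # tolerate spaces after commas
    [[ -z "$cidr" ]] && continue  # skip empty entries
    # -I inserts at the top of the chain, ahead of the jump to PROXY_REDIRECT
    echo "iptables -t nat -I PROXY_OUTPUT -d $cidr -j RETURN"
  done
}
```

For example, `print_ip_exclusion_rules "1.1.1.1/32,2.2.2.2/24"` prints one `RETURN` rule per range.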

2. Pod scoped outbound IP range exclusions

Outbound IP range exclusions can be configured at pod scope by annotating the pod to specify a comma separated list of IP CIDR ranges as flomesh.io/outbound-ip-range-exclusion-list=<comma separated list of IP CIDRs>.

# To exclude the IP ranges 10.244.0.0/16 and 10.96.0.0/16 from outbound interception on the pod

kubectl annotate pod <pod> flomesh.io/outbound-ip-range-exclusion-list="10.244.0.0/16,10.96.0.0/16"

When IP ranges are annotated post pod creation, make sure to restart the corresponding pods for this change to take effect.
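A malformed annotation value is easy to overlook. The value can be checked locally before annotating, for example with the minimal Bash sketch below. It assumes IPv4 CIDRs in `a.b.c.d/n` form only. `is_ipv4_cidr` and `validate_cidr_list` are hypothetical helpers.

```bash
# Sketch: sanity-check a comma-separated IPv4 CIDR list before annotating a pod.
# Hypothetical helpers; IPv6 and other forms are deliberately not handled.
is_ipv4_cidr() {
  local cidr="$1" octet
  local re='^([0-9]{1,3}(\.[0-9]{1,3}){3})/([0-9]{1,2})$'
  [[ "$cidr" =~ $re ]] || return 1
  local ip="${BASH_REMATCH[1]}" prefix="${BASH_REMATCH[3]}"
  (( 10#$prefix <= 32 )) || return 1   # 10# avoids octal parsing of e.g. "08"
  local -a octets
  IFS='.' read -r -a octets <<<"$ip"
  for octet in "${octets[@]}"; do
    (( 10#$octet <= 255 )) || return 1
  done
}

# validate_cidr_list LIST -> prints each invalid entry, returns 1 if any is invalid
validate_cidr_list() {
  local list="$1" cidr bad=0
  local -a cidrs
  IFS=',' read -r -a cidrs <<<"$list"
  for cidr in "${cidrs[@]}"; do
    if ! is_ipv4_cidr "$cidr"; then
      echo "invalid CIDR: '$cidr'"
      bad=1
    fi
  done
  return "$bad"
}
```

For example, `validate_cidr_list "10.244.0.0/16,10.96.0.0/16"` succeeds silently, while a prefix such as `/33` is reported.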

Outbound IP range inclusions

Outbound TCP-based traffic from applications is intercepted by default using the iptables rules programmed by FSM and redirected to the Pipy proxy sidecar. In some cases, it is desirable to redirect only certain IP ranges to the Pipy proxy sidecar for routing according to service mesh policies, and to let all remaining traffic bypass the sidecar. In such scenarios, inclusion IP ranges can be specified.

Outbound inclusion IP ranges can be specified at a global mesh scope or per pod scope.

1. Global outbound IP range inclusions

FSM provides the means to specify a global list of IP ranges to include for outbound traffic interception applicable to all pods in the mesh, as follows:

- During FSM install, using the --set option:

# To include the IP ranges 1.1.1.1/32 and 2.2.2.2/24 for outbound interception
fsm install --set=fsm.outboundIPRangeInclusionList="{1.1.1.1/32,2.2.2.2/24}"

- By setting the outboundIPRangeInclusionList field in the fsm-mesh-config resource:

# Assumes FSM is installed in the fsm-system namespace
kubectl patch meshconfig fsm-mesh-config -n fsm-system -p '{"spec":{"traffic":{"outboundIPRangeInclusionList":["1.1.1.1/32", "2.2.2.2/24"]}}}' --type=merge

When IP ranges are set for inclusion post-install, make sure to restart the pods in monitored namespaces for this change to take effect.

Globally included IP ranges are stored in the fsm-mesh-config MeshConfig custom resource and are read at the time of sidecar injection by fsm-injector. These dynamically configurable IP ranges are programmed by the init container along with the static rules used to intercept and redirect traffic via the Pipy proxy sidecar. IP addresses outside the specified inclusion IP ranges will not be intercepted for traffic redirection to the Pipy proxy sidecar.
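With inclusion ranges, the interception logic is inverted. The dry-run Bash sketch below assumes that the unconditional jump to `PROXY_REDIRECT` is replaced by per-range jumps. Only destinations inside a listed CIDR are redirected, and everything else returns from the chain untouched. `print_ip_inclusion_rules` is a hypothetical helper, and FSM's exact rules may differ.

```bash
# Dry-run sketch: prints, but does not run, inclusion rules for PROXY_OUTPUT.
# print_ip_inclusion_rules is a hypothetical helper, not FSM's implementation.
print_ip_inclusion_rules() {
  local list="$1" cidr
  local -a cidrs
  IFS=',' read -r -a cidrs <<<"$list"
  for cidr in "${cidrs[@]}"; do
    cidr="${cidr// /}"            # tolerate spaces after commas
    [[ -z "$cidr" ]] && continue  # skip empty entries
    # Only destinations inside an included range are sent to the sidecar
    echo "iptables -t nat -A PROXY_OUTPUT -d $cidr -j PROXY_REDIRECT"
  done
  # Everything else leaves the chain without being redirected
  echo "iptables -t nat -A PROXY_OUTPUT -j RETURN"
}
```

Because rules are appended in order, the final `RETURN` only catches traffic that none of the included ranges matched.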

2. Pod scoped outbound IP range inclusions

Outbound IP range inclusions can be configured at pod scope by annotating the pod to specify a comma separated list of IP CIDR ranges as flomesh.io/outbound-ip-range-inclusion-list=<comma separated list of IP CIDRs>.

# To include the IP ranges 10.244.0.0/16 and 10.96.0.0/16 for outbound interception on the pod

kubectl annotate pod <pod> flomesh.io/outbound-ip-range-inclusion-list="10.244.0.0/16,10.96.0.0/16"

When IP ranges are annotated post pod creation, make sure to restart the corresponding pods for this change to take effect.
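An annotation added directly to a pod does not survive when a controller such as a Deployment replaces that pod. The replacement pod is created from the Deployment's pod template and does not carry the annotation. For such workloads, a more durable option is to set the annotation on the pod template, which also makes Kubernetes roll out new pods on its own. The Bash sketch below builds such a patch. `template_annotation_patch` is a hypothetical helper. It performs no JSON escaping, so it assumes values without quotes or backslashes, such as CIDR or port lists.

```bash
# Sketch: build a patch that sets an annotation on a Deployment's pod template.
# template_annotation_patch is a hypothetical helper; KEY and VALUE are not JSON-escaped.
template_annotation_patch() {
  local key="$1" value="$2"
  printf '{"spec":{"template":{"metadata":{"annotations":{"%s":"%s"}}}}}\n' "$key" "$value"
}
```

For example: `kubectl patch deployment <name> -n <namespace> -p "$(template_annotation_patch flomesh.io/outbound-ip-range-inclusion-list 10.244.0.0/16,10.96.0.0/16)"`.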

Outbound port exclusions

Outbound TCP-based traffic from applications is intercepted by default using the iptables rules programmed by FSM and redirected to the Pipy proxy sidecar. In some cases, it is desirable to exempt certain ports from being redirected to the Pipy proxy sidecar and routed according to service mesh policies. A common reason to exclude ports is to keep traffic that is not part of the application's logic, such as control plane traffic, from passing through the Pipy proxy. In such scenarios, certain ports must be excluded from service mesh traffic routing policies.

Outbound ports can be excluded at a global mesh scope or per pod scope.

1. Global outbound port exclusions

FSM provides the means to specify a global list of ports to exclude from outbound traffic interception applicable to all pods in the mesh, as follows:

- During FSM install, using the --set option:

# To exclude the ports 6379 and 7070 from outbound sidecar interception
fsm install --set=fsm.outboundPortExclusionList="{6379,7070}"

- By setting the outboundPortExclusionList field in the fsm-mesh-config resource:

# Assumes FSM is installed in the fsm-system namespace
kubectl patch meshconfig fsm-mesh-config -n fsm-system -p '{"spec":{"traffic":{"outboundPortExclusionList":[6379, 7070]}}}' --type=merge

When ports are set for exclusion post-install, make sure to restart the pods in monitored namespaces for this change to take effect.

Globally excluded ports are stored in the fsm-mesh-config MeshConfig custom resource and are read at the time of sidecar injection by fsm-injector. These dynamically configurable ports are programmed by the init container along with the static rules used to intercept and redirect traffic via the Pipy proxy sidecar. Excluded ports will not be intercepted for traffic redirection to the Pipy proxy sidecar.
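Conceptually, each excluded port can be handled by a `RETURN` rule that matches the TCP destination port before the redirect jump. The dry-run Bash sketch below assumes one rule per port. FSM may group ports differently, for example with the iptables `multiport` match. `print_port_exclusion_rules` is a hypothetical helper.

```bash
# Dry-run sketch: prints, but does not run, port exclusion rules for PROXY_OUTPUT.
# print_port_exclusion_rules is a hypothetical helper, not FSM's implementation.
print_port_exclusion_rules() {
  local list="$1" port
  local -a ports
  IFS=',' read -r -a ports <<<"${list// /}"
  for port in "${ports[@]}"; do
    [[ -z "$port" ]] && continue  # skip empty entries
    # Matching TCP destination ports leave PROXY_OUTPUT before the redirect jump
    echo "iptables -t nat -I PROXY_OUTPUT -p tcp --dport $port -j RETURN"
  done
}
```

For example, `print_port_exclusion_rules "6379,7070"` prints one rule for each port.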

2. Pod scoped outbound port exclusions

Outbound port exclusions can be configured at pod scope by annotating the pod with a comma separated list of ports as flomesh.io/outbound-port-exclusion-list=<comma separated list of ports>:

# To exclude the ports 6379 and 7070 from outbound interception on the pod

kubectl annotate pod <pod> flomesh.io/outbound-port-exclusion-list=6379,7070

When ports are annotated post pod creation, make sure to restart the corresponding pods for this change to take effect.
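Restarting pods only to find that the annotation value had a typo is avoidable with a quick local check. The following is a minimal Bash sketch that assumes the value is a plain comma-separated list of TCP port numbers in the range 1 to 65535. `validate_port_list` is a hypothetical helper.

```bash
# Sketch: sanity-check a comma-separated port list before annotating a pod.
# validate_port_list is a hypothetical helper, not part of the fsm CLI.
validate_port_list() {
  local list="$1" port bad=0
  local re='^[0-9]+$'
  local -a ports
  IFS=',' read -r -a ports <<<"$list"
  for port in "${ports[@]}"; do
    # Non-numeric entries short-circuit before the arithmetic range check
    if [[ ! "$port" =~ $re ]] || (( 10#$port < 1 || 10#$port > 65535 )); then
      echo "invalid port: '$port'"
      bad=1
    fi
  done
  return "$bad"
}
```

For example, `validate_port_list "6379,7070"` succeeds silently, while an entry such as `70700` is reported.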