Multi Cluster

1 - Multi-cluster services access control

Pre-requisites

- Tools and clusters created in demo Multi-cluster services discovery & communication

This guide will expand on the knowledge we covered in previous guides and demonstrate how to configure and enable cross-cluster access control based on SMI. With FSM support for multi-clusters, users can define and enforce fine-grained access policies for services running across multiple Kubernetes clusters. This allows users to easily and securely manage access to services and resources, ensuring that only authorized users and applications have access to the appropriate services and resources.

Before we start, let’s review the SMI Access Control Specification. There are two traffic policy modes: Permissive Mode and Traffic Policy Mode. The former allows all services in the mesh to access each other, while the latter denies access unless an appropriate traffic policy is provided.

SMI Access Control Policy

In traffic policy mode, SMI defines ServiceAccount-based access control through the Kubernetes Custom Resource Definition (CRD) TrafficTarget, which specifies traffic sources (sources), a destination (destination), and rules (rules). It expresses that applications running under the ServiceAccounts listed in sources may access applications running under the ServiceAccount specified in destination, and that the permitted traffic is described by rules.

For example, the following manifest allows a workload running with ServiceAccount prometheus to send GET requests to the /metrics endpoint of a workload running with ServiceAccount service-a. The HTTPRouteGroup identifies the traffic: GET requests to the /metrics endpoint.

kind: HTTPRouteGroup
metadata:
  name: the-routes
spec:
  matches:
    - name: metrics
      pathRegex: "/metrics"
      methods:
        - GET
---
kind: TrafficTarget
metadata:
  name: path-specific
  namespace: default
spec:
  destination:
    kind: ServiceAccount
    name: service-a
    namespace: default
  rules:
    - kind: HTTPRouteGroup
      name: the-routes
      matches:
        - metrics
  sources:
    - kind: ServiceAccount
      name: prometheus
      namespace: default

So how does access control work across multiple clusters?

FSM’s ServiceExport

FSM’s ServiceExport exports a service to other clusters; this is the service registration step. The spec.serviceAccountName field of ServiceExport specifies the ServiceAccount used by the service’s workload.

apiVersion: flomesh.io/v1alpha1
kind: ServiceExport
metadata:
  namespace: httpbin
  name: httpbin
spec:
  serviceAccountName: "*"
  rules:
    - portNumber: 8080
      path: "/cluster-1/httpbin-mesh"
      pathType: Prefix
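When the same service is exported from several clusters, the manifest above only changes in a few fields. The following is a minimal sketch, not part of FSM: render_service_export is a hypothetical helper name, and it only prints YAML using the fields shown above so that the result can be reviewed before it is piped to kubectl apply -f -.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not an fsm command): print a ServiceExport manifest.
# Arguments: <namespace> <service> <cluster-name> <service-account>
render_service_export() {
  local namespace="$1" service="$2" cluster="$3" service_account="$4"
  cat <<EOF
apiVersion: flomesh.io/v1alpha1
kind: ServiceExport
metadata:
  namespace: ${namespace}
  name: ${service}
spec:
  serviceAccountName: "${service_account}"
  rules:
    - portNumber: 8080
      path: "/${cluster}/${service}-mesh"
      pathType: Prefix
EOF
}

# Example: preview the manifest for httpbin exported from cluster-1.
render_service_export httpbin httpbin cluster-1 httpbin
```

The port number 8080 and the "-mesh" path suffix are copied from the example above and would need adjusting for other services.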

Deploy the application

Deploy the sample application

Deploy the httpbin application under the httpbin namespace (managed by the mesh, which injects the sidecar) in clusters cluster-1 and cluster-3. Here we set the ServiceAccount to httpbin.

export NAMESPACE=httpbin
for CLUSTER_NAME in cluster-1 cluster-3
do
  kubectx k3d-${CLUSTER_NAME}
  kubectl create namespace ${NAMESPACE}
  fsm namespace add ${NAMESPACE}
  kubectl apply -n ${NAMESPACE} -f - <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: httpbin
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
  labels:
    app: pipy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pipy
  template:
    metadata:
      labels:
        app: pipy
    spec:
      serviceAccountName: httpbin
      containers:
        - name: pipy
          image: flomesh/pipy:latest
          ports:
            - containerPort: 8080
          command:
            - pipy
            - -e
            - |
              pipy()
              .listen(8080)
              .serveHTTP(new Message('Hi, I am from ${CLUSTER_NAME} and controlled by mesh!\n'))
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
spec:
  ports:
    - port: 8080
      targetPort: 8080
      protocol: TCP
  selector:
    app: pipy
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin-${CLUSTER_NAME}
spec:
  ports:
    - port: 8080
      targetPort: 8080
      protocol: TCP
  selector:
    app: pipy
EOF
  sleep 3
  kubectl wait --for=condition=ready pod -n ${NAMESPACE} --all --timeout=60s
done

Deploy the httpbin application under the namespace httpbin in cluster cluster-2, but do not specify a ServiceAccount, so that the default ServiceAccount default is used.

export NAMESPACE=httpbin
export CLUSTER_NAME=cluster-2
kubectx k3d-${CLUSTER_NAME}
kubectl create namespace ${NAMESPACE}
fsm namespace add ${NAMESPACE}
kubectl apply -n ${NAMESPACE} -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
  labels:
    app: pipy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pipy
  template:
    metadata:
      labels:
        app: pipy
    spec:
      containers:
        - name: pipy
          image: flomesh/pipy:latest
          ports:
            - containerPort: 8080
          command:
            - pipy
            - -e
            - |
              pipy()
              .listen(8080)
              .serveHTTP(new Message('Hi, I am from ${CLUSTER_NAME} and controlled by mesh!\n'))
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
spec:
  ports:
    - port: 8080
      targetPort: 8080
      protocol: TCP
  selector:
    app: pipy
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin-${CLUSTER_NAME}
spec:
  ports:
    - port: 8080
      targetPort: 8080
      protocol: TCP
  selector:
    app: pipy
EOF
sleep 3
kubectl wait --for=condition=ready pod -n ${NAMESPACE} --all --timeout=60s

Deploy the curl application under the namespace curl in cluster cluster-2. The namespace is managed by the mesh, and the injected sidecar handles dispatching its traffic across clusters. Here we use the ServiceAccount curl.

export NAMESPACE=curl
kubectx k3d-cluster-2
kubectl create namespace ${NAMESPACE}
fsm namespace add ${NAMESPACE}
kubectl apply -n ${NAMESPACE} -f - <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: curl
---
apiVersion: v1
kind: Service
metadata:
  name: curl
  labels:
    app: curl
    service: curl
spec:
  ports:
    - name: http
      port: 80
  selector:
    app: curl
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: curl
spec:
  replicas: 1
  selector:
    matchLabels:
      app: curl
  template:
    metadata:
      labels:
        app: curl
    spec:
      serviceAccountName: curl
      containers:
        - image: curlimages/curl
          imagePullPolicy: IfNotPresent
          name: curl
          command: ["sleep", "365d"]
EOF
sleep 3
kubectl wait --for=condition=ready pod -n ${NAMESPACE} --all --timeout=60s

Export service

export NAMESPACE_MESH=httpbin
for CLUSTER_NAME in cluster-1 cluster-3
do
  kubectx k3d-${CLUSTER_NAME}
  kubectl apply -f - <<EOF
apiVersion: flomesh.io/v1alpha1
kind: ServiceExport
metadata:
  namespace: ${NAMESPACE_MESH}
  name: httpbin
spec:
  serviceAccountName: "httpbin"
  rules:
    - portNumber: 8080
      path: "/${CLUSTER_NAME}/httpbin-mesh"
      pathType: Prefix
---
apiVersion: flomesh.io/v1alpha1
kind: ServiceExport
metadata:
  namespace: ${NAMESPACE_MESH}
  name: httpbin-${CLUSTER_NAME}
spec:
  serviceAccountName: "httpbin"
  rules:
    - portNumber: 8080
      path: "/${CLUSTER_NAME}/httpbin-mesh-${CLUSTER_NAME}"
      pathType: Prefix
EOF
  sleep 1
done

Test

We switch back to the cluster cluster-2.

kubectx k3d-cluster-2

The default route type is Locality and we need to create an ActiveActive policy to allow requests to be processed using service instances from other clusters.

kubectl apply -n httpbin -f - <<EOF
apiVersion: flomesh.io/v1alpha1
kind: GlobalTrafficPolicy
metadata:
  name: httpbin
spec:
  lbType: ActiveActive
  targets:
    - clusterKey: default/default/default/cluster-1
    - clusterKey: default/default/default/cluster-3
EOF

In the curl application of the cluster-2 cluster, we send a request to httpbin.httpbin.

curl_client="$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')"

kubectl exec "${curl_client}" -n curl -c curl -- curl -s http://httpbin.httpbin:8080/

Sending a few more requests produces responses like the following.

Hi, I am from cluster-1 and controlled by mesh!

Hi, I am from cluster-2 and controlled by mesh!

Hi, I am from cluster-3 and controlled by mesh!

Hi, I am from cluster-1 and controlled by mesh!

Hi, I am from cluster-2 and controlled by mesh!

Hi, I am from cluster-3 and controlled by mesh!
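To see how the responses are spread across clusters without reading them one by one, the output can be tallied. This is a small sketch rather than part of the demo: tally_clusters is a hypothetical helper that assumes each response contains the cluster name in the form cluster-N, as in the sample responses above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read one response per line on stdin and print
# "<cluster-name> <count>" for every cluster that answered.
tally_clusters() {
  grep -o 'cluster-[0-9][0-9]*' | sort | uniq -c | awk '{ print $2, $1 }'
}

# Example with the six sample responses shown above.
printf 'Hi, I am from cluster-%s and controlled by mesh!\n' 1 2 3 1 2 3 | tally_clusters
```

Against a live environment, the same function could be fed by running the kubectl exec command above in a loop and piping the combined output into tally_clusters.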

Demo

Adjusting the traffic policy mode

Let’s adjust the traffic policy mode of cluster cluster-2 so that the traffic policy can be applied.

kubectx k3d-cluster-2

export fsm_namespace=fsm-system

kubectl patch meshconfig fsm-mesh-config -n "$fsm_namespace" -p '{"spec":{"traffic":{"enablePermissiveTrafficPolicyMode":false}}}' --type=merge

In this case, if you try to send the request again, you will find that the request fails. This is because in traffic policy mode, inter-application access is prohibited if no policy is configured.

kubectl exec "${curl_client}" -n curl -c curl -- curl -s http://httpbin.httpbin:8080/

command terminated with exit code 52
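Before testing policies it can help to confirm which mode a cluster is actually in by reading back the flag patched above. A minimal sketch, assuming the same MeshConfig name (fsm-mesh-config) and default namespace (fsm-system) used in the patch command; traffic_mode is a hypothetical helper name, not an fsm command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report the SMI traffic mode of the current cluster.
# Argument: [fsm-namespace] (defaults to fsm-system, as used in this guide)
traffic_mode() {
  local fsm_namespace="${1:-fsm-system}" permissive
  permissive="$(kubectl get meshconfig fsm-mesh-config -n "$fsm_namespace" \
    -o jsonpath='{.spec.traffic.enablePermissiveTrafficPolicyMode}')"
  if [ "$permissive" = "true" ]; then
    echo "permissive"
  elif [ "$permissive" = "false" ]; then
    echo "traffic-policy"
  else
    # Empty or unexpected value, for example when the MeshConfig was not found.
    echo "unknown"
  fi
}
```

After the patch above, calling traffic_mode in cluster-2 would be expected to print traffic-policy.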

Application Access Control Policy

As mentioned at the beginning of this guide, SMI access control policies are based on ServiceAccount. That is why the httpbin service we deployed uses a different ServiceAccount in cluster cluster-2 than in clusters cluster-1 and cluster-3.

- cluster-1: httpbin
- cluster-2: default
- cluster-3: httpbin

Next, we will create separate TrafficTarget access control policies for in-cluster and out-of-cluster services, distinguished by the ServiceAccount of the destination workload in each TrafficTarget.

Execute the following command to create a traffic policy curl-to-httpbin that allows curl to access workloads in the namespace httpbin that use ServiceAccount default.

kubectl apply -n httpbin -f - <<EOF
apiVersion: specs.smi-spec.io/v1alpha4
kind: HTTPRouteGroup
metadata:
  name: httpbin-route
spec:
  matches:
    - name: all
      pathRegex: "/"
      methods:
        - GET
---
kind: TrafficTarget
apiVersion: access.smi-spec.io/v1alpha3
metadata:
  name: curl-to-httpbin
spec:
  destination:
    kind: ServiceAccount
    name: default
    namespace: httpbin
  rules:
    - kind: HTTPRouteGroup
      name: httpbin-route
      matches:
        - all
  sources:
    - kind: ServiceAccount
      name: curl
      namespace: curl
EOF

Send several requests: only the service in cluster cluster-2 responds, while clusters cluster-1 and cluster-3 do not take part.

Hi, I am from cluster-2 and controlled by mesh!

Hi, I am from cluster-2 and controlled by mesh!

Hi, I am from cluster-2 and controlled by mesh!

Execute the following command to check ServiceImports and you can see that cluster-1 and cluster-3 export services using ServiceAccount httpbin.

kubectl get serviceimports httpbin -n httpbin -o jsonpath='{.spec}' | jq

{
  "ports": [
    {
      "endpoints": [
        {
          "clusterKey": "default/default/default/cluster-1",
          "target": {
            "host": "192.168.1.110",
            "ip": "192.168.1.110",
            "path": "/cluster-1/httpbin-mesh",
            "port": 81
          }
        },
        {
          "clusterKey": "default/default/default/cluster-3",
          "target": {
            "host": "192.168.1.110",
            "ip": "192.168.1.110",
            "path": "/cluster-3/httpbin-mesh",
            "port": 83
          }
        }
      ],
      "port": 8080,
      "protocol": "TCP"
    }
  ],
  "serviceAccountName": "httpbin",
  "type": "ClusterSetIP"
}

So, we create another TrafficTarget curl-to-ext-httpbin that allows curl to access workloads using ServiceAccount httpbin.

kubectl apply -n httpbin -f - <<EOF
kind: TrafficTarget
apiVersion: access.smi-spec.io/v1alpha3
metadata:
  name: curl-to-ext-httpbin
spec:
  destination:
    kind: ServiceAccount
    name: httpbin
    namespace: httpbin
  rules:
    - kind: HTTPRouteGroup
      name: httpbin-route
      matches:
        - all
  sources:
    - kind: ServiceAccount
      name: curl
      namespace: curl
EOF

After applying the policy, test it again and all requests are successful.

Hi, I am from cluster-2 and controlled by mesh!

Hi, I am from cluster-1 and controlled by mesh!

Hi, I am from cluster-3 and controlled by mesh!
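The two TrafficTarget resources above differ only in their name and destination ServiceAccount, so they can be generated from a template. The sketch below is illustrative only: render_traffic_target is a hypothetical helper, and it assumes the HTTPRouteGroup it references (httpbin-route in this guide) already exists in the namespace where the output is applied.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a TrafficTarget that lets one ServiceAccount
# reach another through a named match of an existing HTTPRouteGroup.
# Arguments: <name> <source-sa> <source-ns> <dest-sa> <dest-ns> <route-group> <match>
render_traffic_target() {
  local name="$1" src_sa="$2" src_ns="$3" dst_sa="$4" dst_ns="$5" route="$6" match="$7"
  cat <<EOF
kind: TrafficTarget
apiVersion: access.smi-spec.io/v1alpha3
metadata:
  name: ${name}
spec:
  destination:
    kind: ServiceAccount
    name: ${dst_sa}
    namespace: ${dst_ns}
  rules:
    - kind: HTTPRouteGroup
      name: ${route}
      matches:
        - ${match}
  sources:
    - kind: ServiceAccount
      name: ${src_sa}
      namespace: ${src_ns}
EOF
}

# Example: reproduce the curl-to-ext-httpbin policy from this guide.
render_traffic_target curl-to-ext-httpbin curl curl httpbin httpbin httpbin-route all
```

Piping the output to kubectl apply -n httpbin -f - would create the same policy as the heredoc version above.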

2 - Multi-cluster services discovery & communication

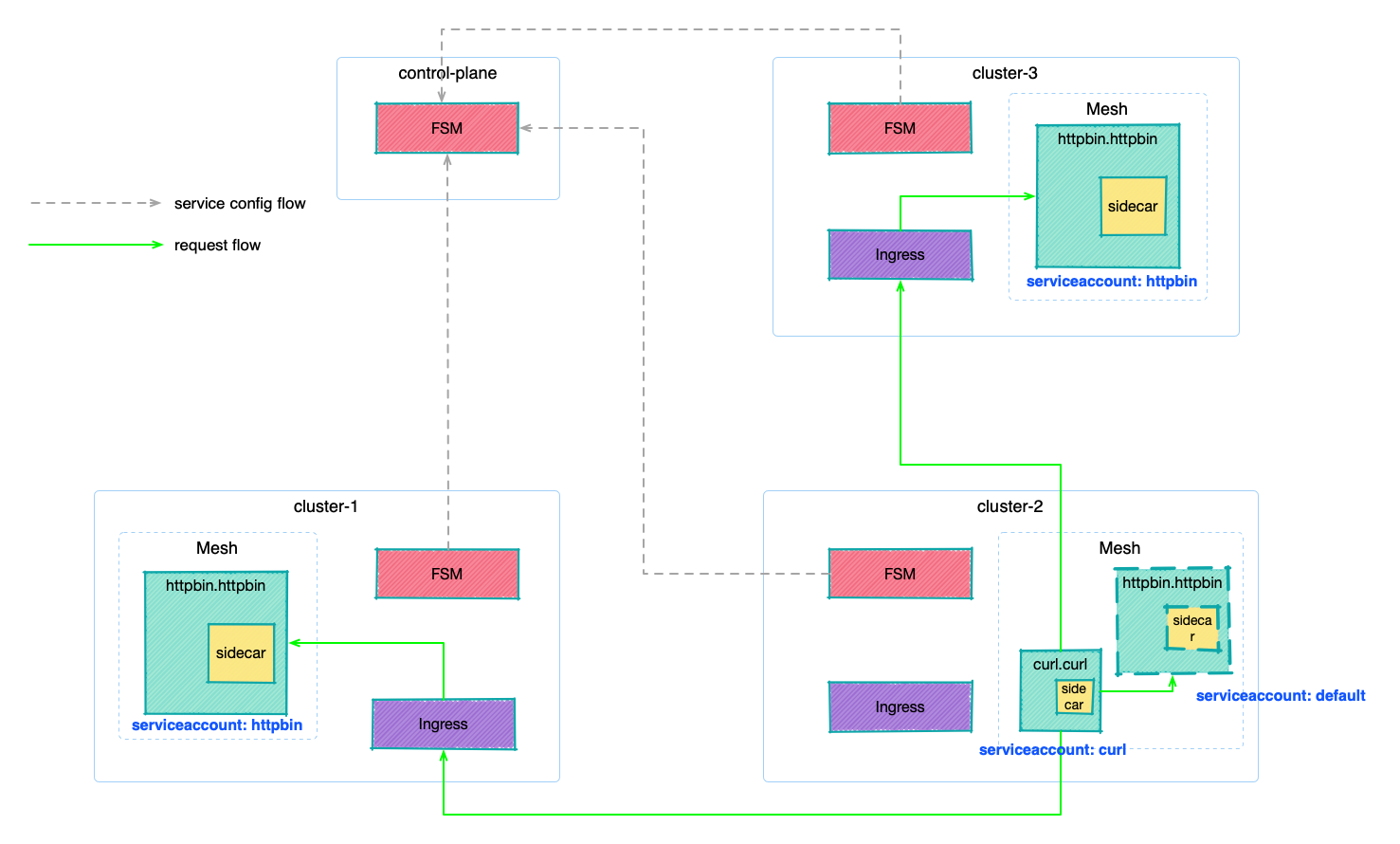

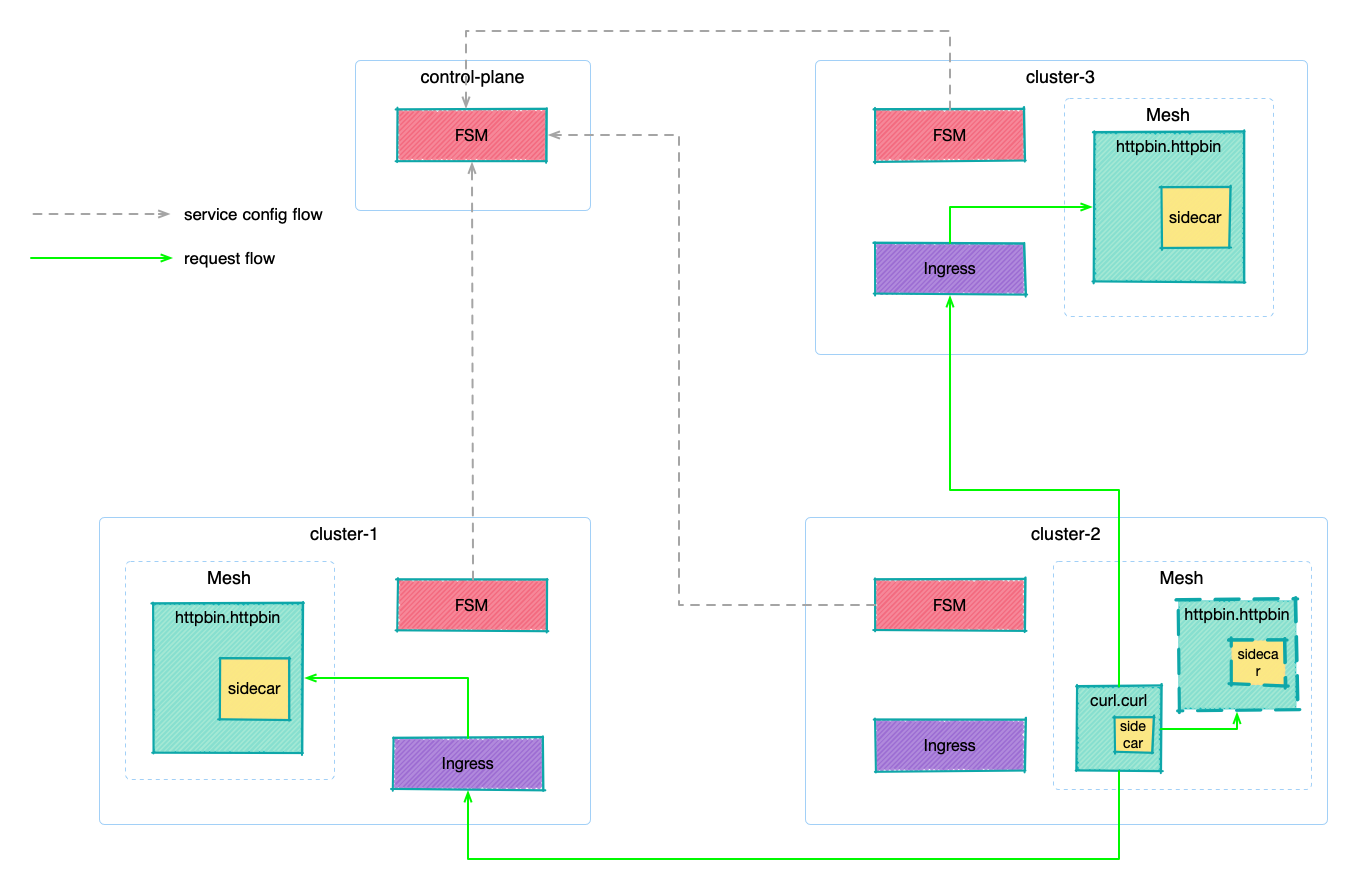

Demo Architecture

For demonstration purposes we will create 4 Kubernetes clusters, and the high-level architecture will look something like the below:

As a convention for this demo, we will create a separate stand-alone cluster to serve as the control plane cluster. A separate cluster isn’t strictly required, though; any existing cluster could take that role.

Pre-requisites

- kubectx: for switching between multiple kubeconfig contexts (clusters)
- k3d: for creating multiple k3s clusters locally using containers
- FSM CLI: for deploying FSM
- docker: required to run k3d
- Have fsm CLI available for managing the service mesh.
- FSM version >= v1.2.0.

Demo clusters & environment setup

In this demo, we will use k3d, a lightweight wrapper that runs k3s (Rancher Labs’ minimal Kubernetes distribution) in Docker, to create 4 separate clusters named control-plane, cluster-1, cluster-2, and cluster-3.

We will be using the HOST machine IP address and separate ports during the installation, for us to easily access the individual clusters. My demo host machine IP address is 192.168.1.110 (it might be different for your machine).

| cluster | cluster ip | api-server port | LB external-port | description |
|---|---|---|---|---|
| control-plane | HOST_IP(192.168.1.110) | 6444 | N/A | control-plane cluster |
| cluster-1 | HOST_IP(192.168.1.110) | 6445 | 81 | application cluster |
| cluster-2 | HOST_IP(192.168.1.110) | 6446 | 82 | application cluster |
| cluster-3 | HOST_IP(192.168.1.110) | 6447 | 83 | application cluster |
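The port assignments in the table follow a simple pattern, which later scripts in this demo rely on when they compute ports from the cluster index. As a sketch of that pattern (cluster_ports is a hypothetical helper name, and the base ports 6444 and 80 are taken from the table above):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<api-server port> <LB external port>" for a
# demo cluster, following the table above (control-plane has no LB port).
cluster_ports() {
  case "$1" in
    control-plane)
      echo "6444 N/A"
      ;;
    cluster-[1-3])
      local index="${1#cluster-}"
      echo "$((6444 + index)) $((80 + index))"
      ;;
    *)
      echo "unknown cluster: $1" >&2
      return 1
      ;;
  esac
}

# Example: look up the ports for cluster-2.
cluster_ports cluster-2
```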

Network

Create a Docker bridge network named multi-clusters, in which all the cluster containers will run.

docker network create multi-clusters

Find your machine host IP address, mine is 192.168.1.110, and export that as an environment variable to be used later.

export HOST_IP=192.168.1.110

Cluster creation

We are going to use k3d to create 4 clusters.

API_PORT=6444 #6444 6445 6446 6447
PORT=80 #81 82 83
for CLUSTER_NAME in control-plane cluster-1 cluster-2 cluster-3
do
  k3d cluster create ${CLUSTER_NAME} \
    --image docker.io/rancher/k3s:v1.23.8-k3s2 \
    --api-port "${HOST_IP}:${API_PORT}" \
    --port "${PORT}:80@server:0" \
    --servers-memory 4g \
    --k3s-arg "--disable=traefik@server:0" \
    --network multi-clusters \
    --timeout 120s \
    --wait
  ((API_PORT=API_PORT+1))
  ((PORT=PORT+1))
done

Install FSM

Install the service mesh FSM to the clusters cluster-1, cluster-2, and cluster-3. The control plane does not handle application traffic and does not need to be installed.

export FSM_NAMESPACE=fsm-system
export FSM_MESH_NAME=fsm
# This script assumes that kubeconfig_cp, kubeconfig_c1, kubeconfig_c2 and kubeconfig_c3
# each hold the path to a cluster's kubeconfig file, and that fsm_binary holds the path
# to the fsm CLI. These variables are not defined elsewhere in this guide.
for CONFIG in kubeconfig_cp kubeconfig_c1 kubeconfig_c2 kubeconfig_c3; do
  DNS_SVC_IP="$(kubectl --kubeconfig ${!CONFIG} get svc -n kube-system -l k8s-app=kube-dns -o jsonpath='{.items[0].spec.clusterIP}')"
  # Map the variable name to a cluster name (used only for the log message).
  case "${CONFIG}" in
    kubeconfig_cp) CLUSTER_NAME="control-plane" ;;
    kubeconfig_c1) CLUSTER_NAME="cluster-1" ;;
    kubeconfig_c2) CLUSTER_NAME="cluster-2" ;;
    *) CLUSTER_NAME="cluster-3" ;;
  esac
  echo "Installing fsm service mesh in cluster ${CLUSTER_NAME}"
  KUBECONFIG=${!CONFIG} $fsm_binary install \
    --mesh-name "$FSM_MESH_NAME" \
    --fsm-namespace "$FSM_NAMESPACE" \
    --set=fsm.certificateProvider.kind=tresor \
    --set=fsm.image.pullPolicy=Always \
    --set=fsm.sidecarLogLevel=error \
    --set=fsm.controllerLogLevel=warn \
    --set=fsm.fsmIngress.enabled=true \
    --timeout=900s \
    --set=fsm.localDNSProxy.enable=true \
    --set=fsm.localDNSProxy.primaryUpstreamDNSServerIPAddr="${DNS_SVC_IP}"
  kubectl --kubeconfig ${!CONFIG} wait --for=condition=ready pod --all -n $FSM_NAMESPACE --timeout=120s
done

We have our clusters ready, now we need to federate them together, but before we do that, let’s first understand the mechanics on how FSM is configured.

Federate clusters

We will enroll clusters cluster-1, cluster-2, and cluster-3 into the management of control-plane cluster.

export HOST_IP=192.168.1.110
kubectx k3d-control-plane
sleep 1
PORT=81
for CLUSTER_NAME in cluster-1 cluster-2 cluster-3
do
  # Apply the Cluster resource to the control plane (printing it with cat would not enroll anything).
  kubectl apply -f - <<EOF
apiVersion: flomesh.io/v1alpha1
kind: Cluster
metadata:
  name: ${CLUSTER_NAME}
spec:
  gatewayHost: ${HOST_IP}
  gatewayPort: ${PORT}
  fsmMeshConfigName: ${FSM_NAMESPACE}
  kubeconfig: |+
`k3d kubeconfig get ${CLUSTER_NAME} | sed 's|^|    |g' | sed "s|0.0.0.0|$HOST_IP|g"`
EOF
  ((PORT=PORT+1))
done

Deploy Demo application

Deploying mesh-managed applications

Deploy the httpbin application under the httpbin namespace of clusters cluster-1 and cluster-3 (which are managed by the mesh and will inject sidecar). Here the httpbin application is implemented by Pipy and will return the current cluster name.

export NAMESPACE=httpbin
for CLUSTER_NAME in cluster-1 cluster-3
do
  kubectx k3d-${CLUSTER_NAME}
  kubectl create namespace ${NAMESPACE}
  fsm namespace add ${NAMESPACE}
  kubectl apply -n ${NAMESPACE} -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
  labels:
    app: pipy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pipy
  template:
    metadata:
      labels:
        app: pipy
    spec:
      containers:
        - name: pipy
          image: flomesh/pipy:latest
          ports:
            - containerPort: 8080
          command:
            - pipy
            - -e
            - |
              pipy()
              .listen(8080)
              .serveHTTP(new Message('Hi, I am from ${CLUSTER_NAME} and controlled by mesh!\n'))
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
spec:
  ports:
    - port: 8080
      targetPort: 8080
      protocol: TCP
  selector:
    app: pipy
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin-${CLUSTER_NAME}
spec:
  ports:
    - port: 8080
      targetPort: 8080
      protocol: TCP
  selector:
    app: pipy
EOF
  sleep 3
  kubectl wait --for=condition=ready pod -n ${NAMESPACE} --all --timeout=60s
done

Deploy the curl application under the namespace curl in cluster cluster-2, which is managed by the mesh.

export NAMESPACE=curl
kubectx k3d-cluster-2
kubectl create namespace ${NAMESPACE}
fsm namespace add ${NAMESPACE}
kubectl apply -n ${NAMESPACE} -f - <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: curl
---
apiVersion: v1
kind: Service
metadata:
  name: curl
  labels:
    app: curl
    service: curl
spec:
  ports:
    - name: http
      port: 80
  selector:
    app: curl
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: curl
spec:
  replicas: 1
  selector:
    matchLabels:
      app: curl
  template:
    metadata:
      labels:
        app: curl
    spec:
      serviceAccountName: curl
      containers:
        - image: curlimages/curl
          imagePullPolicy: IfNotPresent
          name: curl
          command: ["sleep", "365d"]
EOF
sleep 3
kubectl wait --for=condition=ready pod -n ${NAMESPACE} --all --timeout=60s

Export Service

Let’s export services in cluster-1 and cluster-3

export NAMESPACE_MESH=httpbin
for CLUSTER_NAME in cluster-1 cluster-3
do
  kubectx k3d-${CLUSTER_NAME}
  kubectl apply -f - <<EOF
apiVersion: flomesh.io/v1alpha1
kind: ServiceExport
metadata:
  namespace: ${NAMESPACE_MESH}
  name: httpbin
spec:
  serviceAccountName: "*"
  rules:
    - portNumber: 8080
      path: "/${CLUSTER_NAME}/httpbin-mesh"
      pathType: Prefix
---
apiVersion: flomesh.io/v1alpha1
kind: ServiceExport
metadata:
  namespace: ${NAMESPACE_MESH}
  name: httpbin-${CLUSTER_NAME}
spec:
  serviceAccountName: "*"
  rules:
    - portNumber: 8080
      path: "/${CLUSTER_NAME}/httpbin-mesh-${CLUSTER_NAME}"
      pathType: Prefix
EOF
  sleep 1
done

After exporting the services, FSM will automatically create Ingress rules for them, and with the rules, you can access these services through Ingress.

for CLUSTER_NAME_INDEX in 1 3
do
  CLUSTER_NAME=cluster-${CLUSTER_NAME_INDEX}
  ((PORT=80+CLUSTER_NAME_INDEX))
  kubectx k3d-${CLUSTER_NAME}
  echo "Getting service exported in cluster ${CLUSTER_NAME}"
  echo '-----------------------------------'
  kubectl get serviceexports.flomesh.io -A
  echo '-----------------------------------'
  curl -s "http://${HOST_IP}:${PORT}/${CLUSTER_NAME}/httpbin-mesh"
  curl -s "http://${HOST_IP}:${PORT}/${CLUSTER_NAME}/httpbin-mesh-${CLUSTER_NAME}"
  echo '-----------------------------------'
done

To view one of the ServiceExport resources:

kubectl get serviceexports httpbin -n httpbin -o jsonpath='{.spec}' | jq

{
  "loadBalancer": "RoundRobinLoadBalancer",
  "rules": [
    {
      "path": "/cluster-3/httpbin-mesh",
      "pathType": "Prefix",
      "portNumber": 8080
    }
  ],
  "serviceAccountName": "*"
}

The exported services can be imported into other managed clusters. For example, if we look at the cluster cluster-2, we can have multiple services imported.

kubectx k3d-cluster-2
kubectl get serviceimports -A

NAMESPACE   NAME                AGE
httpbin     httpbin-cluster-1   13m
httpbin     httpbin-cluster-3   13m
httpbin     httpbin             13m

Testing

Staying in the cluster-2 cluster (kubectx k3d-cluster-2), we test if we can access these imported services from the curl application in the mesh.

Get the pod of the curl application, from which we will later launch requests to simulate service access.

curl_client="$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')"

At this point you will find that it is not accessible.

kubectl exec "${curl_client}" -n curl -c curl -- curl -s http://httpbin.httpbin:8080/

command terminated with exit code 7

Note that this is expected: by default, no instances in other clusters are used to respond to requests, so no calls to other clusters are made. To make the service accessible, we need to understand the global traffic policy, GlobalTrafficPolicy.

Global Traffic Policy

Note that global traffic policies are set on the consumer side of a service, so in this demo they are set in cluster cluster-2. Before you start, switch to cluster cluster-2: kubectx k3d-cluster-2.

The global traffic policy is set via CRD GlobalTrafficPolicy.

type GlobalTrafficPolicy struct {
    metav1.TypeMeta   `json:",inline"`
    metav1.ObjectMeta `json:"metadata,omitempty"`

    Spec   GlobalTrafficPolicySpec   `json:"spec,omitempty"`
    Status GlobalTrafficPolicyStatus `json:"status,omitempty"`
}

type GlobalTrafficPolicySpec struct {
    LbType            LoadBalancerType `json:"lbType"`
    LoadBalanceTarget []TrafficTarget  `json:"targets"`
}

The global load balancing type, set via .spec.lbType, takes one of three values:

- Locality: uses only the services of this cluster, and is also the default type. This is why accessing the httpbin application fails when we don’t provide any global policy, because there is no such service in cluster cluster-2.
- FailOver: proxies to other clusters only when access to this cluster fails, which is often referred to as failover, similar to primary/backup.
- ActiveActive: proxies to other clusters under normal conditions, similar to a multi-active deployment.

The FailOver and ActiveActive policies are used with the targets field to specify the IDs of the other clusters, that is, the clusters that traffic can be routed to on failure or for load balancing. For example, if you look at the imported service httpbin/httpbin in cluster cluster-2, you can see that it has two endpoints in external clusters. Note that endpoints here is a different concept from the native endpoints.v1 and contains more information, including the cluster ID clusterKey.

kubectl get serviceimports httpbin -n httpbin -o jsonpath='{.spec}' | jq

{
  "ports": [
    {
      "endpoints": [
        {
          "clusterKey": "default/default/default/cluster-1",
          "target": {
            "host": "192.168.1.110",
            "ip": "192.168.1.110",
            "path": "/cluster-1/httpbin-mesh",
            "port": 81
          }
        },
        {
          "clusterKey": "default/default/default/cluster-3",
          "target": {
            "host": "192.168.1.110",
            "ip": "192.168.1.110",
            "path": "/cluster-3/httpbin-mesh",
            "port": 83
          }
        }
      ],
      "port": 8080,
      "protocol": "TCP"
    }
  ],
  "serviceAccountName": "*",
  "type": "ClusterSetIP"
}
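The three lbType values described above can be summarised as a small decision function. This is only a model of the behaviour this guide describes, not FSM's implementation: candidate_clusters and its arguments are made up for illustration, and "local" stands for the cluster where the request originates.

```shell
#!/usr/bin/env bash
# Hypothetical model (not FSM code): list which clusters may serve a request.
# Arguments: <lbType> <local-available: yes|no> [target-cluster ...]
candidate_clusters() {
  local lb_type="$1" local_available="$2"
  shift 2
  case "$lb_type" in
    Locality)
      # Only the local cluster is ever used; targets are ignored.
      if [ "$local_available" = "yes" ]; then echo "local"; fi
      ;;
    FailOver)
      # Targets are used only when the local service is unavailable.
      if [ "$local_available" = "yes" ]; then
        echo "local"
      elif [ "$#" -gt 0 ]; then
        printf '%s\n' "$@"
      fi
      ;;
    ActiveActive)
      # The local cluster and the target clusters share the load.
      if [ "$local_available" = "yes" ]; then echo "local"; fi
      if [ "$#" -gt 0 ]; then printf '%s\n' "$@"; fi
      ;;
    *)
      echo "unknown lbType: $lb_type" >&2
      return 1
      ;;
  esac
}

# Example: FailOver with no local httpbin falls back to the two targets.
candidate_clusters FailOver no cluster-1 cluster-3
```

An empty result for Locality with no local service corresponds to the failed request shown earlier in this guide.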

Routing Type - Locality

The default routing type is Locality, and as tested above, traffic cannot be dispatched to other clusters.

kubectl exec "${curl_client}" -n curl -c curl -- curl -s http://httpbin.httpbin:8080/

command terminated with exit code 7

Routing Type - FailOver

Since access fails when no global traffic policy is set, we start by enabling FailOver mode. Note that the global traffic policy must have the same name and namespace as the target service. For example, to access http://httpbin.httpbin:8080/, we need to create a GlobalTrafficPolicy resource named httpbin in the namespace httpbin.

kubectl apply -n httpbin -f - <<EOF
apiVersion: flomesh.io/v1alpha1
kind: GlobalTrafficPolicy
metadata:
  name: httpbin
spec:
  lbType: FailOver
  targets:
    - clusterKey: default/default/default/cluster-1
    - clusterKey: default/default/default/cluster-3
EOF

After setting the policy, let’s send the request again.

kubectl exec "${curl_client}" -n curl -c curl -- curl -s http://httpbin.httpbin:8080/

Hi, I am from cluster-1 and controlled by mesh!

The request succeeds and is proxied to the service in cluster cluster-1. Sending another request shows it proxied to cluster cluster-3, as expected with load balancing.

kubectl exec "${curl_client}" -n curl -c curl -- curl -s http://httpbin.httpbin:8080/

Hi, I am from cluster-3 and controlled by mesh!

What will happen if we deploy the application httpbin in the namespace httpbin of the cluster cluster-2?

export NAMESPACE=httpbin
export CLUSTER_NAME=cluster-2
kubectx k3d-${CLUSTER_NAME}
kubectl create namespace ${NAMESPACE}
fsm namespace add ${NAMESPACE}
kubectl apply -n ${NAMESPACE} -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
  labels:
    app: pipy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pipy
  template:
    metadata:
      labels:
        app: pipy
    spec:
      containers:
        - name: pipy
          image: flomesh/pipy:latest
          ports:
            - containerPort: 8080
          command:
            - pipy
            - -e
            - |
              pipy()
              .listen(8080)
              .serveHTTP(new Message('Hi, I am from ${CLUSTER_NAME}!\n'))
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
spec:
  ports:
    - port: 8080
      targetPort: 8080
      protocol: TCP
  selector:
    app: pipy
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin-${CLUSTER_NAME}
spec:
  ports:
    - port: 8080
      targetPort: 8080
      protocol: TCP
  selector:
    app: pipy
EOF
sleep 3
kubectl wait --for=condition=ready pod -n ${NAMESPACE} --all --timeout=60s

Once the application is running, send the request again. The result shows that the request is handled in the current cluster.

kubectl exec "${curl_client}" -n curl -c curl -- curl -s http://httpbin.httpbin:8080/

Hi, I am from cluster-2!

Even if the request is repeated multiple times, it always returns Hi, I am from cluster-2!, which indicates that services in the same cluster are used in preference to services imported from other clusters.

In some cases, we also want other clusters to participate in the service as well, because the resources of other clusters are wasted if only the services of this cluster are used. This is where the ActiveActive routing type comes into play.

Routing Type - ActiveActive

Continuing from the state above, let’s test the ActiveActive type by updating the policy created earlier to ActiveActive:

kubectl apply -n httpbin -f - <<EOF
apiVersion: flomesh.io/v1alpha1
kind: GlobalTrafficPolicy
metadata:
  name: httpbin
spec:
  lbType: ActiveActive
  targets:
    - clusterKey: default/default/default/cluster-1
    - clusterKey: default/default/default/cluster-3
EOF

Multiple requests will show that httpbin from all three clusters will participate in the service. This indicates that the load is being proxied to multiple clusters in a balanced manner.

kubectl exec "${curl_client}" -n curl -c curl -- curl -s http://httpbin.httpbin:8080/

Hi, I am from cluster-1 and controlled by mesh!

kubectl exec "${curl_client}" -n curl -c curl -- curl -s http://httpbin.httpbin:8080/

Hi, I am from cluster-2!

kubectl exec "${curl_client}" -n curl -c curl -- curl -s http://httpbin.httpbin:8080/

Hi, I am from cluster-3 and controlled by mesh!
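The FailOver and ActiveActive policies used in this guide differ only in lbType, so switching between them can be scripted. The following is a rough sketch under stated assumptions: render_global_traffic_policy is a hypothetical helper, and the clusterKey prefix default/default/default/ is copied from the examples in this guide and may differ in other environments.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a GlobalTrafficPolicy for a service.
# Arguments: <service-name> <lbType> [target-cluster-name ...]
# The policy name is set to the service name, as this guide requires.
render_global_traffic_policy() {
  local service="$1" lb_type="$2" cluster
  shift 2
  cat <<EOF
apiVersion: flomesh.io/v1alpha1
kind: GlobalTrafficPolicy
metadata:
  name: ${service}
spec:
  lbType: ${lb_type}
  targets:
EOF
  # One target entry per cluster name passed on the command line.
  for cluster in "$@"; do
    echo "    - clusterKey: default/default/default/${cluster}"
  done
}

# Example: preview the ActiveActive policy used above.
render_global_traffic_policy httpbin ActiveActive cluster-1 cluster-3
```

The output could then be applied in the consuming cluster with kubectl apply -n httpbin -f -, in the same namespace as the target service.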