Demos

- 1: Traffic Management

- 1.1: Outbound Traffic IP Range Exclusions

- 1.2: Retry Policy

- 1.3: TCP Traffic Routing

- 1.4: Canary Rollouts using SMI Traffic Split

- 1.5: Circuit breaking for destinations within the mesh

- 1.6: Local rate limiting of L4 connections

- 1.7: Local rate limiting of HTTP requests

- 2: Integration

- 3: Ingress

- 3.1: Ingress with Service Mesh

- 3.2: Ingress Controller - Basics

- 3.3: Ingress Controller - Advanced TLS

- 3.4: Ingress with Kubernetes Nginx Ingress Controller

- 3.5: Ingress with Traefik

- 3.6: FSM Ingress Controller - SSL Passthrough

- 4: Egress

- 4.1: Egress Passthrough to Unknown Destinations

- 4.2: Egress Gateway Passthrough to Unknown Destinations

- 4.3: Egress Policy

- 4.4: Egress Gateway Policy

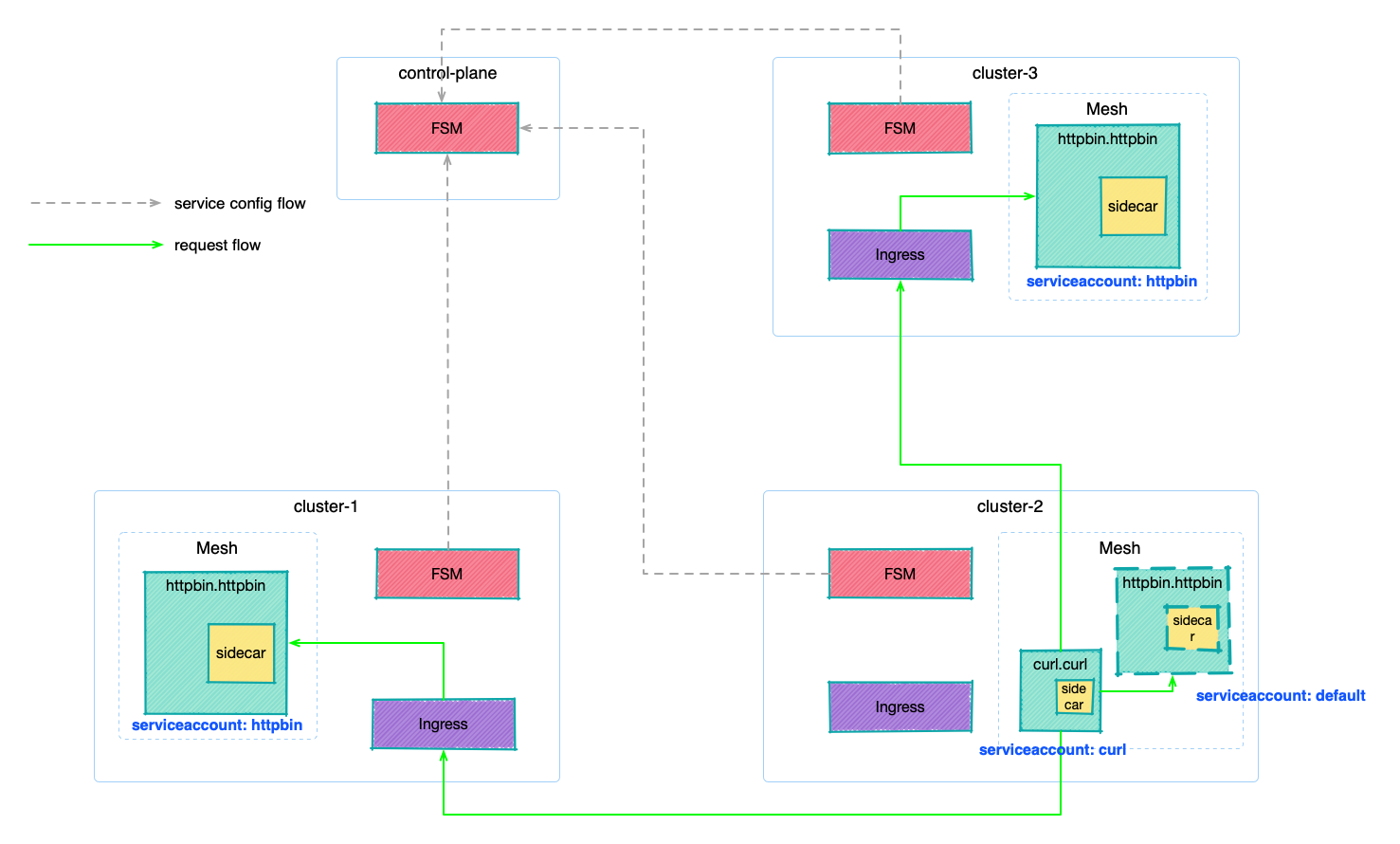

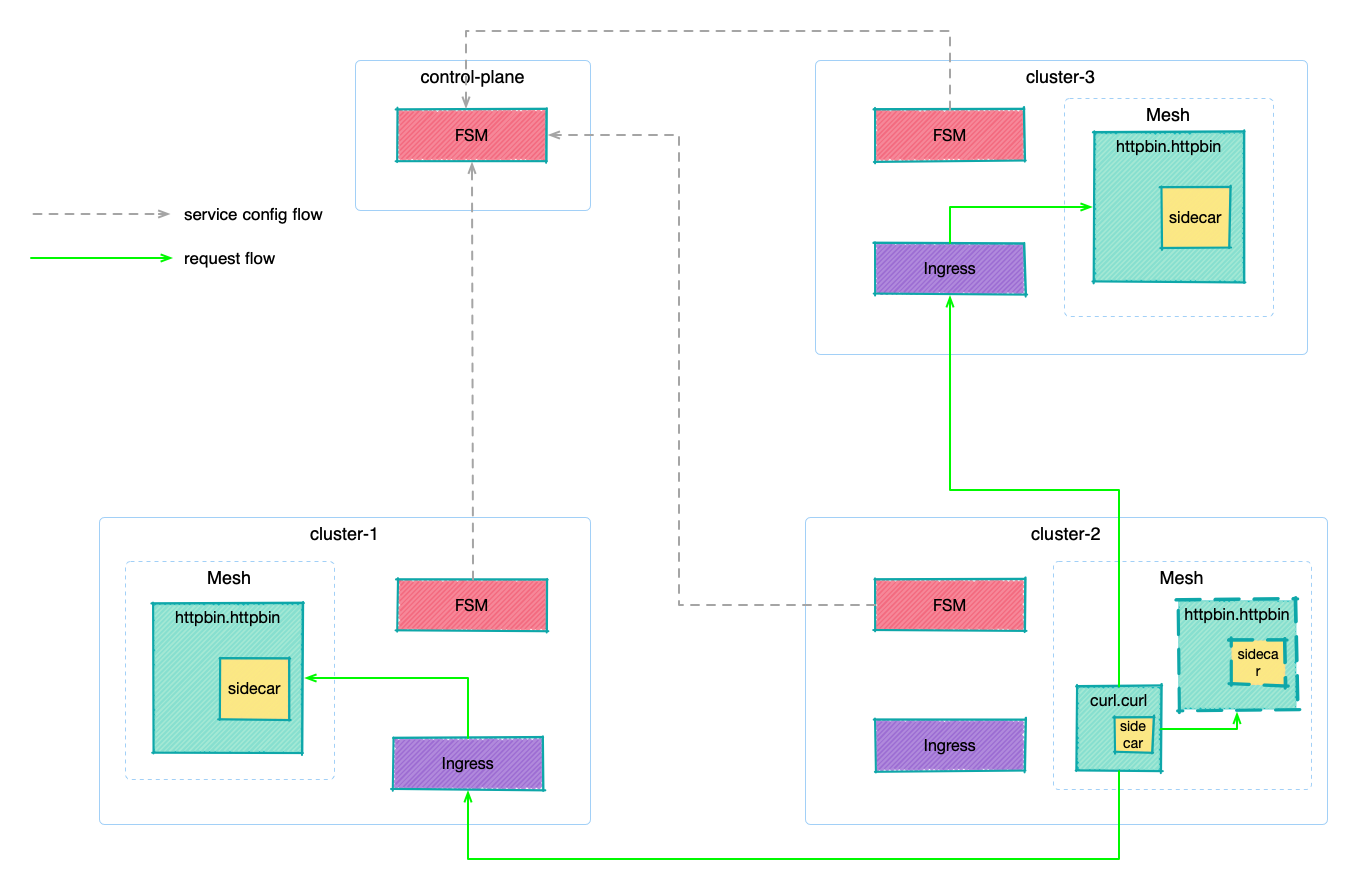

- 5: Multi Cluster

- 6: Security

- 6.1: Permissive Traffic Policy Mode

- 6.2: Bi-direction TLS with FSM Ingress

- 6.3: Service-based access control

- 6.4: IP range-based access control

- 6.5: Cert-manager Certificate Provider

- 7: Observability

- 7.1: Distributed Tracing Collaboration between FSM and OpenTelemetry

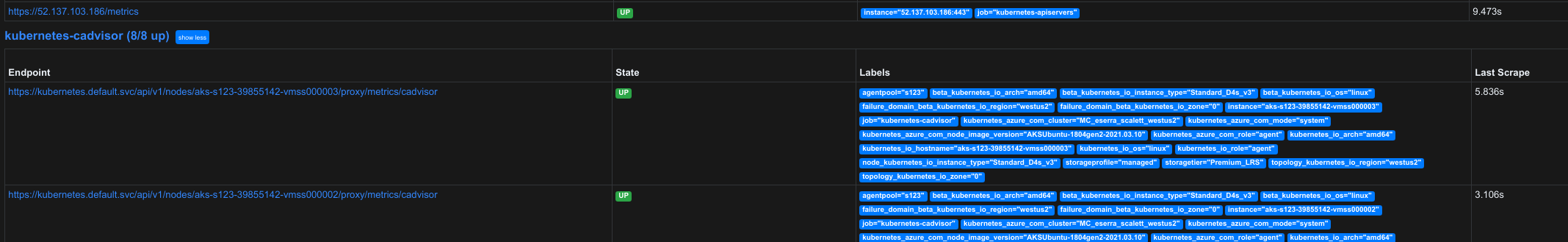

- 7.2: Integrate FSM with Prometheus and Grafana

- 8: Extending FSM with Plugins

1 - Traffic Management

1.1 - Outbound Traffic IP Range Exclusions

This guide demonstrates how to exclude outbound IP address ranges from interception by FSM’s proxy sidecar, so that traffic to them is not subject to service mesh filtering and routing policies.

Prerequisites

- Kubernetes cluster running Kubernetes v1.19.0 or greater.

- Have FSM installed.

- Have `kubectl` available to interact with the API server.

- Have the `fsm` CLI available for managing the service mesh.

Demo

The following demo shows an HTTP curl client making HTTP requests to the httpbin.org website directly using its IP address. We will explicitly disable the egress functionality to ensure traffic to a non-mesh destination (httpbin.org in this demo) is not able to egress the pod.

Disable mesh-wide egress passthrough.

export fsm_namespace=fsm-system # Replace fsm-system with the namespace where FSM is installed
kubectl patch meshconfig fsm-mesh-config -n "$fsm_namespace" -p '{"spec":{"traffic":{"enableEgress":false}}}' --type=merge

Deploy the `curl` client into the `curl` namespace after enrolling its namespace in the mesh.

# Create the curl namespace
kubectl create namespace curl
# Add the namespace to the mesh
fsm namespace add curl
# Deploy curl client in the curl namespace
kubectl apply -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/samples/curl/curl.yaml -n curl

Confirm the `curl` client pod is up and running.

$ kubectl get pods -n curl
NAME                    READY   STATUS    RESTARTS   AGE
curl-54ccc6954c-9rlvp   2/2     Running   0          20s

Retrieve the public IP address for the `httpbin.org` website. For the purpose of this demo, we will test with a single IP range to be excluded from traffic interception. In this example, we will use the IP address `54.91.118.50`, represented by the IP range `54.91.118.50/32`, to make HTTP requests with and without outbound IP range exclusions configured.

$ nslookup httpbin.org
Server: 172.23.48.1
Address: 172.23.48.1#53

Non-authoritative answer:
Name: httpbin.org
Address: 54.91.118.50
Name: httpbin.org
Address: 54.166.163.67
Name: httpbin.org
Address: 34.231.30.52
Name: httpbin.org
Address: 34.199.75.4

Note: Replace `54.91.118.50` with a valid IP address returned by the above command in subsequent steps.

Confirm the `curl` client is unable to make successful HTTP requests to the `httpbin.org` website running on `http://54.91.118.50:80`.

$ kubectl exec -n curl -ti "$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')" -c curl -- curl -I http://54.91.118.50:80
curl: (7) Failed to connect to 54.91.118.50 port 80: Connection refused
command terminated with exit code 7

The failure above is expected because, by default, outbound traffic is redirected via the Pipy proxy sidecar running on the `curl` client’s pod, and the proxy subjects this traffic to service mesh policies, which do not allow it.

Program FSM to exclude the IP range `54.91.118.50/32`.

kubectl patch meshconfig fsm-mesh-config -n "$fsm_namespace" -p '{"spec":{"traffic":{"outboundIPRangeExclusionList":["54.91.118.50/32"]}}}' --type=merge

Confirm the MeshConfig has been updated as expected.

# 54.91.118.50 is one of the IP addresses of httpbin.org
$ kubectl get meshconfig fsm-mesh-config -n "$fsm_namespace" -o jsonpath='{.spec.traffic.outboundIPRangeExclusionList}{"\n"}'
["54.91.118.50/32"]

Restart the `curl` client pod so the updated outbound IP range exclusions take effect. Existing pods must be restarted to pick up the updated configuration because the traffic interception rules are programmed by the init container only when the pod is created.

kubectl rollout restart deployment curl -n curl

Wait for the restarted pod to be up and running.

Confirm the `curl` client is able to make successful HTTP requests to the `httpbin.org` website running on `http://54.91.118.50:80`.

# 54.91.118.50 is one of the IP addresses for httpbin.org
$ kubectl exec -n curl -ti "$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')" -c curl -- curl -I http://54.91.118.50:80
HTTP/1.1 200 OK
Date: Thu, 18 Mar 2021 23:17:44 GMT
Content-Type: text/html; charset=utf-8
Content-Length: 9593
Connection: keep-alive
Server: gunicorn/19.9.0
Access-Control-Allow-Origin: *
Access-Control-Allow-Credentials: true

Confirm that HTTP requests to other IP addresses of the `httpbin.org` website that are not excluded fail.

# 34.199.75.4 is one of the IP addresses for httpbin.org
$ kubectl exec -n curl -ti "$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')" -c curl -- curl -I http://34.199.75.4:80
curl: (7) Failed to connect to 34.199.75.4 port 80: Connection refused
command terminated with exit code 7
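The exclusion behaviour in this demo comes down to CIDR membership. The Python sketch below uses a hypothetical helper, not FSM code. It assumes a destination bypasses the sidecar exactly when its IP falls inside some CIDR listed in `outboundIPRangeExclusionList`. Under that assumption, `54.91.118.50` succeeds while `34.199.75.4` is still intercepted.

```python
import ipaddress


def is_excluded(dest_ip: str, exclusion_list: list) -> bool:
    """Hypothetical model: True if dest_ip lies in any excluded CIDR range."""
    addr = ipaddress.ip_address(dest_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in exclusion_list)


# Value copied from the MeshConfig patch in this demo.
exclusions = ["54.91.118.50/32"]

for ip in ["54.91.118.50", "34.199.75.4"]:
    verdict = "bypasses the sidecar" if is_excluded(ip, exclusions) else "intercepted by the sidecar"
    print(f"{ip}: {verdict}")
```

A `/32` entry matches a single address. Excluding all of a service's addresses requires listing each of its ranges.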

1.2 - Retry Policy

This guide demonstrates how to configure a retry policy for a client and server application within the service mesh.

Prerequisites

- Kubernetes cluster running Kubernetes v1.19.0 or greater.

- Have `kubectl` available to interact with the API server.

- Have the `fsm` CLI available for managing the service mesh.

Demo

Install FSM with permissive mode and retry policy enabled.

fsm install --set=fsm.enablePermissiveTrafficPolicy=true --set=fsm.featureFlags.enableRetryPolicy=true

Deploy the `httpbin` service into the `httpbin` namespace after enrolling its namespace in the mesh. The `httpbin` service runs on port `14001`.

kubectl create namespace httpbin
fsm namespace add httpbin
kubectl apply -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/samples/httpbin/httpbin.yaml -n httpbin

Confirm the `httpbin` service and pods are up and running.

kubectl get svc,pod -n httpbin

The output should look similar to the following:

NAME      TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)     AGE
httpbin   ClusterIP   10.96.198.23   <none>        14001/TCP   20s

NAME                     READY   STATUS    RESTARTS   AGE
httpbin-5b8b94b9-lt2vs   2/2     Running   0          20s

Deploy the `curl` client into the `curl` namespace after enrolling its namespace in the mesh.

kubectl create namespace curl
fsm namespace add curl
kubectl apply -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/samples/curl/curl.yaml -n curl

Confirm the `curl` pod is up and running.

kubectl get pods -n curl

The output should look similar to the following:

NAME                    READY   STATUS    RESTARTS   AGE
curl-54ccc6954c-9rlvp   2/2     Running   0          20s

Apply the Retry policy so that requests from the `curl` ServiceAccount to the `httpbin` Service are retried when they receive a `5xx` response code.

kubectl apply -f - <<EOF
kind: Retry
apiVersion: policy.flomesh.io/v1alpha1
metadata:
  name: retry
  namespace: curl
spec:
  source:
    kind: ServiceAccount
    name: curl
    namespace: curl
  destinations:
  - kind: Service
    name: httpbin
    namespace: httpbin
  retryPolicy:
    retryOn: "5xx"
    perTryTimeout: 1s
    numRetries: 5
    retryBackoffBaseInterval: 1s
EOF

Send an HTTP request that returns status code `503` from the `curl` pod to the `httpbin` service.

curl_client="$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')"
kubectl exec "$curl_client" -n curl -c curl -- curl -sI httpbin.httpbin.svc.cluster.local:14001/status/503

The returned result might look like the following:

HTTP/1.1 503 SERVICE UNAVAILABLE
server: gunicorn
date: Tue, 14 Feb 2023 11:11:51 GMT
content-type: text/html; charset=utf-8
access-control-allow-origin: *
access-control-allow-credentials: true
content-length: 0
connection: keep-alive

Query the proxy stats for traffic from `curl` to `httpbin`.

curl_client="$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')"
fsm proxy get stats -n curl "$curl_client" | grep upstream_rq_retry

The number of times the request from the `curl` pod to the `httpbin` pod was retried with exponential backoff should equal the `numRetries` field in the retry policy.

The `upstream_rq_retry_limit_exceeded` stat shows the number of requests that were not retried further because the maximum number of retries (`numRetries`) had been reached.

cluster.httpbin/httpbin|14001.upstream_rq_retry: 4
cluster.httpbin/httpbin|14001.upstream_rq_retry_backoff_exponential: 4
cluster.httpbin/httpbin|14001.upstream_rq_retry_backoff_ratelimited: 0
cluster.httpbin/httpbin|14001.upstream_rq_retry_limit_exceeded: 1
cluster.httpbin/httpbin|14001.upstream_rq_retry_overflow: 0
cluster.httpbin/httpbin|14001.upstream_rq_retry_success: 0

Send an HTTP request that returns a non-5xx status code from the `curl` pod to the `httpbin` service. Because `retryOn` is set to `5xx`, this request is not retried.

curl_client="$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')"
kubectl exec "$curl_client" -n curl -c curl -- curl -sI httpbin.httpbin.svc.cluster.local:14001/status/404

The returned result might look something like the following:

HTTP/1.1 404 NOT FOUND
server: gunicorn
date: Tue, 14 Feb 2023 11:18:56 GMT
content-type: text/html; charset=utf-8
access-control-allow-origin: *
access-control-allow-credentials: true
content-length: 0
connection: keep-alive
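The Python model below makes the roles of `retryOn`, `numRetries` and `retryBackoffBaseInterval` concrete. It is hypothetical and is not the Pipy sidecar's implementation. The doubling backoff, the lack of jitter and the omission of `perTryTimeout` are simplifying assumptions. The model shows why a request to `/status/503` exhausts its retry budget, which is the case counted by `upstream_rq_retry_limit_exceeded`, while a `404` is returned immediately.

```python
from typing import Callable, List, Tuple


def is_retriable(status: int) -> bool:
    """retryOn: "5xx" -> only 500-599 responses are retried."""
    return 500 <= status <= 599


def send_with_retries(upstream: Callable[[], int], num_retries: int = 5,
                      base_interval: float = 1.0) -> Tuple[int, int, bool, List[float]]:
    """Return (final_status, retries, limit_exceeded, backoff_delays).

    Assumed backoff: base_interval * 2**n before retry n+1. Delays are
    recorded instead of slept so the model runs instantly.
    """
    delays: List[float] = []
    retries = 0
    status = upstream()
    while is_retriable(status):
        if retries == num_retries:
            return status, retries, True, delays  # retry budget exhausted
        delays.append(base_interval * 2 ** retries)
        retries += 1
        status = upstream()
    return status, retries, False, delays


print(send_with_retries(lambda: 503))  # like /status/503: every attempt fails
print(send_with_retries(lambda: 404))  # like /status/404: never retried
```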

1.3 - TCP Traffic Routing

This guide demonstrates a TCP client and server application within the service mesh communicating using FSM’s TCP routing capability.

Prerequisites

- Kubernetes cluster running Kubernetes v1.19.0 or greater.

- Have FSM installed.

- Have `kubectl` available to interact with the API server.

- Have the `fsm` CLI available for managing the service mesh.

Demo

The following demo shows a TCP client sending data to a tcp-echo server, which then echoes back the data to the client over a TCP connection.

Set the namespace where FSM is installed.

fsm_namespace=fsm-system # Replace fsm-system with the namespace where FSM is installed if different

Deploy the `tcp-echo` service in the `tcp-demo` namespace. The `tcp-echo` service runs on port `9000` with the `appProtocol` field set to `tcp`. This tells FSM to use TCP routing for traffic directed to the `tcp-echo` service on port `9000`.

# Create the tcp-demo namespace
kubectl create namespace tcp-demo
# Add the namespace to the mesh
fsm namespace add tcp-demo
# Deploy the service
kubectl apply -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/apps/tcp-echo.yaml -n tcp-demo

Confirm the `tcp-echo` service and pod are up and running.

$ kubectl get svc,po -n tcp-demo
NAME               TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)    AGE
service/tcp-echo   ClusterIP   10.0.216.68   <none>        9000/TCP   97s

NAME                            READY   STATUS    RESTARTS   AGE
pod/tcp-echo-6656b7c4f8-zt92q   2/2     Running   0          97s

Deploy the `curl` client into the `curl` namespace.

# Create the curl namespace
kubectl create namespace curl
# Add the namespace to the mesh
fsm namespace add curl
# Deploy curl client in the curl namespace
kubectl apply -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/samples/curl/curl.yaml -n curl

Confirm the `curl` client pod is up and running.

$ kubectl get pods -n curl
NAME                    READY   STATUS    RESTARTS   AGE
curl-54ccc6954c-9rlvp   2/2     Running   0          20s

Using Permissive Traffic Policy Mode

We will enable service discovery using permissive traffic policy mode, which allows application connectivity to be established without the need for explicit SMI policies.

Enable permissive traffic policy mode.

kubectl patch meshconfig fsm-mesh-config -n "$fsm_namespace" -p '{"spec":{"traffic":{"enablePermissiveTrafficPolicyMode":true}}}' --type=merge

Confirm the `curl` client is able to send and receive a response from the `tcp-echo` service using TCP routing.

$ kubectl exec -n curl -ti "$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')" -c curl -- sh -c 'echo hello | nc tcp-echo.tcp-demo 9000'
echo response: hello

The `tcp-echo` service should echo back the data sent by the client. In the above example, the client sends `hello`, and the `tcp-echo` service responds with `echo response: hello`.
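The exchange performed by `echo hello | nc tcp-echo.tcp-demo 9000` can be reproduced locally. The Python sketch below runs a stand-in echo server on `127.0.0.1`, not the actual `tcp-echo` image. The `echo response: ` prefix is copied from the output above. A client sends one payload and reads the reply, illustrating the raw TCP byte stream that this demo routes.

```python
import socket
import threading

PREFIX = b"echo response: "  # response prefix observed in the demo output


def serve_once(server_sock: socket.socket) -> None:
    """Accept one connection, read until the client half-closes, then reply."""
    conn, _ = server_sock.accept()
    with conn:
        data = b""
        while chunk := conn.recv(1024):
            data += chunk
        conn.sendall(PREFIX + data)


def tcp_echo_roundtrip(payload: bytes) -> bytes:
    """Send payload to a local stand-in echo server and return its reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server_sock:
        server_sock.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server_sock.listen(1)
        server_sock.settimeout(5)
        port = server_sock.getsockname()[1]
        worker = threading.Thread(target=serve_once, args=(server_sock,))
        worker.start()
        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            client.sendall(payload)
            client.shutdown(socket.SHUT_WR)  # tell the server the payload is complete
            reply = b""
            while chunk := client.recv(1024):
                reply += chunk
        worker.join()
    return reply


print(tcp_echo_roundtrip(b"hello\n").decode(), end="")
```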

Using SMI Traffic Policy Mode

When using SMI traffic policy mode, explicit traffic policies must be configured to allow application connectivity. We will set up SMI policies to allow the curl client to communicate with the tcp-echo service on port 9000.

Enable SMI traffic policy mode by disabling permissive traffic policy mode.

kubectl patch meshconfig fsm-mesh-config -n "$fsm_namespace" -p '{"spec":{"traffic":{"enablePermissiveTrafficPolicyMode":false}}}' --type=merge

Confirm the `curl` client is unable to send and receive a response from the `tcp-echo` service in the absence of SMI policies.

$ kubectl exec -n curl -ti "$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')" -c curl -- sh -c 'echo hello | nc tcp-echo.tcp-demo 9000'
command terminated with exit code 1

Configure SMI traffic access and routing policies.

kubectl apply -f - <<EOF
# TCP route to allow access to tcp-echo:9000
apiVersion: specs.smi-spec.io/v1alpha4
kind: TCPRoute
metadata:
  name: tcp-echo-route
  namespace: tcp-demo
spec:
  matches:
    ports:
    - 9000
---
# Traffic target to allow curl app to access tcp-echo service using a TCPRoute
kind: TrafficTarget
apiVersion: access.smi-spec.io/v1alpha3
metadata:
  name: tcp-access
  namespace: tcp-demo
spec:
  destination:
    kind: ServiceAccount
    name: tcp-echo
    namespace: tcp-demo
  sources:
  - kind: ServiceAccount
    name: curl
    namespace: curl
  rules:
  - kind: TCPRoute
    name: tcp-echo-route
EOF

Confirm the `curl` client is able to send and receive a response from the `tcp-echo` service using the SMI TCP route.

$ kubectl exec -n curl -ti "$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')" -c curl -- sh -c 'echo hello | nc tcp-echo.tcp-demo 9000'
echo response: hello
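In SMI traffic policy mode, a connection is denied unless a TrafficTarget allows it. The Python sketch below is a simplified, hypothetical model of that check and is not FSM's implementation. A connection is allowed only if a TrafficTarget meets all three conditions:

- It names the destination ServiceAccount.
- It lists the source ServiceAccount.
- It references a TCPRoute in its own namespace whose ports include the destination port.

The in-memory data mirrors the two resources applied above.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class ServiceAccount:
    name: str
    namespace: str


@dataclass
class TrafficTarget:
    namespace: str
    destination: ServiceAccount
    sources: List[ServiceAccount]
    tcp_route_names: List[str]


# Mirrors the TCPRoute and TrafficTarget applied above.
TCP_ROUTES: Dict[Tuple[str, str], Set[int]] = {("tcp-demo", "tcp-echo-route"): {9000}}
TARGETS = [
    TrafficTarget(
        namespace="tcp-demo",
        destination=ServiceAccount("tcp-echo", "tcp-demo"),
        sources=[ServiceAccount("curl", "curl")],
        tcp_route_names=["tcp-echo-route"],
    )
]


def is_allowed(src: ServiceAccount, dst: ServiceAccount, port: int) -> bool:
    """Deny by default; allow only when some TrafficTarget matches all three."""
    for target in TARGETS:
        if target.destination != dst or src not in target.sources:
            continue
        for route in target.tcp_route_names:
            if port in TCP_ROUTES.get((target.namespace, route), set()):
                return True
    return False


curl_sa = ServiceAccount("curl", "curl")
echo_sa = ServiceAccount("tcp-echo", "tcp-demo")
print(is_allowed(curl_sa, echo_sa, 9000))  # allowed by tcp-access
print(is_allowed(curl_sa, echo_sa, 9001))  # port not covered by tcp-echo-route
```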

1.4 - Canary Rollouts using SMI Traffic Split

This guide demonstrates how to perform Canary rollouts using the SMI Traffic Split configuration.

Prerequisites

- Kubernetes cluster running Kubernetes v1.19.0 or greater.

- Have FSM installed.

- Have `kubectl` available to interact with the API server.

- Have the `fsm` CLI available for managing the service mesh.

Demonstration

Explanation

In this demo, we use two applications, curl and httpbin (implemented with Pipy), as the client and server respectively. The service has two versions, v1 and v2, which are simulated by deploying httpbin-v1 and httpbin-v2.

Observant readers may notice that these demos often use Pipy to implement httpbin. This is because Pipy makes it easy to customize response content, which in turn makes test results easy to observe.

Prerequisites

- Kubernetes cluster

- kubectl CLI

Installing the Service Mesh

Download the FSM CLI.

system=$(uname -s | tr '[:upper:]' '[:lower:]')

arch=$(uname -m | sed -E 's/x86_/amd/' | sed -E 's/aarch/arm/')

release=v1.3.3

curl -L https://github.com/flomesh-io/fsm/releases/download/${release}/fsm-${release}-${system}-${arch}.tar.gz | tar -vxzf -

cp ./${system}-${arch}/fsm /usr/local/bin/fsm
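The `system` and `arch` substitutions determine which archive is fetched. The hypothetical Python helper below reproduces that mapping. For example, an `aarch64` Linux host resolves to the `linux-arm64` archive. The assumption that the archive unpacks into a `<system>-<arch>` directory is inferred from the directory layout used by the `cp` step, not from release documentation.

```python
import re
from typing import Tuple


def fsm_download(uname_s: str, uname_m: str, release: str) -> Tuple[str, str]:
    """Return (tarball URL, directory to copy `fsm` from) for a platform.

    Mirrors the shell above: lowercase `uname -s`, then apply the same two
    sed substitutions to `uname -m` (x86_ -> amd, aarch -> arm).
    """
    system = uname_s.lower()
    arch = re.sub("aarch", "arm", re.sub("x86_", "amd", uname_m, count=1), count=1)
    url = (f"https://github.com/flomesh-io/fsm/releases/download/"
           f"{release}/fsm-{release}-{system}-{arch}.tar.gz")
    return url, f"{system}-{arch}"


print(fsm_download("Linux", "x86_64", "v1.3.3"))
print(fsm_download("Linux", "aarch64", "v1.3.3"))
```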

Install the service mesh and wait for all components to run successfully.

fsm install --timeout 120s

Deploying the Sample Application

The curl and httpbin applications run in their own namespaces, which are added to the mesh with the `fsm namespace add` command.

kubectl create ns httpbin

kubectl create ns curl

fsm namespace add httpbin curl

Deploy v1 of httpbin, which returns `Hi, I am v1!` for every HTTP request. Other applications access httpbin through the `httpbin` Service.

kubectl apply -n httpbin -f - <<EOF
apiVersion: v1
kind: Service
metadata:
  name: httpbin
spec:
  ports:
  - name: pipy
    port: 8080
    targetPort: 8080
    protocol: TCP
  selector:
    app: pipy
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin-v1
  labels:
    app: pipy
    version: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pipy
      version: v1
  template:
    metadata:
      labels:
        app: pipy
        version: v1
    spec:
      containers:
      - name: pipy
        image: flomesh/pipy:latest
        ports:
        - name: pipy
          containerPort: 8080
        command:
        - pipy
        - -e
        - |
          pipy()
          .listen(8080)
          .serveHTTP(new Message('Hi, I am v1!\n'))
EOF

Deploy the curl application.

kubectl apply -n curl -f - <<EOF
apiVersion: v1
kind: Service
metadata:
  name: curl
  labels:
    app: curl
    service: curl
spec:
  ports:
  - name: http
    port: 80
  selector:
    app: curl
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: curl
spec:
  replicas: 1
  selector:
    matchLabels:
      app: curl
  template:
    metadata:
      labels:
        app: curl
    spec:
      containers:
      - image: curlimages/curl
        imagePullPolicy: IfNotPresent
        name: curl
        command: ["sleep", "365d"]
EOF

Wait for all applications to run successfully.

kubectl wait --for=condition=ready pod --all -A

Test application access.

curl_client="$(kubectl get pod -n curl -l app=curl -o jsonpath='{.items[0].metadata.name}')"

# send four requests

kubectl exec "$curl_client" -n curl -c curl -- curl -s httpbin.httpbin:8080 httpbin.httpbin:8080 httpbin.httpbin:8080 httpbin.httpbin:8080

The expected output is:

Hi, I am v1!

Hi, I am v1!

Hi, I am v1!

Hi, I am v1!

Next, deploy the v2 version of httpbin.

Deploying Version v2

The v2 version of httpbin returns `Hi, I am v2!` for all HTTP requests. Before deploying it, we need to set a default traffic split strategy. Otherwise, the new version's pods would immediately become reachable through the `httpbin` Service, whose selector (`app: pipy`) also matches them.

Create the `httpbin-v1` Service. Its selector is the same as that of the `httpbin` Service plus a `version: v1` label. For now, both Services have the same endpoints.

kubectl apply -n httpbin -f - <<EOF
apiVersion: v1
kind: Service
metadata:
  name: httpbin-v1
spec:
  ports:
  - name: pipy
    port: 8080
    targetPort: 8080
    protocol: TCP
  selector:
    app: pipy
    version: v1
EOF

Apply the TrafficSplit strategy to route all traffic to httpbin-v1.

kubectl apply -n httpbin -f - <<EOF
apiVersion: split.smi-spec.io/v1alpha4
kind: TrafficSplit
metadata:
  name: httpbin-split
spec:
  service: httpbin
  backends:
  - service: httpbin-v1
    weight: 100
EOF

Then deploy the new version.

kubectl apply -n httpbin -f - <<EOF
apiVersion: v1
kind: Service
metadata:
  name: httpbin-v2
spec:
  ports:
  - name: pipy
    port: 8080
    targetPort: 8080
    protocol: TCP
  selector:
    app: pipy
    version: v2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin-v2
  labels:
    app: pipy
    version: v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pipy
      version: v2
  template:
    metadata:
      labels:
        app: pipy
        version: v2
    spec:
      containers:
      - name: pipy
        image: flomesh/pipy:latest
        ports:
        - name: pipy
          containerPort: 8080
        command:
        - pipy
        - -e
        - |
          pipy()
          .listen(8080)
          .serveHTTP(new Message('Hi, I am v2!\n'))
EOF

Wait for the new version to run successfully.

kubectl wait --for=condition=ready pod -n httpbin -l version=v2

If you send requests again, v1 httpbin still handles them.

kubectl exec "$curl_client" -n curl -c curl -- curl -s httpbin.httpbin:8080 httpbin.httpbin:8080 httpbin.httpbin:8080 httpbin.httpbin:8080

Hi, I am v1!

Hi, I am v1!

Hi, I am v1!

Hi, I am v1!

Canary Release

Modify the traffic split strategy to direct 25% of the traffic to version v2.

kubectl apply -n httpbin -f - <<EOF
apiVersion: split.smi-spec.io/v1alpha4
kind: TrafficSplit
metadata:
  name: httpbin-split
spec:
  service: httpbin
  backends:
  - service: httpbin-v1
    weight: 75
  - service: httpbin-v2
    weight: 25
EOF

Sending requests again shows that about 1/4 of the traffic is handled by version v2.

kubectl exec "$curl_client" -n curl -c curl -- curl -s httpbin.httpbin:8080 httpbin.httpbin:8080 httpbin.httpbin:8080 httpbin.httpbin:8080

Hi, I am v1!

Hi, I am v2!

Hi, I am v1!

Hi, I am v1!
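A 75/25 weighting guarantees a 3:1 ratio only over enough requests. Exactly which request in a small batch lands on v2 depends on how the sidecar balances between backends. The Python sketch below illustrates one possible strategy, smooth weighted round-robin, the algorithm NGINX uses for weighted upstreams. Whether FSM's sidecar uses this algorithm is an assumption. The sketch only demonstrates that these weights yield one v2 pick per cycle of four.

```python
from collections import Counter
from itertools import islice
from typing import Dict, Iterator


def smooth_weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Yield backend names so that each appears in proportion to its weight."""
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    while True:
        for name, weight in weights.items():
            current[name] += weight  # every backend gains its weight
        chosen = max(current, key=current.get)
        current[chosen] -= total     # the winner pays back the total
        yield chosen


picker = smooth_weighted_round_robin({"httpbin-v1": 75, "httpbin-v2": 25})
print(Counter(islice(picker, 100)))
```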

Advanced Canary Release

Suppose the functionality of the `/test` endpoint has been updated in v2. To control risk, we want only a portion of the traffic to `/test` to reach v2, while requests to other endpoints, such as `/demo`, should go only to v1.

We need to introduce another resource, a route definition, to describe the traffic visiting the `/test` endpoint. Here we define two routes:

- `httpbin-test`: traffic using the `GET` method to access `/test`.

- `httpbin-all`: traffic using the `GET` method to access `/`. Note that this is a prefix match, expressed with the regex `.*`.

kubectl apply -n httpbin -f - <<EOF
apiVersion: specs.smi-spec.io/v1alpha4
kind: HTTPRouteGroup
metadata:
  name: httpbin-test
spec:
  matches:
  - name: test
    pathRegex: "/test"
    methods:
    - GET
---
apiVersion: specs.smi-spec.io/v1alpha4
kind: HTTPRouteGroup
metadata:
  name: httpbin-all
spec:
  matches:
  - name: test
    pathRegex: ".*"
    methods:
    - GET
EOF

Then update the traffic split strategy to associate the routes with the split, and create a new TrafficSplit for the remaining traffic.

kubectl apply -n httpbin -f - <<EOF
apiVersion: split.smi-spec.io/v1alpha4
kind: TrafficSplit
metadata:
  name: httpbin-split
spec:
  service: httpbin
  matches:
  - name: httpbin-test
    kind: HTTPRouteGroup
  backends:
  - service: httpbin-v1
    weight: 75
  - service: httpbin-v2
    weight: 25
---
apiVersion: split.smi-spec.io/v1alpha4
kind: TrafficSplit
metadata:
  name: httpbin-all
spec:
  service: httpbin
  matches:
  - name: httpbin-all
    kind: HTTPRouteGroup
  backends:
  - service: httpbin-v1
    weight: 100
EOF

Now, when accessing the `/test` endpoint, only about 25% of the traffic goes to the new version.

kubectl exec "$curl_client" -n curl -c curl -- curl -s httpbin.httpbin:8080/test httpbin.httpbin:8080/test httpbin.httpbin:8080/test httpbin.httpbin:8080/test

Hi, I am v1!

Hi, I am v2!

Hi, I am v1!

Hi, I am v1!

And requests to the /demo endpoint all go to the old v1 version.

kubectl exec "$curl_client" -n curl -c curl -- curl -s httpbin.httpbin:8080/demo httpbin.httpbin:8080/demo httpbin.httpbin:8080/demo httpbin.httpbin:8080/demo

Hi, I am v1!

Hi, I am v1!

Hi, I am v1!

Hi, I am v1!

This matches expectations.
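The routing decision can be modelled in two steps. First, find the TrafficSplit whose HTTPRouteGroup matches the request. Then apply that split's backend weights. The Python sketch below is a hypothetical model and rests on two assumptions: `pathRegex` must match the entire path, and the more specific `httpbin-test` route is checked before the catch-all `httpbin-all` route. FSM's actual precedence rules may differ.

```python
import re

# (TrafficSplit name, path regex, allowed methods, backend weights), copied from
# the HTTPRouteGroups and TrafficSplits above. Evaluation order is an assumption.
ROUTES = [
    ("httpbin-split", re.compile(r"/test"), {"GET"}, {"httpbin-v1": 75, "httpbin-v2": 25}),
    ("httpbin-all", re.compile(r".*"), {"GET"}, {"httpbin-v1": 100}),
]


def select_split(method: str, path: str):
    """Return (split name, backend weights) for the first matching route, or None."""
    for split_name, pattern, methods, backends in ROUTES:
        if method in methods and pattern.fullmatch(path):
            return split_name, backends
    return None


for path in ("/test", "/demo"):
    print(path, select_split("GET", path))
```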

1.5 - Circuit breaking for destinations within the mesh

This guide demonstrates how to configure circuit breaking for destinations that are part of an FSM-managed service mesh.

Prerequisites

- Kubernetes cluster running Kubernetes v1.19.0 or greater.

- Have FSM installed.

- Have `kubectl` available to interact with the API server.

- Have the `fsm` CLI available for managing the service mesh.

- FSM version >= v1.0.0.

Demo

The following demo shows the load-testing client fortio sending traffic to the `fortio` service. We will see how applying circuit breakers to traffic destined for the `fortio` service affects the fortio client when the configured circuit breaking limits trip.

For simplicity, enable permissive traffic policy mode so that explicit SMI traffic access policies are not required for application connectivity within the mesh.

export FSM_NAMESPACE=fsm-system # Replace fsm-system with the namespace where FSM is installed

kubectl patch meshconfig fsm-mesh-config -n "$FSM_NAMESPACE" -p '{"spec":{"traffic":{"enablePermissiveTrafficPolicyMode":true}}}' --type=merge

Deploy services

Deploy the `fortio` server service.

kubectl create namespace server
fsm namespace add server
kubectl apply -n server -f - <<EOF
apiVersion: v1
kind: Service
metadata:
  name: fortio
  labels:
    app: fortio
    service: fortio
spec:
  ports:
  - port: 8080
    name: http-8080
  - port: 8078
    name: tcp-8078
  - port: 8079
    name: grpc-8079
  selector:
    app: fortio
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fortio
spec:
  replicas: 1
  selector:
    matchLabels:
      app: fortio
  template:
    metadata:
      labels:
        app: fortio
    spec:
      containers:
      - name: fortio
        image: fortio/fortio:latest_release
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          name: http
        - containerPort: 8078
          name: tcp
        - containerPort: 8079
          name: grpc
EOF

Deploy the `fortio-client` client.

kubectl create namespace client
fsm namespace add client
kubectl apply -n client -f - <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fortio-client
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fortio-client
spec:
  replicas: 1
  selector:
    matchLabels:
      app: fortio-client
  template:
    metadata:
      labels:
        app: fortio-client
    spec:
      serviceAccountName: fortio-client
      containers:
      - name: fortio-client
        image: fortio/fortio:latest_release
        imagePullPolicy: Always
EOF

Test

Confirm that `fortio-client` can successfully make HTTP requests to the `fortio` service in the `server` namespace on port 8080.

The test calls the service with 10 concurrent connections (`-c 10`) and sends 1000 requests (`-n 1000`) at 200 queries per second (`-qps 200`). The `status=511:20` query parameter asks the fortio echo endpoint to return HTTP status code 511 for 20% of requests.

fortio_client="$(kubectl get pod -n client -l app=fortio-client -o jsonpath='{.items[0].metadata.name}')"

kubectl exec "$fortio_client" -n client -c fortio-client -- fortio load -quiet -c 10 -n 1000 -qps 200 -p 99.99 http://fortio.server.svc.cluster.local:8080/echo?status=511:20

The returned result might look something like the following:

Sockets used: 205 (for perfect keepalive, would be 10)

Uniform: false, Jitter: false, Catchup allowed: true

IP addresses distribution:

10.43.43.151:8080: 205

Code 200 : 804 (80.4 %)

Code 511 : 196 (19.6 %)

All done 1000 calls (plus 0 warmup) 2.845 ms avg, 199.8 qps

Next, apply a circuit breaker configuration using the UpstreamTrafficSetting resource for traffic directed to the `fortio` service.

Policy: Error Request Count Triggers Circuit Breaker

The error count threshold is set to `errorAmountThreshold=100`. Once 100 error responses have been counted, the circuit breaker opens, and for the next 10s (`degradedTimeWindow`) requests receive `503 Service Unavailable!`.

kubectl apply -f - <<EOF
apiVersion: policy.flomesh.io/v1alpha1
kind: UpstreamTrafficSetting
metadata:
  name: http-circuit-breaking
  namespace: server
spec:
  host: fortio.server.svc.cluster.local
  connectionSettings:
    http:
      circuitBreaking:
        statTimeWindow: 1m
        minRequestAmount: 200
        errorAmountThreshold: 100
        degradedTimeWindow: 10s
        degradedStatusCode: 503
        degradedResponseContent: 'Service Unavailable!'
EOF

Set 20% of the server’s responses to return error code 511. At a 20% error rate, 100 errors accumulate after roughly 500 requests, so around 400 requests should succeed before the circuit breaker opens.

kubectl exec "$fortio_client" -n client -c fortio-client -- fortio load -quiet -c 10 -n 1000 -qps 200 -p 99.99 http://fortio.server.svc.cluster.local:8080/echo\?status\=511:20

The results match expectations: once the circuit breaker opens, requests return the 503 status code.

Sockets used: 570 (for perfect keepalive, would be 10)

Uniform: false, Jitter: false, Catchup allowed: true

IP addresses distribution:

10.43.43.151:8080: 570

Code 200 : 430 (43.0 %)

Code 503 : 470 (47.0 %)

Code 511 : 100 (10.0 %)

All done 1000 calls (plus 0 warmup) 3.376 ms avg, 199.8 qps

The sidecar logs show that the error count reached 100 at the 530th request, which triggered the circuit breaker.

2023-02-08 01:08:01.456 [INF] [circuit_breaker] total/slowAmount/errorAmount (open) server/fortio|8080 530 0 100
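The trip conditions used in this section can be summarized in a small model. The Python sketch below is hypothetical and is not the sidecar's implementation. It makes three assumptions:

- A response counts as an error when its status is 5xx.
- A response counts as slow when its latency is at least `slowTimeThreshold`.
- No threshold is evaluated until `minRequestAmount` requests have completed.

The model ignores `statTimeWindow` rollover and `degradedTimeWindow` recovery. It replays a deterministic stream in which every fifth response is a 511. In that stream the error count policy opens the breaker at the 500th request. The live run above opened at the 530th request because its 20% failures are not evenly spaced.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CircuitBreakingSettings:
    # Hypothetical names mirroring the circuitBreaking fields of UpstreamTrafficSetting.
    min_request_amount: int = 200
    error_amount_threshold: Optional[int] = None
    error_ratio_threshold: Optional[float] = None
    slow_time_threshold: float = 0.2  # seconds (200ms)
    slow_amount_threshold: Optional[int] = None
    slow_ratio_threshold: Optional[float] = None


class CircuitBreakerModel:
    """Tracks one stats window and reports whether the breaker should open."""

    def __init__(self, settings: CircuitBreakingSettings):
        self.s = settings
        self.total = self.errors = self.slow = 0

    def record(self, status: int, latency: float) -> bool:
        self.total += 1
        self.errors += int(500 <= status <= 599)
        self.slow += int(latency >= self.s.slow_time_threshold)
        if self.total < self.s.min_request_amount:
            return False  # not enough samples to judge yet
        s = self.s
        return (
            (s.error_amount_threshold is not None and self.errors >= s.error_amount_threshold)
            or (s.error_ratio_threshold is not None and self.errors / self.total >= s.error_ratio_threshold)
            or (s.slow_amount_threshold is not None and self.slow >= s.slow_amount_threshold)
            or (s.slow_ratio_threshold is not None and self.slow / self.total >= s.slow_ratio_threshold)
        )


def first_trip(settings: CircuitBreakingSettings, n: int = 1000) -> Optional[int]:
    """Replay n fast requests where every 5th returns 511; return the trip index."""
    breaker = CircuitBreakerModel(settings)
    for i in range(1, n + 1):
        if breaker.record(511 if i % 5 == 0 else 200, latency=0.003):
            return i
    return None


print(first_trip(CircuitBreakingSettings(error_amount_threshold=100)))  # 500
print(first_trip(CircuitBreakingSettings(error_ratio_threshold=0.10)))  # 200
```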

Policy: Error Rate Triggers Circuit Breaker

Here we change the trigger from error count to error rate with `errorRatioThreshold=0.10`. The circuit breaker opens when the error rate reaches 10%, stays open for 10s, and returns `503 Service Unavailable!`. Note that the minimum number of requests is still 200.

kubectl apply -f - <<EOF
apiVersion: policy.flomesh.io/v1alpha1
kind: UpstreamTrafficSetting
metadata:
  name: http-circuit-breaking
  namespace: server
spec:
  host: fortio.server.svc.cluster.local
  connectionSettings:
    http:
      circuitBreaking:
        statTimeWindow: 1m
        minRequestAmount: 200
        errorRatioThreshold: 0.10
        degradedTimeWindow: 10s
        degradedStatusCode: 503
        degradedResponseContent: 'Service Unavailable!'
EOF

Set 20% of the server’s responses to return error code 511.

kubectl exec "$fortio_client" -n client -c fortio-client -- fortio load -quiet -c 10 -n 1000 -qps 200 -p 99.99 http://fortio.server.svc.cluster.local:8080/echo\?status\=511:20

The output shows that the circuit breaker opened after 200 requests, and the remaining 800 requests were rejected with 503.

Sockets used: 836 (for perfect keepalive, would be 10)

Uniform: false, Jitter: false, Catchup allowed: true

IP addresses distribution:

10.43.43.151:8080: 836

Code 200 : 164 (16.4 %)

Code 503 : 800 (80.0 %)

Code 511 : 36 (3.6 %)

All done 1000 calls (plus 0 warmup) 3.605 ms avg, 199.8 qps

The sidecar logs show that once the minimum request count (200) was reached, the error rate was already above the 10% threshold (36 errors out of 200 requests, or 18%), which triggered the circuit breaker.

2023-02-08 01:19:25.874 [INF] [circuit_breaker] total/slowAmount/errorAmount (close) server/fortio|8080 200 0 36

Policy: Slow Call Request Count Triggers Circuit Breaker

To test slow calls, first run a baseline load that adds a 200ms delay to 20% of the requests.

kubectl exec "$fortio_client" -n client -c fortio-client -- fortio load -quiet -c 10 -n 1000 -qps 200 -p 50,78,79,80,81,82,90,95 http://fortio.server.svc.cluster.local:8080/echo\?delay\=200ms:20

The percentiles confirm the injected delay. About 78% of requests complete in under 10ms, while from the 79th percentile upward requests take just over 200ms.

# target 50% 0.000999031

# target 78% 0.0095

# target 79% 0.200175

# target 80% 0.200467

# target 81% 0.200759

# target 82% 0.20105

# target 90% 0.203385

# target 95% 0.204844

285103 max 0.000285103 sum 0.000285103

Sockets used: 10 (for perfect keepalive, would be 10)

Uniform: false, Jitter: false, Catchup allowed: true

IP addresses distribution:

10.43.43.151:8080: 10

Code 200 : 1000 (100.0 %)

All done 1000 calls (plus 0 warmup) 44.405 ms avg, 149.6 qps

Configure the policy as follows:

- Set the slow call time threshold (`slowTimeThreshold`) to 200ms.

- Set the slow call count threshold (`slowAmountThreshold`) to 100.

kubectl apply -f - <<EOF
apiVersion: policy.flomesh.io/v1alpha1
kind: UpstreamTrafficSetting
metadata:
  name: http-circuit-breaking
  namespace: server
spec:
  host: fortio.server.svc.cluster.local
  connectionSettings:
    http:
      circuitBreaking:
        statTimeWindow: 1m
        minRequestAmount: 200
        slowTimeThreshold: 200ms
        slowAmountThreshold: 100
        degradedTimeWindow: 10s
        degradedStatusCode: 503
        degradedResponseContent: 'Service Unavailable!'
EOF

Inject a 200ms delay for 20% of requests.

kubectl exec "$fortio_client" -n client -c fortio-client -- fortio load -quiet -c 10 -n 1000 -qps 200 -p 50,78,79,80,81,82,90,95 http://fortio.server.svc.cluster.local:8080/echo\?delay\=200ms:20

A total of 504 requests succeeded, about 20% of which took at least 200ms. The slow call count therefore reached the threshold of 100 and triggered the circuit breaker.

# target 50% 0.00246111

# target 78% 0.00393846

# target 79% 0.00398974

# target 80% 0.00409756

# target 81% 0.00421951

# target 82% 0.00434146

# target 90% 0.202764

# target 95% 0.220036

Sockets used: 496 (for perfect keepalive, would be 10)

Uniform: false, Jitter: false, Catchup allowed: true

IP addresses distribution:

10.43.43.151:8080: 496

Code 200 : 504 (50.4 %)

Code 503 : 496 (49.6 %)

All done 1000 calls (plus 0 warmup) 24.086 ms avg, 199.8 qps

The sidecar logs show that the slow call count reached 100 at the 504th request, which triggered the circuit breaker.

2023-02-08 07:27:01.106 [INF] [circuit_breaker] total/slowAmount/errorAmount (open) server/fortio|8080 504 100 0

Policy: Slow Call Rate Triggers Circuit Breaking

The slow call rate threshold is set to 0.10. The circuit breaker therefore opens when 10% or more of the requests in the statistics window take longer than 200ms (`slowTimeThreshold`).

kubectl apply -f - <<EOF
apiVersion: policy.flomesh.io/v1alpha1
kind: UpstreamTrafficSetting
metadata:
  name: http-circuit-breaking
  namespace: server
spec:
  host: fortio.server.svc.cluster.local
  connectionSettings:
    http:
      circuitBreaking:
        statTimeWindow: 1m
        minRequestAmount: 200
        slowTimeThreshold: 200ms
        slowRatioThreshold: 0.1
        degradedTimeWindow: 10s
        degradedStatusCode: 503
        degradedResponseContent: 'Service Unavailable!'
EOF

Add a 200ms delay to 20% of requests.

kubectl exec "$fortio_client" -n client -c fortio-client -- fortio load -quiet -c 10 -n 1000 -qps 200 -p 50,78,79,80,81,82,90,95 http://fortio.server.svc.cluster.local:8080/echo\?delay\=200ms:20

The output shows 202 successful requests, which meets the minimum number of requests (minRequestAmount) required for the policy to take effect. Of these, 28 were slow calls (about 14%, per the sidecar log below), exceeding the 10% threshold and triggering the circuit breaker.

# target 50% 0.00305539
# target 78% 0.00387172
# target 79% 0.00390087
# target 80% 0.00393003
# target 81% 0.00395918
# target 82% 0.00398834
# target 90% 0.00458915
# target 95% 0.00497674
Sockets used: 798 (for perfect keepalive, would be 10)
Uniform: false, Jitter: false, Catchup allowed: true
IP addresses distribution:
10.43.43.151:8080: 798
Code 200 : 202 (20.2 %)
Code 503 : 798 (79.8 %)
All done 1000 calls (plus 0 warmup) 10.133 ms avg, 199.8 qps

The sidecar logs show that at the 202nd request the number of slow requests was 28, which triggered the circuit breaker.

2023-02-08 07:38:25.284 [INF] [circuit_breaker] total/slowAmount/errorAmount (open) server/fortio|8080 202 28 0
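The ratio rule can be checked the same way. This is again a hypothetical helper with an assumed rule: the breaker opens when at least minRequestAmount requests were seen in the window and slow / total reaches slowRatioThreshold. awk is used because bash arithmetic is integer-only. With the values from the log, 28 / 202 is about 0.139, which is above 0.1.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of FSM: a simplified model of the slow-call-ratio rule.
# Assumption: open when total >= minRequestAmount and slow/total >= slowRatioThreshold.
breaker_open_by_ratio() {
  awk -v total="$1" -v slow="$2" -v min="$3" -v ratio="$4" 'BEGIN {
    # Below minRequestAmount the rule is not evaluated (this also avoids dividing by zero).
    if (total >= min && total > 0 && slow / total >= ratio)
      print "open"
    else
      print "closed"
  }'
}

# Prints the observed slow-call ratio with three decimals.
slow_ratio() {
  awk -v total="$1" -v slow="$2" 'BEGIN { printf "%.3f\n", slow / total }'
}

breaker_open_by_ratio 202 28 200 0.1   # values from the sidecar log: prints "open"
slow_ratio 202 28                      # prints "0.139"
```

Note the role of minRequestAmount in this model: the same 28 slow calls out of only 150 requests would not open the breaker, because the window has not yet seen enough traffic.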

1.6 - Local rate limiting of L4 connections

This guide demonstrates how to configure rate limiting for L4 TCP connections destined to a target host that is part of an FSM-managed service mesh.

Prerequisites

- Kubernetes cluster running Kubernetes v1.19.0 or greater.

- Have FSM installed.

- Have kubectl available to interact with the API server.

- Have the fsm CLI available for managing the service mesh.

- FSM version >= v1.2.0.

Demo

The following demo shows a client fortio-client sending TCP traffic to the fortio TCP echo service. The fortio service echoes TCP messages back to the client. We will see the impact of applying local TCP rate limiting policies targeting the fortio service to control the throughput of traffic destined to the service backend.

For simplicity, enable permissive traffic policy mode so that explicit SMI traffic access policies are not required for application connectivity within the mesh.

export FSM_NAMESPACE=fsm-system # Replace fsm-system with the namespace where FSM is installed
kubectl patch meshconfig fsm-mesh-config -n "$FSM_NAMESPACE" -p '{"spec":{"traffic":{"enablePermissiveTrafficPolicyMode":true}}}' --type=merge

Deploy the fortio TCP echo service in the demo namespace after adding its namespace to the mesh. The fortio TCP echo service runs on port 8078.

# Create the demo namespace
kubectl create namespace demo
# Add the namespace to the mesh
fsm namespace add demo
# Deploy fortio TCP echo in the demo namespace
kubectl apply -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/samples/fortio/fortio.yaml -n demo

Confirm the fortio service pod is up and running.

kubectl get pods -n demo
NAME                     READY   STATUS    RESTARTS   AGE
fortio-c4bd7857f-7mm6w   2/2     Running   0          22m

Deploy the fortio-client app in the demo namespace. We will use this client to send TCP traffic to the fortio TCP echo service deployed previously.

kubectl apply -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/samples/fortio/fortio-client.yaml -n demo

Confirm the fortio-client pod is up and running.

kubectl get pods -n demo
NAME                            READY   STATUS    RESTARTS   AGE
fortio-client-b9b7bbfb8-prq7r   2/2     Running   0          7s

Confirm the fortio-client app is able to successfully make TCP connections and send data to the fortio TCP echo service on port 8078. We call the fortio service with 3 concurrent connections (-c 3) and send 10 calls (-n 10).

fortio_client="$(kubectl get pod -n demo -l app=fortio-client -o jsonpath='{.items[0].metadata.name}')"
kubectl exec "$fortio_client" -n demo -c fortio-client -- fortio load -qps -1 -c 3 -n 10 tcp://fortio.demo.svc.cluster.local:8078

Fortio 1.32.3 running at -1 queries per second, 8->8 procs, for 10 calls: tcp://fortio.demo.svc.cluster.local:8078
20:41:47 I tcprunner.go:238> Starting tcp test for tcp://fortio.demo.svc.cluster.local:8078 with 3 threads at -1.0 qps
Starting at max qps with 3 thread(s) [gomax 8] for exactly 10 calls (3 per thread + 1)
20:41:47 I periodic.go:723> T001 ended after 34.0563ms : 3 calls. qps=88.0894283876992
20:41:47 I periodic.go:723> T000 ended after 35.3117ms : 4 calls. qps=113.2769025563765
20:41:47 I periodic.go:723> T002 ended after 44.0273ms : 3 calls. qps=68.13954069406937
Ended after 44.2097ms : 10 calls. qps=226.19
Aggregated Function Time : count 10 avg 0.01096615 +/- 0.01386 min 0.001588 max 0.0386716 sum 0.1096615
# range, mid point, percentile, count
>= 0.001588 <= 0.002 , 0.001794 , 40.00, 4
> 0.002 <= 0.003 , 0.0025 , 60.00, 2
> 0.003 <= 0.004 , 0.0035 , 70.00, 1
> 0.025 <= 0.03 , 0.0275 , 90.00, 2
> 0.035 <= 0.0386716 , 0.0368358 , 100.00, 1
# target 50% 0.0025
# target 75% 0.02625
# target 90% 0.03
# target 99% 0.0383044
# target 99.9% 0.0386349
Error cases : no data
Sockets used: 3 (for perfect no error run, would be 3)
Total Bytes sent: 240, received: 240
tcp OK : 10 (100.0 %)
All done 10 calls (plus 0 warmup) 10.966 ms avg, 226.2 qps

As seen above, all the TCP connections from the fortio-client pod succeeded.

Total Bytes sent: 240, received: 240
tcp OK : 10 (100.0 %)
All done 10 calls (plus 0 warmup) 10.966 ms avg, 226.2 qps

Next, apply a local rate limiting policy to rate limit L4 TCP connections to the fortio.demo.svc.cluster.local service to 1 connection per minute.

kubectl apply -f - <<EOF
apiVersion: policy.flomesh.io/v1alpha1
kind: UpstreamTrafficSetting
metadata:
  name: tcp-rate-limit
  namespace: demo
spec:
  host: fortio.demo.svc.cluster.local
  rateLimit:
    local:
      tcp:
        connections: 1
        unit: minute
EOF

Confirm no traffic has been rate limited yet by examining the stats on the fortio backend pod. Quote the grep pattern so the shell does not treat it as a file glob.

fortio_server="$(kubectl get pod -n demo -l app=fortio -o jsonpath='{.items[0].metadata.name}')"
fsm proxy get stats "$fortio_server" -n demo | grep 'fortio.*8078.*rate_limit'

No connections should be reported as rate limited at this point.

Confirm TCP connections are rate limited.

kubectl exec "$fortio_client" -n demo -c fortio-client -- fortio load -qps -1 -c 3 -n 10 tcp://fortio.demo.svc.cluster.local:8078

Fortio 1.32.3 running at -1 queries per second, 8->8 procs, for 10 calls: tcp://fortio.demo.svc.cluster.local:8078
20:49:38 I tcprunner.go:238> Starting tcp test for tcp://fortio.demo.svc.cluster.local:8078 with 3 threads at -1.0 qps
Starting at max qps with 3 thread(s) [gomax 8] for exactly 10 calls (3 per thread + 1)
20:49:38 E tcprunner.go:203> [2] Unable to read: read tcp 10.244.1.19:59244->10.96.83.254:8078: read: connection reset by peer
20:49:38 E tcprunner.go:203> [0] Unable to read: read tcp 10.244.1.19:59246->10.96.83.254:8078: read: connection reset by peer
20:49:38 E tcprunner.go:203> [2] Unable to read: read tcp 10.244.1.19:59258->10.96.83.254:8078: read: connection reset by peer
20:49:38 E tcprunner.go:203> [0] Unable to read: read tcp 10.244.1.19:59260->10.96.83.254:8078: read: connection reset by peer
20:49:38 E tcprunner.go:203> [2] Unable to read: read tcp 10.244.1.19:59266->10.96.83.254:8078: read: connection reset by peer
20:49:38 I periodic.go:723> T002 ended after 9.643ms : 3 calls. qps=311.1065021258944
20:49:38 E tcprunner.go:203> [0] Unable to read: read tcp 10.244.1.19:59268->10.96.83.254:8078: read: connection reset by peer
20:49:38 E tcprunner.go:203> [0] Unable to read: read tcp 10.244.1.19:59274->10.96.83.254:8078: read: connection reset by peer
20:49:38 I periodic.go:723> T000 ended after 14.8212ms : 4 calls. qps=269.8836801338623
20:49:38 I periodic.go:723> T001 ended after 20.3458ms : 3 calls. qps=147.45057948077735
Ended after 20.5468ms : 10 calls. qps=486.69
Aggregated Function Time : count 10 avg 0.00438853 +/- 0.004332 min 0.0014184 max 0.0170216 sum 0.0438853
# range, mid point, percentile, count
>= 0.0014184 <= 0.002 , 0.0017092 , 20.00, 2
> 0.002 <= 0.003 , 0.0025 , 50.00, 3
> 0.003 <= 0.004 , 0.0035 , 70.00, 2
> 0.004 <= 0.005 , 0.0045 , 90.00, 2
> 0.016 <= 0.0170216 , 0.0165108 , 100.00, 1
# target 50% 0.003
# target 75% 0.00425
# target 90% 0.005
# target 99% 0.0169194
# target 99.9% 0.0170114
Error cases : count 7 avg 0.0034268714 +/- 0.0007688 min 0.0024396 max 0.0047932 sum 0.0239881
# range, mid point, percentile, count
>= 0.0024396 <= 0.003 , 0.0027198 , 42.86, 3
> 0.003 <= 0.004 , 0.0035 , 71.43, 2
> 0.004 <= 0.0047932 , 0.0043966 , 100.00, 2
# target 50% 0.00325
# target 75% 0.00409915
# target 90% 0.00451558
# target 99% 0.00476544
# target 99.9% 0.00479042
Sockets used: 8 (for perfect no error run, would be 3)
Total Bytes sent: 240, received: 72
tcp OK : 3 (30.0 %)
tcp short read : 7 (70.0 %)
All done 10 calls (plus 0 warmup) 4.389 ms avg, 486.7 qps

As seen above, only 30% of the 10 calls succeeded, while the remaining 70% were rate limited. This is because we applied a rate limiting policy of 1 connection per minute at the fortio backend service, and the fortio-client was able to use that 1 connection to make 3 of the 10 calls, resulting in a 30% success rate.

Examine the sidecar stats to further confirm this.

fsm proxy get stats "$fortio_server" -n demo | grep 'fortio.*8078.*rate_limit'
local_rate_limit.inbound_demo/fortio_8078_tcp.rate_limited: 7

Next, let's update our rate limiting policy to allow a burst of connections. Bursts allow a given number of connections over the baseline rate of 1 connection per minute defined by our rate limiting policy.

kubectl apply -f - <<EOF
apiVersion: policy.flomesh.io/v1alpha1
kind: UpstreamTrafficSetting
metadata:
  name: tcp-rate-limit
  namespace: demo
spec:
  host: fortio.demo.svc.cluster.local
  rateLimit:
    local:
      tcp:
        connections: 1
        unit: minute
        burst: 10
EOF

Confirm the burst capability allows a burst of connections within a small window of time.

kubectl exec "$fortio_client" -n demo -c fortio-client -- fortio load -qps -1 -c 3 -n 10 tcp://fortio.demo.svc.cluster.local:8078

Fortio 1.32.3 running at -1 queries per second, 8->8 procs, for 10 calls: tcp://fortio.demo.svc.cluster.local:8078
20:56:56 I tcprunner.go:238> Starting tcp test for tcp://fortio.demo.svc.cluster.local:8078 with 3 threads at -1.0 qps
Starting at max qps with 3 thread(s) [gomax 8] for exactly 10 calls (3 per thread + 1)
20:56:56 I periodic.go:723> T002 ended after 5.1568ms : 3 calls. qps=581.7561278312132
20:56:56 I periodic.go:723> T001 ended after 5.2334ms : 3 calls. qps=573.2411052088509
20:56:56 I periodic.go:723> T000 ended after 5.2464ms : 4 calls. qps=762.4275693809088
Ended after 5.2711ms : 10 calls. qps=1897.1
Aggregated Function Time : count 10 avg 0.00153124 +/- 0.001713 min 0.00033 max 0.0044054 sum 0.0153124
# range, mid point, percentile, count
>= 0.00033 <= 0.001 , 0.000665 , 70.00, 7
> 0.003 <= 0.004 , 0.0035 , 80.00, 1
> 0.004 <= 0.0044054 , 0.0042027 , 100.00, 2
# target 50% 0.000776667
# target 75% 0.0035
# target 90% 0.0042027
# target 99% 0.00438513
# target 99.9% 0.00440337
Error cases : no data
Sockets used: 3 (for perfect no error run, would be 3)
Total Bytes sent: 240, received: 240
tcp OK : 10 (100.0 %)
All done 10 calls (plus 0 warmup) 1.531 ms avg, 1897.1 qps

As seen above, all the TCP connections from the fortio-client pod succeeded.

Total Bytes sent: 240, received: 240
tcp OK : 10 (100.0 %)
All done 10 calls (plus 0 warmup) 1.531 ms avg, 1897.1 qps

Further, examine the stats to confirm the burst allows additional connections to go through. The number of rate-limited connections has not increased since the previous rate limit test, which ran before we configured the burst setting.

fsm proxy get stats "$fortio_server" -n demo | grep 'fortio.*8078.*rate_limit'
local_rate_limit.inbound_demo/fortio_8078_tcp.rate_limited: 0
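The connection counts in this section are consistent with a token-bucket limiter. The sketch below is an illustrative model only, not FSM's implementation; it assumes the bucket starts full, holds connections + burst tokens, and is refilled with connections tokens once per unit. Under that assumption, 8 connection attempts in the same instant with connections: 1 and no burst yield 1 allowed and 7 rejected, matching the 8 sockets and the rate_limited: 7 stat from the first rate-limited run, while burst: 10 lets all 3 connections of the burst run through.

```shell
#!/usr/bin/env bash
# Illustrative token-bucket model (an assumption, not FSM's code).
# Usage: simulate_bucket RATE BURST INTERVAL_SECONDS [ARRIVAL_SECONDS...]
# Arrival times must be sorted in ascending order.
simulate_bucket() {
  local rate=$1 burst=$2 interval=$3
  shift 3
  if (( $# == 0 )); then
    echo "allowed=0 rejected=0"
    return 0
  fi
  printf '%s\n' "$@" | awk -v rate="$rate" -v burst="$burst" -v interval="$interval" '
    BEGIN { cap = rate + burst; tokens = cap; last = 0; allowed = 0; rejected = 0 }
    {
      # Refill rate tokens for every full interval elapsed, capped at the bucket size.
      n = int(($1 - last) / interval)
      if (n > 0) {
        tokens += n * rate
        if (tokens > cap) tokens = cap
        last += n * interval
      }
      # Each arrival consumes one token or is rejected.
      if (tokens >= 1) { tokens--; allowed++ } else { rejected++ }
    }
    END { printf "allowed=%d rejected=%d\n", allowed, rejected }'
}

# 1 connection per minute, no burst: 8 connection attempts at t=0.
simulate_bucket 1 0 60 0 0 0 0 0 0 0 0   # prints "allowed=1 rejected=7"
# 1 connection per minute with burst 10: 3 connection attempts at t=0.
simulate_bucket 1 10 60 0 0 0            # prints "allowed=3 rejected=0"
```

Whether FSM sizes the bucket as connections + burst or as burst alone does not change either result here, since both runs stay well within the larger capacity.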

1.7 - Local rate limiting of HTTP requests

This guide demonstrates how to configure rate limiting for HTTP requests destined to a target host that is part of an FSM-managed service mesh.

Prerequisites

- Kubernetes cluster running Kubernetes v1.19.0 or greater.

- Have FSM installed.

- Have kubectl available to interact with the API server.

- Have the fsm CLI available for managing the service mesh.

- FSM version >= v1.2.0.

Demo

The following demo shows a client sending HTTP requests to the fortio service. We will see the impact of applying local HTTP rate limiting policies targeting the fortio service to control the throughput of requests destined to the service backend.

For simplicity, enable permissive traffic policy mode so that explicit SMI traffic access policies are not required for application connectivity within the mesh.

export FSM_NAMESPACE=fsm-system # Replace fsm-system with the namespace where FSM is installed
kubectl patch meshconfig fsm-mesh-config -n "$FSM_NAMESPACE" -p '{"spec":{"traffic":{"enablePermissiveTrafficPolicyMode":true}}}' --type=merge

Deploy the fortio HTTP service in the demo namespace after adding its namespace to the mesh. The fortio HTTP service runs on port 8080.

# Create the demo namespace
kubectl create namespace demo
# Add the namespace to the mesh
fsm namespace add demo
# Deploy fortio in the demo namespace
kubectl apply -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/samples/fortio/fortio.yaml -n demo

Confirm the fortio service pod is up and running.

kubectl get pods -n demo
NAME                     READY   STATUS    RESTARTS   AGE
fortio-c4bd7857f-7mm6w   2/2     Running   0          22m

Deploy the fortio-client app in the demo namespace. We will use this client to send HTTP requests to the fortio HTTP service deployed previously.

kubectl apply -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/samples/fortio/fortio-client.yaml -n demo

Confirm the fortio-client pod is up and running.

kubectl get pods -n demo
NAME                            READY   STATUS    RESTARTS   AGE
fortio-client-b9b7bbfb8-prq7r   2/2     Running   0          7s

Confirm the fortio-client app is able to successfully make HTTP requests to the fortio HTTP service on port 8080. We call the fortio service with 3 concurrent connections (-c 3) and send 10 requests (-n 10).

fortio_client="$(kubectl get pod -n demo -l app=fortio-client -o jsonpath='{.items[0].metadata.name}')"
kubectl exec "$fortio_client" -n demo -c fortio-client -- fortio load -c 3 -n 10 http://fortio.demo.svc.cluster.local:8080

You will get a result similar to the following.

Fortio 1.33.0 running at 8 queries per second, 8->8 procs, for 10 calls: http://fortio.demo.svc.cluster.local:8080
20:58:07 I httprunner.go:93> Starting http test for http://fortio.demo.svc.cluster.local:8080 with 3 threads at 8.0 qps and parallel warmup
Starting at 8 qps with 3 thread(s) [gomax 8] : exactly 10, 3 calls each (total 9 + 1)
20:58:08 I periodic.go:723> T002 ended after 1.1273523s : 3 calls. qps=2.661102478790348
20:58:08 I periodic.go:723> T001 ended after 1.1273756s : 3 calls. qps=2.661047480537986
20:58:08 I periodic.go:723> T000 ended after 1.5023464s : 4 calls. qps=2.662501803844972
Ended after 1.5024079s : 10 calls. qps=6.656
Sleep times : count 7 avg 0.52874391 +/- 0.03031 min 0.4865562 max 0.5604152 sum 3.7012074
Aggregated Function Time : count 10 avg 0.0050187 +/- 0.005515 min 0.0012575 max 0.0135401 sum 0.050187
# range, mid point, percentile, count
>= 0.0012575 <= 0.002 , 0.00162875 , 70.00, 7
> 0.012 <= 0.0135401 , 0.01277 , 100.00, 3
# target 50% 0.0017525
# target 75% 0.0122567
# target 90% 0.0130267
# target 99% 0.0134888
# target 99.9% 0.013535
Error cases : no data
20:58:08 I httprunner.go:190> [0] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
20:58:08 I httprunner.go:190> [1] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
20:58:08 I httprunner.go:190> [2] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
Sockets used: 3 (for perfect keepalive, would be 3)
Uniform: false, Jitter: false
IP addresses distribution:
10.96.189.159:8080: 3
Code 200 : 10 (100.0 %)
Response Header Sizes : count 10 avg 124.3 +/- 0.4583 min 124 max 125 sum 1243
Response Body/Total Sizes : count 10 avg 124.3 +/- 0.4583 min 124 max 125 sum 1243
All done 10 calls (plus 0 warmup) 5.019 ms avg, 6.7 qps

As seen above, all the HTTP requests from the fortio-client pod succeeded.

Code 200 : 10 (100.0 %)

Next, apply a local rate limiting policy to rate limit HTTP requests at the virtual host level to 3 requests per minute.

kubectl apply -f - <<EOF
apiVersion: policy.flomesh.io/v1alpha1
kind: UpstreamTrafficSetting
metadata:
  name: http-rate-limit
  namespace: demo
spec:
  host: fortio.demo.svc.cluster.local
  rateLimit:
    local:
      http:
        requests: 3
        unit: minute
EOF

Confirm no HTTP requests have been rate limited yet by examining the stats on the fortio backend pod.

fortio_server="$(kubectl get pod -n demo -l app=fortio -o jsonpath='{.items[0].metadata.name}')"
fsm proxy get stats "$fortio_server" -n demo | grep 'http_local_rate_limiter.http_local_rate_limit.rate_limited'
http_local_rate_limiter.http_local_rate_limit.rate_limited: 0

Confirm HTTP requests are rate limited.

kubectl exec "$fortio_client" -n demo -c fortio-client -- fortio load -c 3 -n 10 http://fortio.demo.svc.cluster.local:8080

Fortio 1.33.0 running at 8 queries per second, 8->8 procs, for 10 calls: http://fortio.demo.svc.cluster.local:8080
21:06:36 I httprunner.go:93> Starting http test for http://fortio.demo.svc.cluster.local:8080 with 3 threads at 8.0 qps and parallel warmup
Starting at 8 qps with 3 thread(s) [gomax 8] : exactly 10, 3 calls each (total 9 + 1)
21:06:37 W http_client.go:838> [0] Non ok http code 429 (HTTP/1.1 429)
21:06:37 W http_client.go:838> [1] Non ok http code 429 (HTTP/1.1 429)
21:06:37 W http_client.go:838> [2] Non ok http code 429 (HTTP/1.1 429)
21:06:37 W http_client.go:838> [0] Non ok http code 429 (HTTP/1.1 429)
21:06:37 W http_client.go:838> [1] Non ok http code 429 (HTTP/1.1 429)
21:06:37 I periodic.go:723> T001 ended after 1.1269827s : 3 calls. qps=2.661975201571417
21:06:37 W http_client.go:838> [2] Non ok http code 429 (HTTP/1.1 429)
21:06:37 I periodic.go:723> T002 ended after 1.1271942s : 3 calls. qps=2.66147572441377
21:06:38 W http_client.go:838> [0] Non ok http code 429 (HTTP/1.1 429)
21:06:38 I periodic.go:723> T000 ended after 1.5021191s : 4 calls. qps=2.662904692444161
Ended after 1.5021609s : 10 calls. qps=6.6571
Sleep times : count 7 avg 0.53138026 +/- 0.03038 min 0.4943128 max 0.5602373 sum 3.7196618
Aggregated Function Time : count 10 avg 0.00318326 +/- 0.002431 min 0.0012651 max 0.0077951 sum 0.0318326
# range, mid point, percentile, count
>= 0.0012651 <= 0.002 , 0.00163255 , 60.00, 6
> 0.002 <= 0.003 , 0.0025 , 70.00, 1
> 0.005 <= 0.006 , 0.0055 , 80.00, 1
> 0.006 <= 0.007 , 0.0065 , 90.00, 1
> 0.007 <= 0.0077951 , 0.00739755 , 100.00, 1
# target 50% 0.00185302
# target 75% 0.0055
# target 90% 0.007
# target 99% 0.00771559
# target 99.9% 0.00778715
Error cases : count 7 avg 0.0016392143 +/- 0.000383 min 0.0012651 max 0.0023951 sum 0.0114745
# range, mid point, percentile, count
>= 0.0012651 <= 0.002 , 0.00163255 , 85.71, 6
> 0.002 <= 0.0023951 , 0.00219755 , 100.00, 1
# target 50% 0.00163255
# target 75% 0.00188977
# target 90% 0.00211853
# target 99% 0.00236744
# target 99.9% 0.00239233
21:06:38 I httprunner.go:190> [0] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
21:06:38 I httprunner.go:190> [1] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
21:06:38 I httprunner.go:190> [2] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
Sockets used: 7 (for perfect keepalive, would be 3)
Uniform: false, Jitter: false
IP addresses distribution:
10.96.189.159:8080: 3
Code 200 : 3 (30.0 %)
Code 429 : 7 (70.0 %)
Response Header Sizes : count 10 avg 37.2 +/- 56.82 min 0 max 124 sum 372
Response Body/Total Sizes : count 10 avg 166 +/- 27.5 min 124 max 184 sum 1660
All done 10 calls (plus 0 warmup) 3.183 ms avg, 6.7 qps

As seen above, only 3 out of 10 HTTP requests succeeded, while the remaining 7 requests were rate limited as per the rate limiting policy.

Code 200 : 3 (30.0 %)
Code 429 : 7 (70.0 %)

Examine the stats to further confirm this.

fsm proxy get stats "$fortio_server" -n demo | grep 'http_local_rate_limiter.http_local_rate_limit.rate_limited'
http_local_rate_limiter.http_local_rate_limit.rate_limited: 7

Next, let's update our rate limiting policy to allow a burst of requests. Bursts allow a given number of requests over the baseline rate of 3 requests per minute defined by our rate limiting policy.

kubectl apply -f - <<EOF
apiVersion: policy.flomesh.io/v1alpha1
kind: UpstreamTrafficSetting
metadata:
  name: http-rate-limit
  namespace: demo
spec:
  host: fortio.demo.svc.cluster.local
  rateLimit:
    local:
      http:
        requests: 3
        unit: minute
        burst: 10
EOF

Confirm the burst capability allows a burst of requests within a small window of time.

kubectl exec "$fortio_client" -n demo -c fortio-client -- fortio load -c 3 -n 10 http://fortio.demo.svc.cluster.local:8080

Fortio 1.33.0 running at 8 queries per second, 8->8 procs, for 10 calls: http://fortio.demo.svc.cluster.local:8080
21:11:04 I httprunner.go:93> Starting http test for http://fortio.demo.svc.cluster.local:8080 with 3 threads at 8.0 qps and parallel warmup
Starting at 8 qps with 3 thread(s) [gomax 8] : exactly 10, 3 calls each (total 9 + 1)
21:11:05 I periodic.go:723> T002 ended after 1.127252s : 3 calls. qps=2.6613392568831107
21:11:05 I periodic.go:723> T001 ended after 1.1273028s : 3 calls. qps=2.661219328116634
21:11:05 I periodic.go:723> T000 ended after 1.5019947s : 4 calls. qps=2.663125242718899
Ended after 1.5020768s : 10 calls. qps=6.6574
Sleep times : count 7 avg 0.53158916 +/- 0.03008 min 0.4943959 max 0.5600713 sum 3.7211241
Aggregated Function Time : count 10 avg 0.00318637 +/- 0.002356 min 0.0012401 max 0.0073302 sum 0.0318637
# range, mid point, percentile, count
>= 0.0012401 <= 0.002 , 0.00162005 , 60.00, 6
> 0.002 <= 0.003 , 0.0025 , 70.00, 1
> 0.005 <= 0.006 , 0.0055 , 80.00, 1
> 0.007 <= 0.0073302 , 0.0071651 , 100.00, 2
# target 50% 0.00184802
# target 75% 0.0055
# target 90% 0.0071651
# target 99% 0.00731369
# target 99.9% 0.00732855
Error cases : no data
21:11:05 I httprunner.go:190> [0] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
21:11:05 I httprunner.go:190> [1] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
21:11:05 I httprunner.go:190> [2] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
Sockets used: 3 (for perfect keepalive, would be 3)
Uniform: false, Jitter: false
IP addresses distribution:
10.96.189.159:8080: 3
Code 200 : 10 (100.0 %)
Response Header Sizes : count 10 avg 124 +/- 0 min 124 max 124 sum 1240
Response Body/Total Sizes : count 10 avg 124 +/- 0 min 124 max 124 sum 1240
All done 10 calls (plus 0 warmup) 3.186 ms avg, 6.7 qps

As seen above, all HTTP requests succeeded because we allowed a burst of 10 requests with our rate limiting policy.

Code 200 : 10 (100.0 %)

Further, examine the stats to confirm the burst allows additional requests to go through. The number of rate-limited requests has not increased since the previous rate limit test, which ran before we configured the burst setting.

fsm proxy get stats "$fortio_server" -n demo | grep 'http_local_rate_limiter.http_local_rate_limit.rate_limited'
http_local_rate_limiter.http_local_rate_limit.rate_limited: 0

Next, let's configure the rate limiting policy for a specific HTTP route allowed on the upstream service.

Note: Since we are using permissive traffic policy mode in the demo, an HTTP route with the wildcard path regex .* is allowed on the upstream backend, so we will configure a rate limiting policy for this route. When using SMI policies in the mesh, rate limits can instead be configured for the paths of the allowed SMI HTTP routing rules.

kubectl apply -f - <<EOF
apiVersion: policy.flomesh.io/v1alpha1
kind: UpstreamTrafficSetting
metadata:
  name: http-rate-limit
  namespace: demo
spec:
  host: fortio.demo.svc.cluster.local
  httpRoutes:
  - path: .*
    rateLimit:
      local:
        requests: 3
        unit: minute
EOF

Confirm HTTP requests are rate limited at a per-route level.

kubectl exec "$fortio_client" -n demo -c fortio-client -- fortio load -c 3 -n 10 http://fortio.demo.svc.cluster.local:8080

Fortio 1.33.0 running at 8 queries per second, 8->8 procs, for 10 calls: http://fortio.demo.svc.cluster.local:8080
21:19:34 I httprunner.go:93> Starting http test for http://fortio.demo.svc.cluster.local:8080 with 3 threads at 8.0 qps and parallel warmup
Starting at 8 qps with 3 thread(s) [gomax 8] : exactly 10, 3 calls each (total 9 + 1)
21:19:35 W http_client.go:838> [0] Non ok http code 429 (HTTP/1.1 429)
21:19:35 W http_client.go:838> [2] Non ok http code 429 (HTTP/1.1 429)
21:19:35 W http_client.go:838> [1] Non ok http code 429 (HTTP/1.1 429)
21:19:35 W http_client.go:838> [0] Non ok http code 429 (HTTP/1.1 429)
21:19:35 W http_client.go:838> [1] Non ok http code 429 (HTTP/1.1 429)
21:19:35 W http_client.go:838> [2] Non ok http code 429 (HTTP/1.1 429)
21:19:35 I periodic.go:723> T001 ended after 1.126703s : 3 calls. qps=2.6626360274180505
21:19:35 I periodic.go:723> T002 ended after 1.1267472s : 3 calls. qps=2.6625315776245104
21:19:36 W http_client.go:838> [0] Non ok http code 429 (HTTP/1.1 429)
21:19:36 I periodic.go:723> T000 ended after 1.5027817s : 4 calls. qps=2.6617305760377574
Ended after 1.5028359s : 10 calls. qps=6.6541
Sleep times : count 7 avg 0.53089959 +/- 0.03079 min 0.4903791 max 0.5604715 sum 3.7162971
Aggregated Function Time : count 10 avg 0.00369734 +/- 0.003165 min 0.0011174 max 0.0095033 sum 0.0369734
# range, mid point, percentile, count
>= 0.0011174 <= 0.002 , 0.0015587 , 60.00, 6
> 0.002 <= 0.003 , 0.0025 , 70.00, 1
> 0.007 <= 0.008 , 0.0075 , 90.00, 2
> 0.009 <= 0.0095033 , 0.00925165 , 100.00, 1
# target 50% 0.00182348
# target 75% 0.00725
# target 90% 0.008
# target 99% 0.00945297
# target 99.9% 0.00949827
Error cases : count 7 avg 0.0016556 +/- 0.0004249 min 0.0011174 max 0.0025594 sum 0.0115892
# range, mid point, percentile, count
>= 0.0011174 <= 0.002 , 0.0015587 , 85.71, 6
> 0.002 <= 0.0025594 , 0.0022797 , 100.00, 1
# target 50% 0.0015587
# target 75% 0.00186761
# target 90% 0.00216782
# target 99% 0.00252024
# target 99.9% 0.00255548
21:19:36 I httprunner.go:190> [0] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
21:19:36 I httprunner.go:190> [1] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
21:19:36 I httprunner.go:190> [2] fortio.demo.svc.cluster.local:8080 resolved to 10.96.189.159:8080
Sockets used: 7 (for perfect keepalive, would be 3)
Uniform: false, Jitter: false
IP addresses distribution:
10.96.189.159:8080: 3
Code 200 : 3 (30.0 %)
Code 429 : 7 (70.0 %)
Response Header Sizes : count 10 avg 37.2 +/- 56.82 min 0 max 124 sum 372
Response Body/Total Sizes : count 10 avg 166 +/- 27.5 min 124 max 184 sum 1660
All done 10 calls (plus 0 warmup) 3.697 ms avg, 6.7 qps

As seen above, only 3 out of 10 HTTP requests succeeded, while the remaining 7 requests were rate limited as per the rate limiting policy.

Code 200 : 3 (30.0 %)
Code 429 : 7 (70.0 %)

Examine the stats to further confirm this.

Since the previous test, 7 additional requests have been rate limited by the route-level policy, as indicated by the total of 14 rate-limited HTTP requests in the stats.

fsm proxy get stats "$fortio_server" -n demo | grep 'http_local_rate_limiter.http_local_rate_limit.rate_limited'
http_local_rate_limiter.http_local_rate_limit.rate_limited: 14
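To measure how many requests a single run rate limited, you can read the counter before and after the run and take the difference, as the route-level check does (14 in total, 7 of them from the route-level run). The helper below is hypothetical and not part of the fsm CLI; it only assumes the "name: value" line format shown in the stats output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the fsm CLI): reads `fsm proxy get stats` output
# on stdin and prints the HTTP local rate limit counter, or 0 if it is absent.
rate_limited_total() {
  awk -F': ' '/http_local_rate_limit\.rate_limited/ { value = $2 } END { print value + 0 }'
}

# Against a live mesh (uses the fortio_server variable set earlier):
#   before=$(fsm proxy get stats "$fortio_server" -n demo | rate_limited_total)
#   ... run the fortio load command ...
#   after=$(fsm proxy get stats "$fortio_server" -n demo | rate_limited_total)

# Offline example with the counter values mentioned in this section.
before=$(printf 'http_local_rate_limiter.http_local_rate_limit.rate_limited: 7\n' | rate_limited_total)
after=$(printf 'http_local_rate_limiter.http_local_rate_limit.rate_limited: 14\n' | rate_limited_total)
echo "rate limited during this run: $(( after - before ))"   # prints 7
```

Taking the difference avoids misreading a cumulative counter as the result of the latest run alone.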

2 - Integration

2.1 - SpringCloud Consul Registry Integration

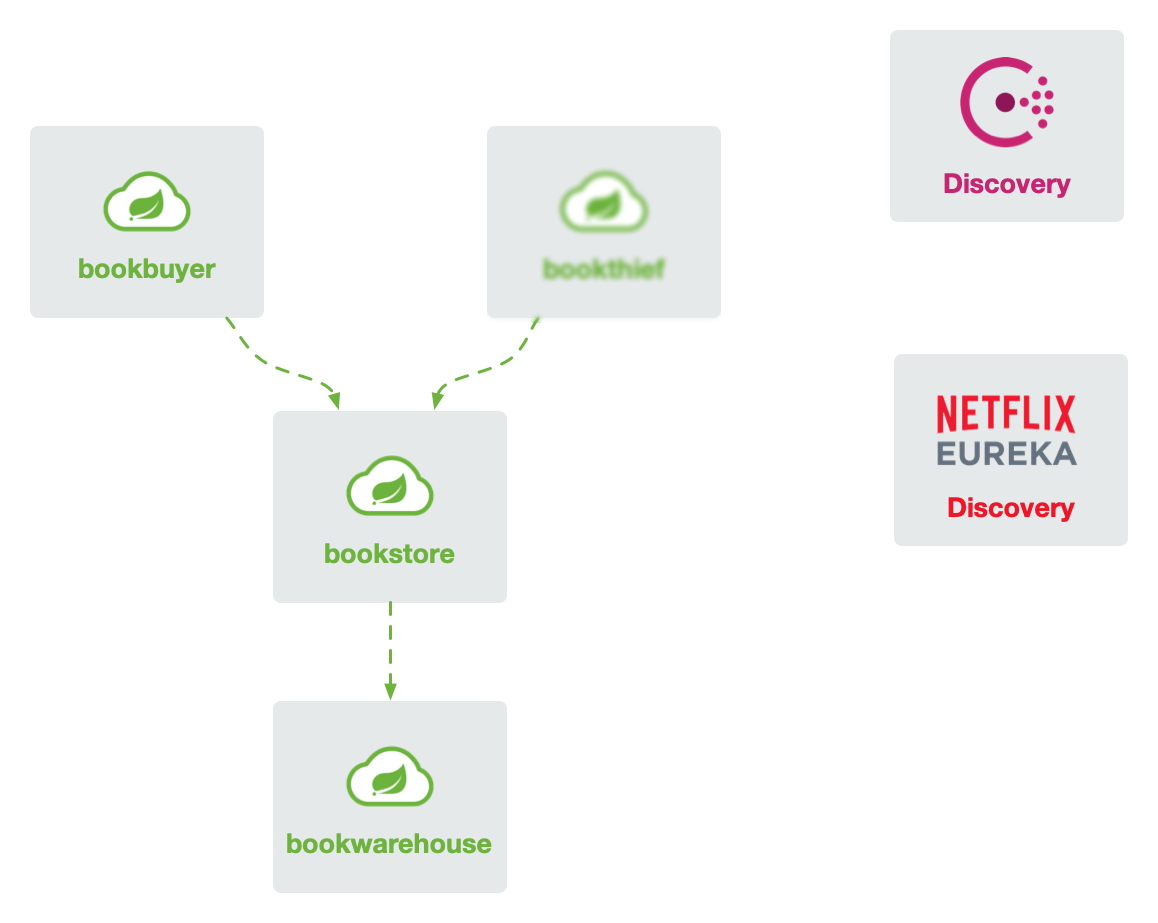

In this doc, we will demonstrate integrating Spring Cloud Consul microservices into the service mesh and testing the commonly used canary release scenario.

Demo Application

For the demonstration of Spring Cloud microservices integration into the service mesh, we have rewritten the classic BookStore application https://github.com/flomesh-io/springboot-bookstore-demo.

The application currently supports both the Netflix Eureka and HashiCorp Consul registries (selectable at compile time); see the repository documentation for how to use each.

Prerequisites

- Kubernetes cluster

- kubectl CLI

- FSM CLI

Initialization

First, clone the repository.

git clone https://github.com/flomesh-io/springboot-bookstore-demo.git

Then deploy Consul using a single-node instance for simplicity.

kubectl apply -n default -f manifests/consul.yaml

Deploying FSM

Deploy the control plane and connectors with the following parameters:

- fsm.serviceAccessMode: The service access mode. mixed supports accessing services by both Service name and pod IP.

- fsm.deployConsulConnector: Deploy the Consul connector.

- fsm.cloudConnector.consul.deriveNamespace: The namespace in which the Consul services, encapsulated as K8s Services, will reside.

- fsm.cloudConnector.consul.httpAddr: The HTTP address of Consul.

- fsm.cloudConnector.consul.passingOnly: Synchronize only healthy service nodes.

- fsm.cloudConnector.consul.suffixTag: By default, the Consul connector creates a Service in the specified namespace with the same name as the Consul service. Canary releases need a separate synchronized Service for each version, such as bookstore-v1 and bookstore-v2 for the two versions of bookstore. suffixTag names the tag whose value is appended to the Service name as a suffix; here it is version. In the example application, the version tag is set by adding the environment variable SPRING_CLOUD_CONSUL_DISCOVERY_TAGS="version=v1" to the service nodes.

export fsm_namespace=fsm-system
export fsm_mesh_name=fsm
export consul_svc_addr="$(kubectl get svc -l name=consul -o jsonpath='{.items[0].spec.clusterIP}')"

fsm install \
    --mesh-name "$fsm_mesh_name" \
    --fsm-namespace "$fsm_namespace" \
    --set=fsm.serviceAccessMode=mixed \
    --set=fsm.deployConsulConnector=true \
    --set=fsm.cloudConnector.consul.deriveNamespace=consul-derive \
    --set=fsm.cloudConnector.consul.httpAddr=$consul_svc_addr:8500 \
    --set=fsm.cloudConnector.consul.passingOnly=false \
    --set=fsm.cloudConnector.consul.suffixTag=version \
    --timeout=900s

Next, create the namespace specified above and bring it under the management of the service mesh.

kubectl create namespace consul-derive

fsm namespace add consul-derive

kubectl patch namespace consul-derive -p '{"metadata":{"annotations":{"flomesh.io/mesh-service-sync":"consul"}}}' --type=merge

Configuring Access Control Policies

By default, services within the mesh can access services outside the mesh; however, the reverse is not allowed. Therefore, set up access control policies to allow Consul to access microservices within the mesh for health checks.

kubectl apply -n consul-derive -f - <<EOF
kind: AccessControl
apiVersion: policy.flomesh.io/v1alpha1
metadata:
  name: consul
spec:
  sources:
  - kind: Service
    namespace: default
    name: consul
EOF

Deploying Applications

Create namespaces and add them to the mesh.

kubectl create namespace bookstore

kubectl create namespace bookbuyer

kubectl create namespace bookwarehouse

fsm namespace add bookstore bookbuyer bookwarehouse

Deploy the applications.

kubectl apply -n bookwarehouse -f manifests/consul/bookwarehouse-consul.yaml

kubectl apply -n bookstore -f manifests/consul/bookstore-consul.yaml

kubectl apply -n bookbuyer -f manifests/consul/bookbuyer-consul.yaml

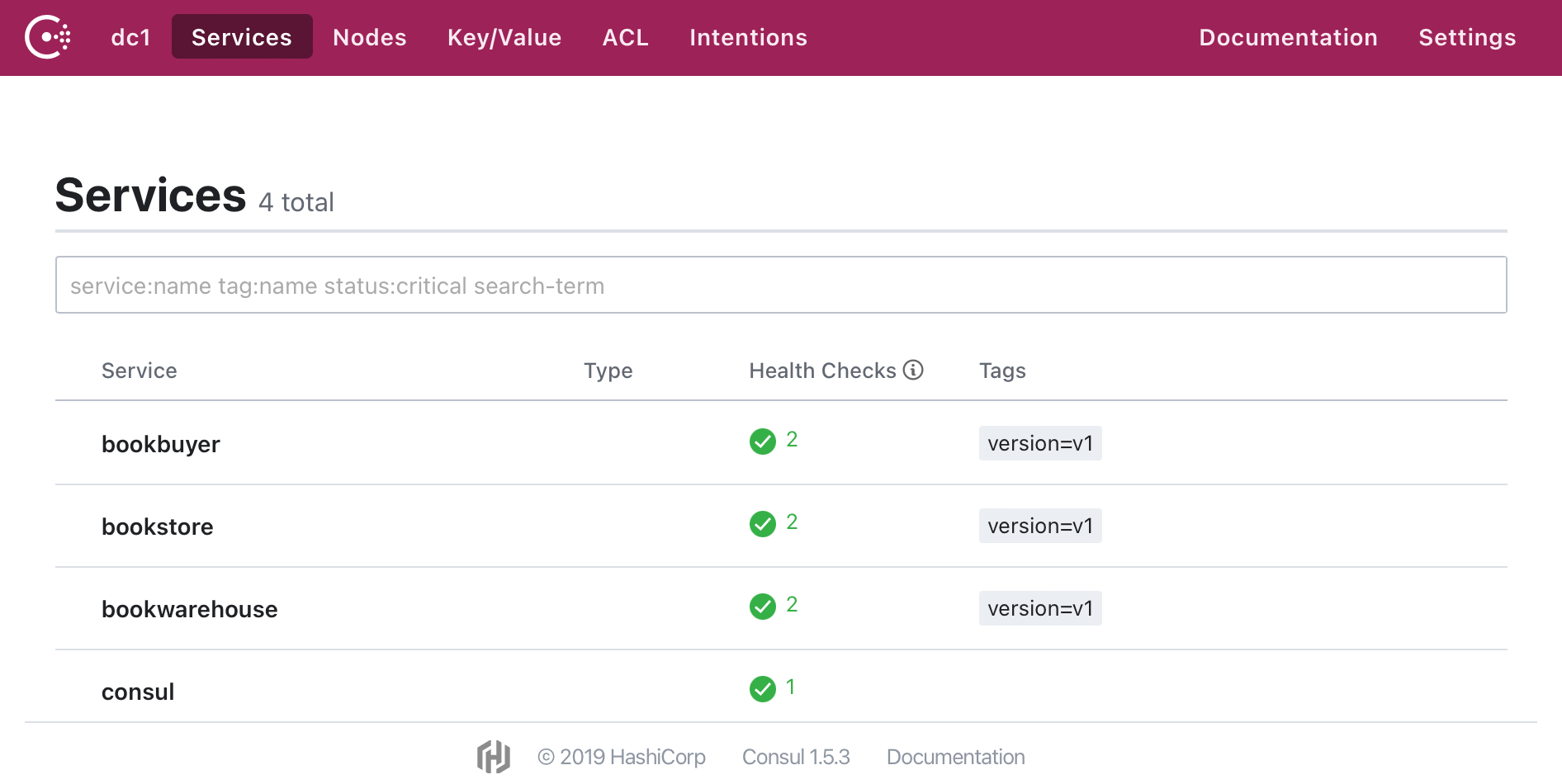

In the consul-derive namespace, you can see the synchronized Services.

kubectl get service -n consul-derive
NAME               TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)     AGE
bookstore          ClusterIP   None         <none>        14001/TCP   8m42s
bookstore-v1       ClusterIP   None         <none>        14001/TCP   8m42s
bookwarehouse      ClusterIP   None         <none>        14001/TCP   8m38s
bookwarehouse-v1   ClusterIP   None         <none>        14001/TCP   8m38s
bookbuyer          ClusterIP   None         <none>        14001/TCP   8m38s
bookbuyer-v1       ClusterIP   None         <none>        14001/TCP   8m38s
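The naming in this listing follows from the suffixTag setting: each Consul service yields one Service under its own name plus one with the value of its version tag appended. The function below is only a sketch of that naming as observed in the output, not the connector's code; it assumes tags of the form key=value, as in SPRING_CLOUD_CONSUL_DISCOVERY_TAGS="version=v1".

```shell
#!/usr/bin/env bash
# Sketch of the observed Service naming (an assumption, not the Consul connector's code).
# Usage: derived_service_names SERVICE SUFFIX_TAG [TAG...]
derived_service_names() {
  local service=$1 suffix_tag=$2
  shift 2
  # The plain Service name is always created.
  echo "$service"
  local tag
  for tag in "$@"; do
    # Only key=value tags whose key matches suffixTag produce a suffixed Service.
    if [[ $tag == "$suffix_tag="* ]]; then
      echo "$service-${tag#*=}"
    fi
  done
}

derived_service_names bookstore version version=v1   # prints bookstore and bookstore-v1
```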

Use port forwarding or create Ingress rules to view the running services: the page auto-refreshes and the counter increases continuously.

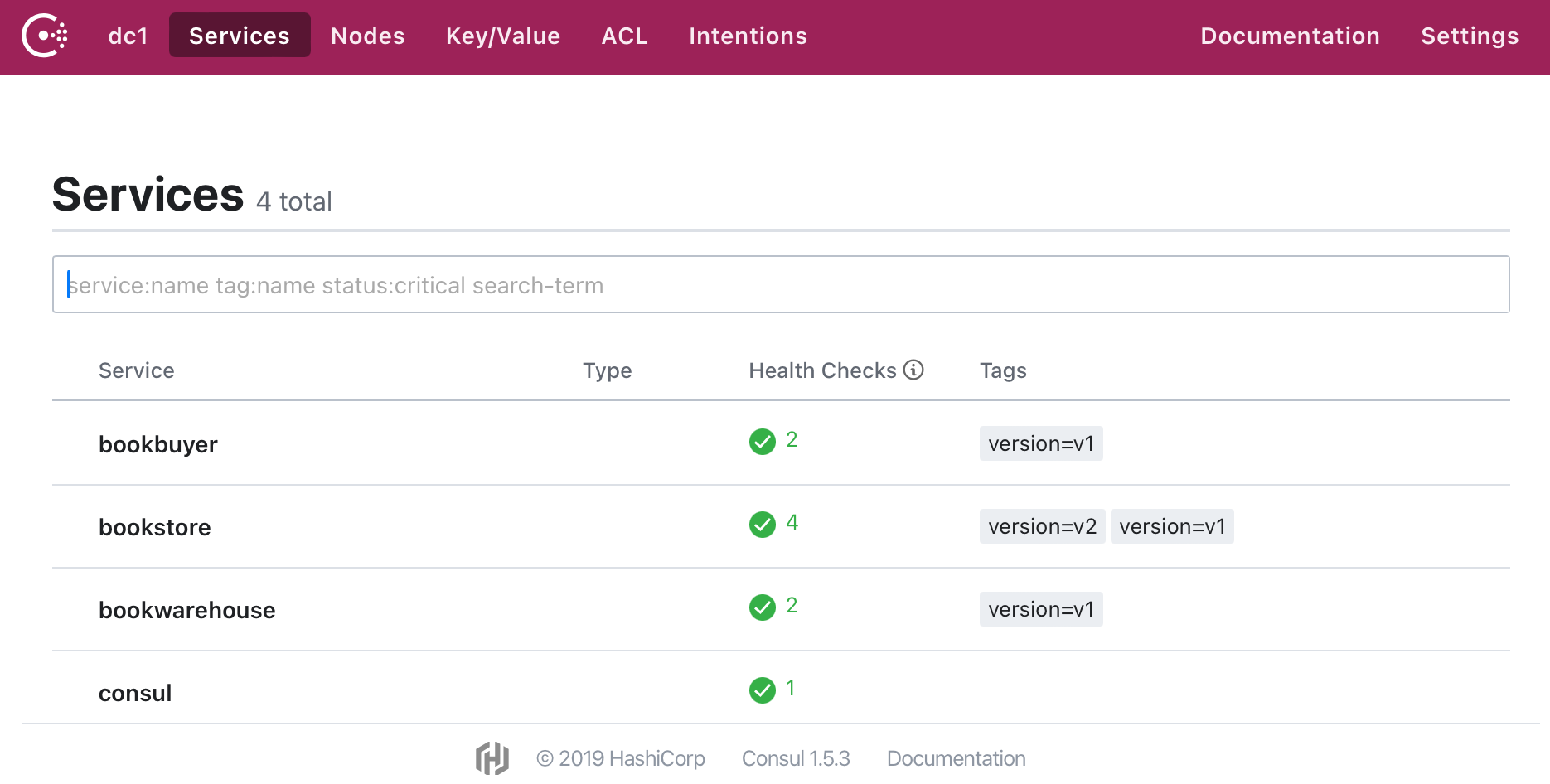

Canary Release Testing

Next comes a common scenario: a canary release. Following the examples in the FSM documentation, we use a weight-based canary release.

Before releasing the new version, create the TrafficSplit bookstore-split so that all traffic is routed to bookstore-v1:

kubectl apply -n consul-derive -f - <<EOF
apiVersion: split.smi-spec.io/v1alpha4
kind: TrafficSplit
metadata:
  name: bookstore-split
spec:
  service: bookstore
  backends:
  - service: bookstore-v1
    weight: 100
  - service: bookstore-v2
    weight: 0
EOF

Then deploy the new version bookstore-v2:

kubectl apply -n bookstore -f ./manifests/consul/bookstore-v2-consul.yaml

At this point, viewing the bookstore-v2 page via port forwarding shows that its counter does not change, indicating that no traffic is reaching the new version of the service.

Next, modify the traffic split strategy to route 50% of the traffic to the new version, and you will see the bookstore-v2 counter begin to increase.

kubectl apply -n consul-derive -f - <<EOF
apiVersion: split.smi-spec.io/v1alpha4
kind: TrafficSplit
metadata:
  name: bookstore-split
spec:
  service: bookstore
  backends:
  - service: bookstore-v1
    weight: 50
  - service: bookstore-v2
    weight: 50
EOF

Continue adjusting the strategy to route 100% of the traffic to the new version. The counter for the old version bookstore-v1 will cease to increase.

kubectl apply -n consul-derive -f - <<EOF
apiVersion: split.smi-spec.io/v1alpha4
kind: TrafficSplit
metadata:
  name: bookstore-split
spec:
  service: bookstore
  backends:
  - service: bookstore-v1
    weight: 0
  - service: bookstore-v2
    weight: 100
EOF
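The three TrafficSplit manifests above differ only in their two weights. As a minimal sketch, assuming a POSIX-compatible shell such as bash or dash, the hypothetical helper render_bookstore_split (not part of FSM) prints the same bookstore-split manifest for a given bookstore-v1 weight and assigns the remainder to bookstore-v2, so the two weights always sum to 100 as in the manifests above.

```shell
# Hypothetical helper (not part of FSM): print the bookstore-split TrafficSplit
# with V1_WEIGHT percent of traffic on bookstore-v1 and the rest on bookstore-v2.
# Usage: render_bookstore_split V1_WEIGHT
render_bookstore_split() {
  v1=$1
  # Accept only plain integers without leading zeros.
  case "$v1" in
    ''|*[!0-9]*|0?*)
      echo "usage: render_bookstore_split <0-100>" >&2
      return 1 ;;
  esac
  if [ "$v1" -gt 100 ]; then
    echo "usage: render_bookstore_split <0-100>" >&2
    return 1
  fi
  # The new version receives whatever the old version does not.
  v2=$((100 - v1))
  cat <<EOF
apiVersion: split.smi-spec.io/v1alpha4
kind: TrafficSplit
metadata:
  name: bookstore-split
spec:
  service: bookstore
  backends:
  - service: bookstore-v1
    weight: $v1
  - service: bookstore-v2
    weight: $v2
EOF
}
```

For example, render_bookstore_split 50 | kubectl apply -n consul-derive -f - applies the 50/50 split; stepping the argument from 100 down to 0 reproduces the rollout above one stage at a time.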

3 - Ingress

3.1 - Ingress with Service Mesh

FSM can optionally use the FSM ingress controller and Pipy-based edge proxies to route external traffic to the Service Mesh backend. This guide demonstrates how to configure HTTP ingress for services managed by the FSM service mesh.

Prerequisites

- Kubernetes cluster version v1.19.0 or higher.

- kubectl available to interact with the API server.

- FSM CLI installed.

- FSM Ingress Controller installed, following the installation document.

Demo

Assume that FSM is installed in the fsm-system namespace with the mesh name fsm.

export FSM_NAMESPACE=fsm-system # Replace fsm-system with the namespace where FSM is installed

export FSM_MESH_NAME=fsm # Replace fsm with the FSM mesh name

Save the external IP address and port of the ingress gateway (the fsm-ingress service); they will be used later to test access to the backend application.

export ingress_host="$(kubectl -n "$FSM_NAMESPACE" get service fsm-ingress -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"

export ingress_port="$(kubectl -n "$FSM_NAMESPACE" get service fsm-ingress -o jsonpath='{.spec.ports[?(@.name=="http")].port}')"

The next step is to deploy the sample httpbin service.

# Create a namespace

kubectl create ns httpbin

# Add the namespace to the mesh

fsm namespace add httpbin

# Deploy the application

kubectl apply -f https://raw.githubusercontent.com/flomesh-io/fsm-docs/main/manifests/samples/httpbin/httpbin.yaml -n httpbin

Ensure that the httpbin service and pod are up and running:

kubectl get pods,svc -n httpbin

NAME                           READY   STATUS            RESTARTS   AGE
pod/httpbin-5c4bbfb664-xsk7j   0/2     PodInitializing   0          29s

NAME              TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)     AGE
service/httpbin   ClusterIP   10.43.83.102   <none>        14001/TCP   30s

In the sample output above the pod is still initializing; wait until READY shows 2/2 and STATUS shows Running before continuing.

HTTP Ingress

Next, create the necessary Ingress and IngressBackend configurations to allow external clients to access port 14001 of the httpbin service in the httpbin namespace. Because TLS is not used, the connection from the fsm-ingress gateway to the httpbin backend pod is not encrypted.

kubectl apply -f - <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: httpbin
  namespace: httpbin
spec:
  ingressClassName: pipy
  rules:
  - host: httpbin.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: httpbin
            port:
              number: 14001
---
kind: IngressBackend
apiVersion: policy.flomesh.io/v1alpha1
metadata:
  name: httpbin
  namespace: httpbin
spec:
  backends:
  - name: httpbin
    port:
      number: 14001 # targetPort of httpbin service
      protocol: http
  sources:
  - kind: Service
    namespace: "$FSM_NAMESPACE"
    name: fsm-ingress
EOF

External clients should now be able to access the httpbin service, provided the Host header of the HTTP request is httpbin.org.

curl -sI http://"$ingress_host":"$ingress_port"/get -H "Host: httpbin.org"

HTTP/1.1 200 OK
server: gunicorn/19.9.0
date: Tue, 05 Jul 2022 07:34:11 GMT
content-type: application/json
content-length: 241
access-control-allow-origin: *
access-control-allow-credentials: true
connection: keep-alive
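When this check is scripted rather than read by eye, only the status line matters. A minimal sketch, assuming a POSIX-compatible shell: the hypothetical helper assert_status (not part of FSM or curl) takes the expected code and the header block printed by curl -sI, strips the carriage return that HTTP header lines end with, and compares the second field of the first line.

```shell
# Hypothetical helper (not part of FSM or curl): compare the status code on the
# first line of `curl -sI` output with an expected value.
# Usage: assert_status EXPECTED_CODE "HEADERS"
assert_status() {
  expected=$1
  # Take the status line, drop the trailing CR, and keep field 2 (the code).
  actual=$(printf '%s\n' "$2" | head -n 1 | tr -d '\r' | awk '{print $2}')
  if [ "$actual" = "$expected" ]; then
    echo "ok: got $actual"
  else
    echo "unexpected status: ${actual:-none} (wanted $expected)" >&2
    return 1
  fi
}
```

For example: assert_status 200 "$(curl -sI http://"$ingress_host":"$ingress_port"/get -H "Host: httpbin.org")". If you only need the code, curl can also print it directly with -o /dev/null -w '%{http_code}'.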

3.2 - Ingress Controller - Basics

This guide demonstrates how to serve HTTP and HTTPS traffic via the FSM Ingress Controller.

Prerequisites

- Kubernetes cluster version v1.19.0 or higher.

- kubectl available to interact with the API server.

- FSM CLI installed.

- FSM Ingress Controller installed, following the installation document.

Sample Application

The example application used here is accessible over HTTP on port 8000 and over HTTPS on port 8443, and serves the following URIs:

- / returns a simple HTML page.

- /hi returns a 200 response with the string Hi, there!

- /api/private returns a 401 response with the string Staff only.

To provide HTTPS, a CA certificate and server certificate need to be issued for the application first.

openssl genrsa 2048 > ca-key.pem

openssl req -new -x509 -nodes -days 365000 \
  -key ca-key.pem \
  -out ca-cert.pem \
  -subj '/CN=flomesh.io'

openssl genrsa -out server-key.pem 2048

openssl req -new -key server-key.pem -out server.csr -subj '/CN=example.com'

openssl x509 -req -in server.csr -CA ca-cert.pem -CAkey ca-key.pem -CAcreateserial -out server-cert.pem -days 365

Before deploying the sample service, create a secret that stores the certificate and key; the secret is then mounted into the application pod.

kubectl create namespace httpbin

# mount self-signed cert to sample app pod via secret
kubectl create secret generic -n httpbin server-cert \
  --from-file=./server-cert.pem \
  --from-file=./server-key.pem

kubectl apply -n httpbin -f - <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: httpbin
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
  labels:
    app: httpbin
    service: httpbin
spec:
  ports:
  - port: 8443
    name: https
  - port: 8000
    name: http
  selector:
    app: httpbin
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
  labels:
    app: httpbin
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpbin
  template:
    metadata:
      labels:
        app: httpbin
    spec:
      containers:
      - name: pipy
        image: addozhang/httpbin:latest
        env:
        - name: PIPY_CONFIG_FILE
          value: /etc/pipy/tutorial/gateway/main.js
        ports:
        - containerPort: 8443
        - containerPort: 8000
        volumeMounts:
        - name: cert
          mountPath: "/etc/pipy/tutorial/gateway/secret"
          readOnly: true
      volumes:
      - name: cert
        secret:
          secretName: server-cert
EOF

Basic configurations

HTTP Protocol

In the following example, an Ingress resource is defined that routes requests with host example.com and paths / and /hi to the back-end service httpbin listening on port 8000.

Note that the Ingress resource and the back-end service must belong to the same namespace.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: httpbin
spec:
  ingressClassName: pipy
  rules:
  - host: example.com
    http:
      paths:
      - path: /
        pathType: Exact
        backend:
          service:
            name: httpbin
            port:
              number: 8000
      - path: /hi
        pathType: Exact
        backend:
          service:
            name: httpbin
            port:
              number: 8000

Explanation of some of the fields:

- metadata.name defines the resource name of the Ingress.

- spec.ingressClassName specifies which ingress controller implementation handles the resource. Each ingress controller defines its own IngressClass name; here we use pipy. The installed ingress controllers can be listed with kubectl get ingressclass.

- spec.rules defines the routing rules.

- host defines the hostname example.com.

- paths defines two path rules: requests for the path / and for the URI /hi.

- backend defines the backend service httpbin and port 8000 used to handle each path rule.
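Both rules above use pathType: Exact, so under the Kubernetes Ingress semantics a request for /get, for instance, matches neither rule. To make the difference from Prefix concrete, here is a minimal sketch of the two matching rules as the Kubernetes Ingress API documents them: Exact compares the whole path, while Prefix compares "/"-separated elements and ignores a trailing slash on the rule. The function ingress_path_matches is hypothetical and only models the API semantics; it is not the FSM Ingress Controller's implementation.

```shell
# Hypothetical model of Ingress path matching (networking.k8s.io/v1 semantics),
# not FSM code. Usage: ingress_path_matches TYPE RULE_PATH REQUEST_PATH
# Returns 0 on a match, 1 on no match, 2 for an unsupported pathType.
ingress_path_matches() {
  ptype=$1 rule=$2 req=$3
  case "$ptype" in
    Exact)
      # Exact: the request path must equal the rule path (case-sensitive).
      [ "$req" = "$rule" ] ;;
    Prefix)
      # Prefix: element-wise match; drop one trailing slash from the rule,
      # so "/" becomes empty and matches every path.
      rule=${rule%/}
      [ -z "$rule" ] && return 0
      # Match the rule itself, or the rule followed by a "/" boundary.
      case "$req" in
        "$rule"|"$rule"/*) return 0 ;;
        *) return 1 ;;
      esac ;;
    *)
      return 2 ;;
  esac
}
```

With Prefix, /foo matches /foo and /foo/bar but not /foobar, because matching stops at path-element boundaries rather than at arbitrary characters.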

Looking at the Ingress Controller Service, you can see that its type is LoadBalancer and its external address is 10.0.2.4, which is the node's IP address.

kubectl get svc -n fsm-system -l app=fsm-ingress

NAME          TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
fsm-ingress   LoadBalancer   10.43.243.124   10.0.2.4      80:30508/TCP   16h

With the Ingress configuration above applied, we can access the /hi and / endpoints of the httpbin service through the node's IP address and port 80.

export HOST_IP=$(ip addr show eth0 | grep 'inet ' | awk '{print $2}' | cut -d/ -f1)

curl http://example.com/hi --connect-to example.com:80:$HOST_IP:80

Hi, there!

curl http://example.com/ --connect-to example.com:80:$HOST_IP:80

<!DOCTYPE html>
<html>
<head>
<title>Hi, Pipy!</title>
</head>
<body>
<h1>Hi, Pipy!</h1>
<p>This is a web page served from Pipy.</p>
</body>
</html>

HTTPS protocol

This example shows how to configure the ingress controller to support HTTPS access. By default, FSM Ingress does not enable TLS ingress; turn it on with the parameter --set fsm.ingress.tls.enabled=true during installation.

Alternatively, run the command below to enable ingress TLS after installation.

export FSM_NAMESPACE=fsm-system

kubectl patch meshconfig fsm-mesh-config -n "$FSM_NAMESPACE" -p '{"spec":{"ingress":{"tls":{"enabled":true}}}}' --type=merge

The Ingress resource in the following example configures the URL https://example.com for accessing the ingress.

- spec.tls is the dedicated field for TLS configuration and can configure multiple HTTPS ingresses.

- hosts configures SNI and can list multiple SNI names. Here example.com is used; the wildcard *.example.com is also supported.

- secretName specifies the Secret that stores the certificate and key. Note that the Ingress resource and the Secret must belong to the same namespace.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: httpbin
spec:
  ingressClassName: pipy
  rules:
  - host: example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: httpbin
            port:
              number: 8000
  tls:
  - hosts:
    - example.com
    secretName: ingress-cert
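The hosts entries decide which TLS server names (SNI) the configuration applies to. Under the usual TLS wildcard convention (RFC 6125), *.example.com covers exactly one extra DNS label: it matches foo.example.com but neither example.com itself nor a.b.example.com. The sketch below models that convention, assuming FSM follows it (the text above only states that wildcards are supported); sni_matches is a hypothetical helper, not FSM code.

```shell
# Hypothetical sketch (not FSM code): does TLS server name HOST match a
# spec.tls hosts entry PATTERN? Usage: sni_matches PATTERN HOST
# A leading "*." matches exactly one DNS label (usual RFC 6125 convention).
sni_matches() {
  # DNS names are case-insensitive, so compare in lower case.
  pattern=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  host=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
  case "$pattern" in
    '*.'*)
      suffix=${pattern#\*}        # "*.example.com" -> ".example.com"
      case "$host" in
        *"$suffix") ;;
        *) return 1 ;;
      esac
      label=${host%"$suffix"}     # "foo.example.com" -> "foo"
      # The wildcard must stand for one non-empty label containing no dots.
      case "$label" in
        ''|*.*) return 1 ;;
        *) return 0 ;;
      esac ;;
    *)
      # Non-wildcard entries must match the host name exactly.
      [ "$host" = "$pattern" ] ;;
  esac
}
```

If both example.com and its subdomains should be served, list example.com and *.example.com as separate hosts entries.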

Issue TLS certificate